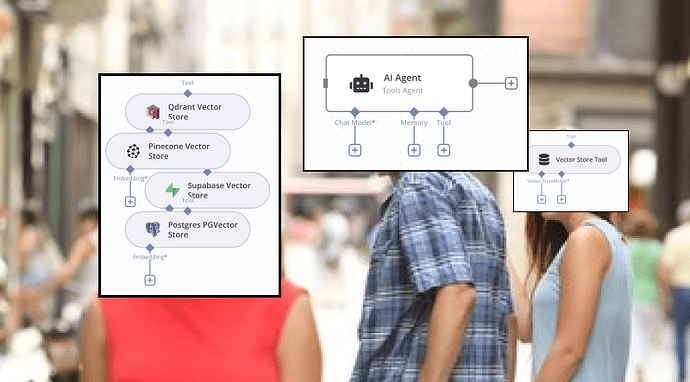

n8n 1.74.0, released last month (Jan 2025), brought a long-awaited update to vector stores for AI agents - we can now finally use Vector Stores directly as tools!

Wait, wasn’t this possible before?

This marks exactly 6 months since I posted my first review of the predecessor, the vector store tool, where I mentioned quite a few drawbacks in certain scenarios and how a few workarounds could be implemented using custom Langchain code. That wasn’t ideal, as it was quite hacky and only available to self-hosted users.

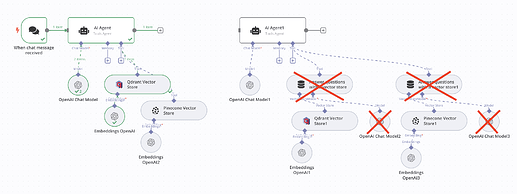

Well, you’ll be glad to know that all of the drawbacks have now been addressed with this update! Rather than having the vector store as part of a chain, the n8n team have created dedicated tool subnodes to link the agent directly to the vector store instead.

Ok, but what’s changed?

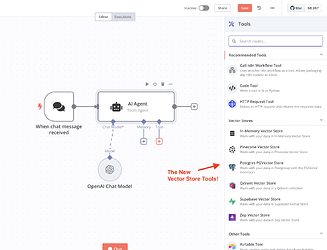

There are now 5 new AI Agent tool subnodes in the tools sidepanel, one for each of the vector stores you’ve come to know.

Having played with the new nodes briefly, here’s what I’m absolutely loving right now compared to the vector store tool.

1. No more intermediary LLM!


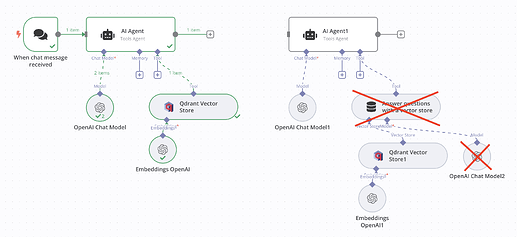

At last! Documents are no longer gated by the LLM which sits between the agent and the vector store. This was a pain for me as it was akin to “data loss” in scenarios which required exact items, wording or numbers to be presented back to the user.

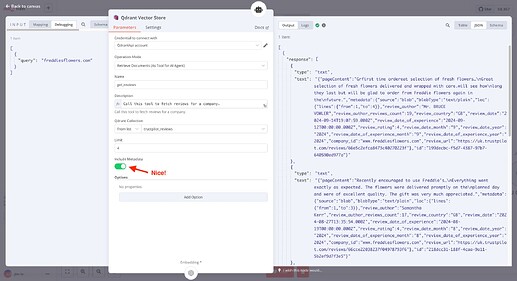
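To make the difference concrete, here’s a rough sketch in TypeScript. None of this is n8n’s actual code or API: the `Doc` type, the toy keyword `search`, and the `summarise` stand-in for the intermediary LLM are all hypothetical, made up purely to show why exact wording and numbers could get lost in the old flow.

```typescript
// Hypothetical shapes for illustration only; these are NOT n8n or LangChain APIs.
interface Doc {
  pageContent: string;
  metadata: Record<string, string>;
}

// A toy in-memory "vector store" that matches on keywords instead of embeddings.
const docs: Doc[] = [
  { pageContent: "Plan A costs $49.99 per month.", metadata: { url: "https://example.com/pricing" } },
  { pageContent: "Plan B costs $99.00 per month.", metadata: { url: "https://example.com/pricing" } },
];

function search(query: string): Doc[] {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);
  return docs.filter((d) => terms.some((t) => d.pageContent.toLowerCase().includes(t)));
}

// Stand-in for the intermediary LLM: it paraphrases, so exact figures can be dropped.
function summarise(found: Doc[]): string {
  return `Found ${found.length} pricing document(s) describing monthly plans.`;
}

// Old flow (assumed shape): agent -> tool -> intermediary LLM -> vector store.
function oldVectorStoreTool(query: string): string {
  return summarise(search(query));
}

// New flow (assumed shape): agent -> vector store, documents come back verbatim.
function vectorStoreAsTool(query: string): string[] {
  return search(query).map((d) => d.pageContent);
}
```

Calling `oldVectorStoreTool("plan")` hands the agent only the paraphrase, while `vectorStoreAsTool("plan")` hands it the original text of both documents, prices included.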

2. Document Metadata can now be returned!


A small but significant detail: there is now an option which can be toggled to return the metadata associated with vector store documents. Metadata is incredibly useful for storing related data which you might not want as part of the search query but which is still vital to have in context, e.g. navigational elements such as URLs. This change does away with the extra queries/tool calls previously needed to get this additional info.
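As a minimal sketch of what that toggle changes for the agent, assume a flag I’m calling `includeMetadata`. The name, the `StoredDoc` and `ToolResult` types, and the `formatResults` helper are all hypothetical and not taken from n8n:

```typescript
// Hypothetical types and flag name; an illustration, not n8n's implementation.
interface StoredDoc {
  pageContent: string;
  metadata: Record<string, string>;
}

interface ToolResult {
  text: string;
  metadata?: Record<string, string>;
}

// Shape the retrieved documents for the agent, optionally attaching metadata.
function formatResults(found: StoredDoc[], includeMetadata: boolean): ToolResult[] {
  return found.map((d) =>
    includeMetadata ? { text: d.pageContent, metadata: d.metadata } : { text: d.pageContent },
  );
}

const sample: StoredDoc[] = [
  { pageContent: "How to reset your password.", metadata: { url: "https://example.com/help/reset" } },
];

const withMeta = formatResults(sample, true);
const withoutMeta = formatResults(sample, false);
```

With the flag on, the agent can cite the URL in the same tool call; with it off, a second lookup would be needed to find out where the document came from.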

3. Much simpler and cleaner user experience!

By which I mean, less clutter to achieve the same or better result! If you’ve ever had to use more than one vector store for an agent, this update is definitely going to help tremendously with canvas space.

What does this mean for RAG in 2025?

New year, new resolutions! For me, this is going to be an easy recommend to start switching from the vector store tool to using the vector store as a tool directly (that’s quite a mouthful!). If you’ve been using my Langchain code node hack, this would also be a good time to switch, as the “Quality of Life” improvements are now officially supported.

If you’re new to building RAG in n8n, then this will make for a much smoother start. Be aware, though, that at the time of writing, many existing tutorials will be based on the old vector store tool - don’t fret, just switch it out with the equivalent vector store subnode. That’s the magic of n8n!

As for me, I’ve got a lot of templates to revisit and update! If you’re interested, please do check back on my templates in the coming weeks - jimleuk | n8n Creator

Conclusion

One of my favourite n8n updates this year and it’s only February! How is everyone else building RAG in 2025? Would you revisit past templates to make the transition? Leave a comment below.

Thanks!

Jim

If you enjoy posts on n8n and AI, check out my other posts in the forum!

Follow me on LinkedIn or Twitter/X.