So this is a bit tricky, but one possible approach would be to store that huge file on the file system and then read it in chunks. This only works if you’re not using n8n cloud, and you would need to adjust the paths used in this workflow to match your own folder structure. The basic idea is to have a parent workflow like this one, which downloads the large file, writes it to the file system and then prepares batches:

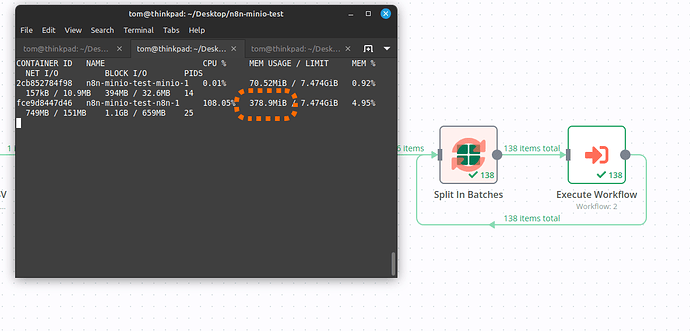
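To make the "prepare batches" step more concrete, here is a minimal sketch of the idea in plain Python rather than n8n nodes. The function names (`count_data_rows`, `prepare_batches`) are made up for illustration, and it assumes a CSV with one record per line and a single header row. Each batch is just a small descriptor (start row and row count), so the parent never has to hold the file contents itself.

```python
from typing import Dict, List


def count_data_rows(path: str) -> int:
    """Count data rows by streaming the file line by line.

    Assumption: one record per line and exactly one header line.
    """
    with open(path, "r", encoding="utf-8", newline="") as handle:
        total_lines = sum(1 for _ in handle)
    return max(0, total_lines - 1)  # do not count the header


def prepare_batches(total_rows: int, batch_size: int) -> List[Dict[str, int]]:
    """Describe each batch by its first data row (0-based) and its row count."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [
        {"start": start, "count": min(batch_size, total_rows - start)}
        for start in range(0, total_rows, batch_size)
    ]
```

Each descriptor would then be handed to the sub-workflow as a single item, so only a couple of numbers travel between the workflows instead of the rows themselves.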

Then call a sub-workflow that reads only a limited number of lines from that file at a time, does something with them and returns only a very small result set to the parent rather than the full data.
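Outside of n8n, the chunked read in the sub-workflow could look roughly like the sketch below. This is Python rather than an actual n8n node, `process_batch` is a hypothetical name, and the "do something" step is reduced to counting non-empty rows purely as a placeholder. It assumes the first row of the CSV is a header.

```python
import csv
from itertools import islice
from typing import Dict


def process_batch(path: str, start: int, count: int) -> Dict[str, int]:
    """Process `count` data rows beginning at data row `start` (0-based).

    Only one row is held in memory at a time, and only a tiny summary
    is returned to the caller instead of the rows themselves.
    """
    with open(path, "r", encoding="utf-8", newline="") as handle:
        reader = csv.reader(handle)
        next(reader, None)  # skip the header row (assumed to exist)
        rows = islice(reader, start, start + count)
        # Placeholder for the real work: count the non-empty rows.
        processed = sum(1 for row in rows if row)
    return {"start": start, "processed": processed}
```

Note that `islice` still scans the file from the top to reach `start`, so later batches take longer to reach their first row. Memory stays flat either way, which is the point here.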

Memory usage was quite low when testing this with a dummy file.

Now this is assuming your CSV file is properly formatted and the only problem is indeed its size. If not, it’d be great if you could share an actual example of one of these files (you can of course redact anything confidential; the only thing that matters is that any issues you might have can be reproduced with it).

Hope this helps!