Sure!

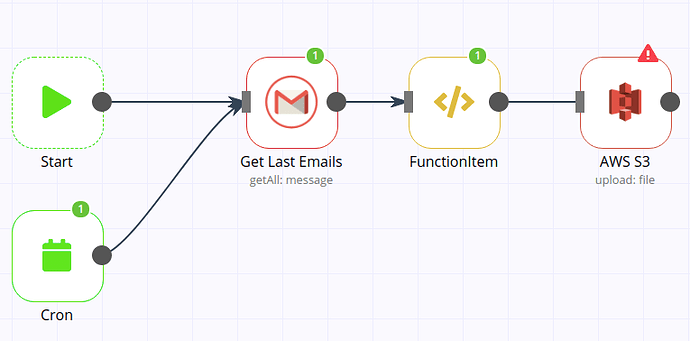

{

"name": "copy_attachments_to_s3",

"nodes": [

{

"parameters": {},

"name": "Start",

"type": "n8n-nodes-base.start",

"typeVersion": 1,

"position": [

300,

410

]

},

{

"parameters": {

"operation": "upload",

"bucketName": "bucket-name",

"fileName": "={{$node[\"Get Last Emails\"].binary.attachment_0.fileName}}",

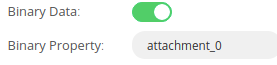

"binaryPropertyName": "attachment_0",

"additionalFields": {}

},

"name": "AWS S3",

"type": "n8n-nodes-base.awsS3",

"typeVersion": 1,

"position": [

930,

410

],

"credentials": {

"aws": "s3"

}

},

{

"parameters": {

"functionCode": "var d = new Date(item.date);\nvar year = d.getFullYear();\nvar month = d.getMonth() + 1;\nvar day = d.getDate();\n\nmonth = month < 10 ? \"0\" + month : month;\nday = day < 10 ? \"0\" + day : day;\nitem.formatted_date = year + \"-\" + month + \"-\" + day;\nitem.id = item.messageId.replace(\"<\", \"\").replace(\">\", \"\");\n\nreturn item;\n"

},

"name": "FunctionItem",

"type": "n8n-nodes-base.functionItem",

"typeVersion": 1,

"position": [

740,

410

]

},

{

"parameters": {

"triggerTimes": {

"item": [

{

"mode": "custom",

"cronExpression": "0 15 8 * * 1-5"

}

]

}

},

"name": "Cron",

"type": "n8n-nodes-base.cron",

"typeVersion": 1,

"position": [

300,

580

]

},

{

"parameters": {

"resource": "message",

"operation": "getAll",

"returnAll": true,

"additionalFields": {

"format": "resolved",

"q": "=has:attachment newer_than:1d"

}

},

"name": "Get Last Emails",

"type": "n8n-nodes-base.gmail",

"typeVersion": 1,

"position": [

560,

410

],

"credentials": {

"gmailOAuth2": "oauth-gmail"

}

}

],

"connections": {

"Start": {

"main": [

[

{

"node": "Get Last Emails",

"type": "main",

"index": 0

}

]

]

},

"Cron": {

"main": [

[

{

"node": "Get Last Emails",

"type": "main",

"index": 0

}

]

]

},

"Get Last Emails": {

"main": [

[

{

"node": "FunctionItem",

"type": "main",

"index": 0

}

]

]

},

"FunctionItem": {

"main": [

[

{

"node": "AWS S3",

"type": "main",

"index": 0

}

]

]

}

},

"active": false,

"settings": {},

"id": "20"

}
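
If you want to try the `FunctionItem` logic outside n8n, here is a minimal sketch you can run with plain Node.js. It mirrors the `functionCode` above (date formatted as `YYYY-MM-DD`, angle brackets stripped from `messageId`). The names `enrichItem`, `buildObjectKey`, and the sample item are made up for illustration and are not part of the workflow. Note that the workflow as exported uploads with the attachment's original file name; using `formatted_date` and `id` in the key is only one possible way to put those fields to work, not something the workflow does.

```javascript
// Standalone sketch of the FunctionItem step (hypothetical helper names).
// In n8n, `item` is provided by the node; here it is passed in explicitly.
function enrichItem(item) {
  const d = new Date(item.date);
  const year = d.getFullYear();
  // Zero-pad month and day, same result as the `< 10 ? "0" + x : x` checks.
  const month = String(d.getMonth() + 1).padStart(2, "0");
  const day = String(d.getDate()).padStart(2, "0");

  return {
    ...item,
    formatted_date: `${year}-${month}-${day}`,
    // Message-IDs usually look like "<id@host>"; drop the first "<" and ">".
    id: item.messageId.replace("<", "").replace(">", ""),
  };
}

// Assumption: one way to use the computed fields as an S3 object key.
// The exported workflow does NOT do this; it uses the attachment's fileName as-is.
function buildObjectKey(item, fileName) {
  return `${item.formatted_date}/${item.id}/${fileName}`;
}

// Sample data (invented). The date has no timezone offset, so it is
// interpreted in local time, just like getFullYear/getMonth/getDate.
const sample = {
  date: "2021-03-05T10:30:00",
  messageId: "<abc123@mail.example.com>",
};

const enriched = enrichItem(sample);
console.log(enriched.formatted_date, enriched.id);
console.log(buildObjectKey(enriched, "invoice.pdf"));
```

Inside n8n, the same idea would go into the `fileName` expression of the AWS S3 node, referencing the fields that `FunctionItem` adds to each item.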