@FelixL @MotazHakim

This method seems to work quite decently for me.

I’ve gone up to executing 25-30 sub-workflows in parallel.

In my case, that is where I settled on a comfortable threshold, given the peak resource consumption of the sub-workflow in question and the need to keep n8n from crashing.

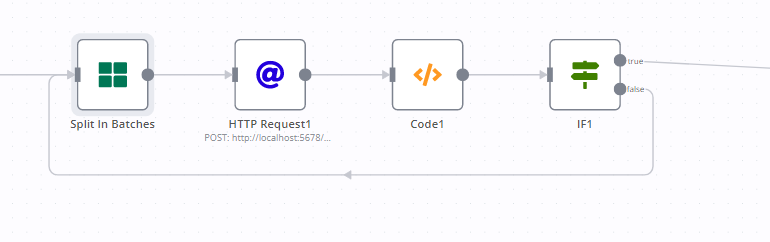

For example, in the main workflow:

Here, the Split In Batches node’s Batch Size is set to 25.
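Outside n8n, the same pattern (take the next 25 items, start them all at once, wait for the whole batch to finish, then move on) can be sketched in plain JavaScript. This only illustrates the batching idea and is not how n8n executes nodes internally. `runSubWorkflow` is a hypothetical stand-in for whatever actually triggers the sub-workflow, and its 10 ms delay just simulates work.

```javascript
// Hypothetical stand-in for one sub-workflow execution: the timeout only simulates work.
async function runSubWorkflow(item) {
  await new Promise((resolve) => setTimeout(resolve, 10));
  return { id: item.id, done: true };
}

// Split the items into consecutive batches of `batchSize`, as Split In Batches does.
function splitInBatches(items, batchSize) {
  const batches = [];
  for (let i = 0; i < items.length; i += batchSize) {
    batches.push(items.slice(i, i + batchSize));
  }
  return batches;
}

// Start every run in a batch at once, then wait for the whole batch before
// starting the next one, so at most `batchSize` runs are ever in flight.
async function processInParallelBatches(items, batchSize, worker) {
  const results = [];
  for (const batch of splitInBatches(items, batchSize)) {
    const batchResults = await Promise.all(batch.map((item) => worker(item)));
    results.push(...batchResults);
  }
  return results;
}

// Usage: 60 items with a batch size of 25 run as batches of 25, 25 and 10.
const items = Array.from({ length: 60 }, (_, i) => ({ id: i }));
processInParallelBatches(items, 25, runSubWorkflow).then((results) => {
  console.log(results.length); // 60
});
```

Because each batch waits for its slowest run before the next one starts, the batch size caps how many sub-workflows are running at the same time, which is what keeps resource usage bounded.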

The Code1 node runs in Run Once for All Items mode and its code is just `return {};`, so that the IF node isn't triggered multiple times.
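Why collapsing the batch matters can be shown with a toy model in plain JavaScript. It assumes, as a simplification of n8n's behaviour, that an item is `{ json: {...} }` and that the IF node evaluates its condition once for every item it receives. `ifNode`, `code1` and `noItemsLeft` are hypothetical names for illustration, not n8n APIs.

```javascript
// Toy model, not n8n's engine: the IF node evaluates its condition once per
// incoming item and routes each item to the true or false branch.
function ifNode(items, condition) {
  const trueBranch = [];
  const falseBranch = [];
  for (const item of items) {
    (condition(item) ? trueBranch : falseBranch).push(item);
  }
  return { trueBranch, falseBranch };
}

// Code1 in "Run Once for All Items" mode: it receives the whole batch in one
// call and, like the node body `return {};`, emits a single empty item.
function code1(_items) {
  return [{ json: {} }];
}

// Hypothetical "are we done?" condition; it only counts how often it runs.
let evaluations = 0;
function noItemsLeft(_item) {
  evaluations += 1;
  return false;
}

// Pretend output of one batch of 25 sub-workflow runs.
const batchOutput = Array.from({ length: 25 }, (_, i) => ({ json: { id: i } }));

ifNode(batchOutput, noItemsLeft);
console.log(evaluations); // 25

evaluations = 0;
ifNode(code1(batchOutput), noItemsLeft);
console.log(evaluations); // 1
```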

Note: this arrangement is sometimes a real necessity. When a large set of data needs to be processed, doing it without parallelization can easily take up to 15-30 times longer!
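A rough back-of-the-envelope estimate shows where a factor of that size can come from. It assumes N items, a batch size B, and that every sub-workflow run takes about the same time t whether or not other runs happen alongside it. Real runs compete for CPU and memory, so treat this as an upper bound:

```latex
\begin{aligned}
T_{\text{sequential}} &= N\,t \\
T_{\text{parallel}}   &\approx \left\lceil \frac{N}{B} \right\rceil t \\
\text{speedup}        &= \frac{T_{\text{sequential}}}{T_{\text{parallel}}} \approx \frac{N}{\lceil N/B \rceil} \le B
\end{aligned}
```

For instance, with a hypothetical N = 1000 items and B = 25, that is ⌈1000/25⌉ = 40 batches instead of 1000 sequential runs, so at most 25× faster. The bound is B itself, i.e. 25-30× for the batch sizes mentioned above.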