Hi! I’ve created a deployment file with a load balancer IP on my local network. I also set up a PVC for the deployment, which is working great for persisting data.

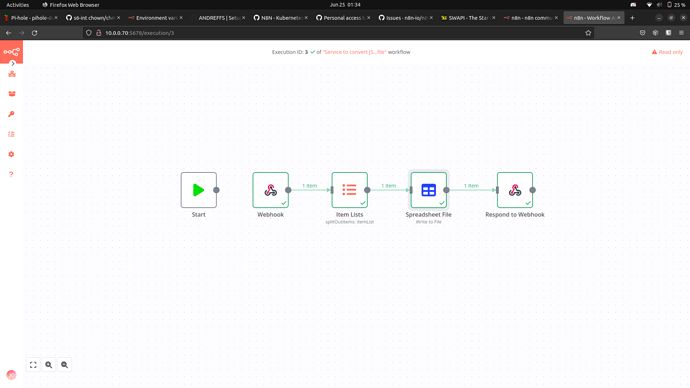

I built a workflow with a Webhook trigger (from a template) and tested it with Postman against my load balancer IP. I only had to change `localhost` to the load balancer IP, and everything worked great!

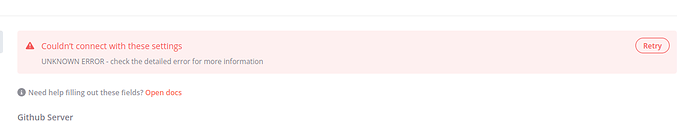

However, any outbound HTTP request I make fails with the error below.

Describe the issue/error/question

I was originally trying to add my GitHub personal access token (PAT) for authentication. The credential saved as expected, but then I got a fairly nondescript error:

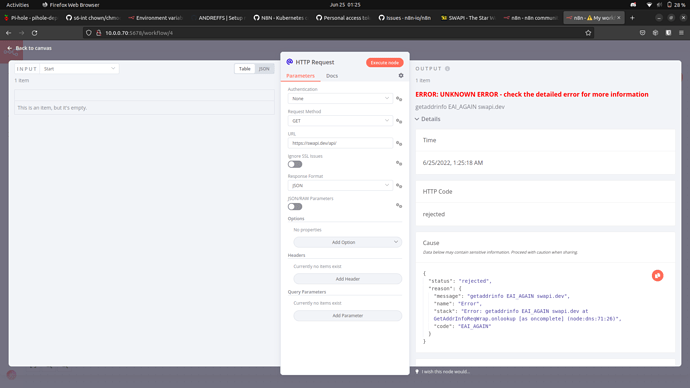

I then tried a plain HTTP Request node doing a GET against the Star Wars API (my favorite for testing) at https://swapi.dev/api/. This time I get what looks like a DNS resolution error:

What is the error message (if any)?

```json
{"status":"rejected","reason":{"message":"getaddrinfo EAI_AGAIN swapi.dev","name":"Error","stack":"Error: getaddrinfo EAI_AGAIN swapi.dev\n at GetAddrInfoReqWrap.onlookup [as oncomplete] (node:dns:71:26)","code":"EAI_AGAIN"}}
```
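The stack trace points at `GetAddrInfoReqWrap.onlookup`, i.e. Node's `dns.lookup()`, which hands the query to the system resolver (`getaddrinfo`). To check whether the pod itself can resolve a host, independently of n8n, a minimal sketch might look like this. It assumes Node.js with `@types/node`; the helper names and the `check-dns.js` file name are hypothetical, and the error-code descriptions are rough readings, not official definitions.

```typescript
import { lookup } from "node:dns/promises";

// Rough, hedged readings of error codes that dns.lookup() commonly reports.
function describeDnsError(code: string | undefined): string {
  switch (code) {
    case "EAI_AGAIN":
      // getaddrinfo's "temporary failure": typically no DNS server answered in time.
      return "temporary failure: the resolver did not answer (unreachable or timed out)";
    case "ENOTFOUND":
      // Node reports "name does not exist" style getaddrinfo failures as ENOTFOUND.
      return "the resolver answered, but the name was not found";
    default:
      return `other failure (${code ?? "no code"})`;
  }
}

// Resolve a host via dns.lookup(), the same getaddrinfo path seen in the stack trace.
async function checkHost(host: string): Promise<string> {
  try {
    const { address, family } = await lookup(host);
    return `${host} -> ${address} (IPv${family})`;
  } catch (err) {
    const code = (err as { code?: string }).code;
    return `${host}: ${code ?? "?"}: ${describeDnsError(code)}`;
  }
}

// Hypothetical CLI usage: node check-dns.js swapi.dev
const host = process.argv[2];
if (host) {
  checkHost(host).then(console.log);
}
```

Compiled to JavaScript and run with `node` inside the n8n container (for example via `microk8s kubectl exec`), an `EAI_AGAIN` result there too would suggest the problem lies in the pod's DNS setup rather than in n8n itself.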

Please share the workflow

Information on your n8n setup

- n8n version: 0.183.0

- Database you’re using (default: SQLite): SQLite (in a PVC)

- Running n8n with the execution process [own(default), main]: own

- Running n8n via [Docker, npm, n8n.cloud, desktop app]: Docker image, running on a local microk8s cluster.

Deployment/Service file:

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: n8n-deployment
  labels:
    app: n8n
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
  selector:
    matchLabels:
      app: n8n
  template:
    metadata:
      labels:
        app: n8n
    spec:
      containers:
        - name: n8n
          image: n8nio/n8n
          imagePullPolicy: Always
          ports:
            - name: web
              containerPort: 5678
          volumeMounts:
            - name: n8n-data
              mountPath: /home/node/.n8n
      volumes:
        - name: n8n-data
          persistentVolumeClaim:
            claimName: pvc-n8n
      nodeSelector:
        server: mini
---
kind: Service
apiVersion: v1
metadata:
  name: n8n-service
spec:
  selector:
    app: n8n
  ports:
    - name: web
      protocol: TCP
      port: 5678
      targetPort: 5678
  type: LoadBalancer
  loadBalancerIP: 10.0.0.70
```
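The Deployment above sets no `dnsPolicy`, so Kubernetes applies the default, `ClusterFirst`, under which the pod's `/etc/resolv.conf` normally points at the cluster DNS service. Because `getaddrinfo` reads that file, inspecting it from inside the pod shows which nameserver the failing lookup is actually querying. A small sketch, assuming Node.js with `@types/node` (the helper and file names are hypothetical), that parses the `nameserver`, `search`/`domain` and `options` lines:

```typescript
import { readFileSync } from "node:fs";

interface ResolvConf {
  nameservers: string[];
  search: string[];
  options: string[];
}

// Parse the resolv.conf directives that matter for getaddrinfo-based lookups.
function parseResolvConf(text: string): ResolvConf {
  const conf: ResolvConf = { nameservers: [], search: [], options: [] };
  for (const rawLine of text.split("\n")) {
    // Drop comments (# or ;) and surrounding whitespace.
    const line = rawLine.replace(/[#;].*$/, "").trim();
    if (line === "") continue;
    const [keyword, ...values] = line.split(/\s+/);
    if (keyword === "nameserver" && values.length > 0) {
      conf.nameservers.push(values[0]);
    } else if (keyword === "search" || keyword === "domain") {
      conf.search = values; // the last search/domain line wins
    } else if (keyword === "options") {
      conf.options.push(...values);
    }
  }
  return conf;
}

// Only read a real file when asked, e.g.: node show-resolv.js /etc/resolv.conf
const path = process.argv[2];
if (path) {
  console.log(parseResolvConf(readFileSync(path, "utf8")));
}
```

If the listed `nameserver` does not answer, that would be consistent with the `EAI_AGAIN` above. On microk8s, cluster DNS (CoreDNS) is provided by the `dns` addon, so its status may be worth checking.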

For good measure, here are screenshots of the webhook workflow.