Describe the problem/error/question

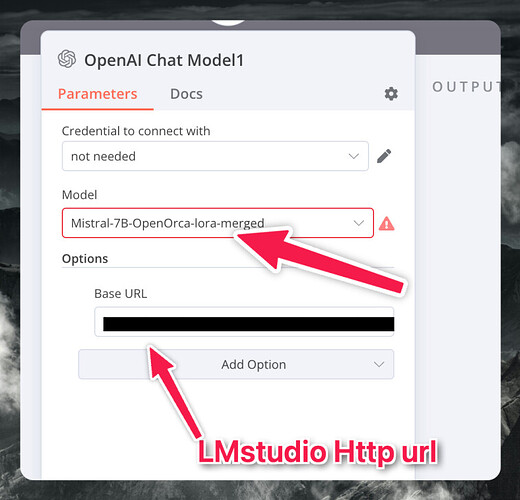

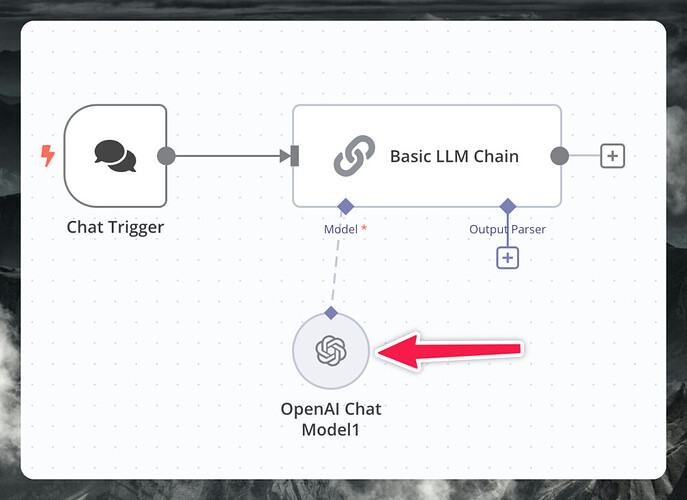

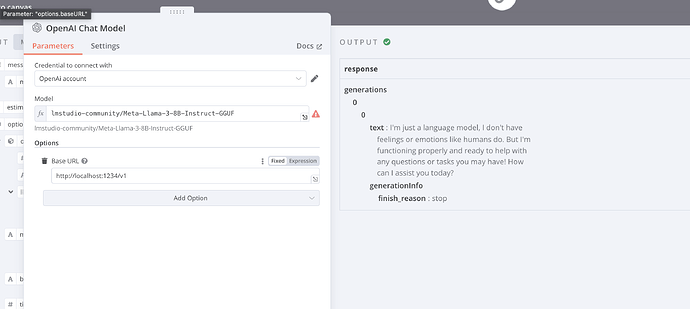

Hi guys, how do I connect LM Studio to the n8n Basic LLM Chain? There is no option to choose a self-hosted model. I found that I can add an OpenAI model and change the URL to point at my self-hosted model, but it's not working. LM Studio gives a 200 error. I guess it's because there is no API key in the credentials.

What is the error message (if any)?

I got error 200 on the LM Studio side. I guess it's because I'm using the OpenAI node, which requires an API key, but LM Studio doesn't require one.
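To check the LM Studio server independently of n8n, here is a minimal Python sketch of the request I would expect an OpenAI-style client to send. It rests on a few assumptions that are not confirmed anywhere in my setup: that LM Studio serves an OpenAI-compatible route at `/v1/chat/completions`, that the client appends `/chat/completions` to whatever base URL it is given, and that the API key can be any placeholder string (`not-needed` is just a made-up value). The helper names `chat_completions_url`, `build_request` and `send_chat` are hypothetical and exist only for this illustration.

```python
import json
import urllib.request


def chat_completions_url(base_url: str) -> str:
    """Join the base URL and the chat route the way an OpenAI-style client is assumed to."""
    return base_url.rstrip("/") + "/chat/completions"


def build_request(base_url: str, model: str, prompt: str,
                  api_key: str = "not-needed") -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    The placeholder api_key is an assumption: the server is expected to ignore it.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        chat_completions_url(base_url),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the first reply, assuming an OpenAI-shaped response."""
    request = build_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat("https://lmstudio.sergejs34.xyz/v1", "Mistral-7B-OpenOrca-lora-merged", "Introduce yourself")` would exercise the same model and prompt as my workflow. Note that under the path-appending assumption, a base URL that already ends in `/v1/chat/completions` would produce a doubled path, which is why the base URL in this call stops at `/v1`.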

Please share your workflow

{
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "3f65f6f9fb3cbf10072a7fc8c26aa0afc98ab64931665c6b618072d063390891"
  },
  "nodes": [
    {
      "parameters": {
        "public": true,
        "options": {}
      },
      "id": "8e2e6020-6529-4345-867c-3a2c46ecdfad",
      "name": "Chat Trigger",
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1,
      "position": [
        420,
        420
      ],
      "webhookId": "845ae96e-e3f0-4c6c-9429-6bcbb445a974"
    },
    {
      "parameters": {
        "prompt": "Introduce yourself",
        "messages": {
          "messageValues": [
            {
              "message": "Always answer in rhymes."
            },
            {
              "type": "HumanMessagePromptTemplate",
              "message": "=Introduce yourself"
            }
          ]
        }
      },
      "id": "a85470d4-cff4-4857-93c4-184b8d2809bb",
      "name": "Basic LLM Chain",
      "type": "@n8n/n8n-nodes-langchain.chainLlm",
      "typeVersion": 1.3,
      "position": [
        640,
        420
      ]
    },
    {
      "parameters": {
        "model": "Mistral-7B-OpenOrca-lora-merged",
        "options": {
          "baseURL": "https://lmstudio.sergejs34.xyz/v1/chat/completions"
        }
      },
      "id": "69051db9-da5a-4c31-9baa-4d282605f5ca",
      "name": "OpenAI Chat Model1",
      "type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",
      "typeVersion": 1,
      "position": [
        640,
        620
      ],
      "credentials": {
        "openAiApi": {
          "id": "Pfs8xX3ZtOPqOmeq",
          "name": "not needed"
        }
      }
    }
  ],
  "connections": {
    "Chat Trigger": {
      "main": [
        [
          {
            "node": "Basic LLM Chain",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "OpenAI Chat Model1": {
      "ai_languageModel": [
        [
          {
            "node": "Basic LLM Chain",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {}
}

Share the output returned by the last node

nothing

Information on your n8n setup

- n8n version: 1.26.0

- Database (default: SQLite): sqlite

- n8n EXECUTIONS_PROCESS setting (default: own, main): default

- Running n8n via (Docker, npm, n8n cloud, desktop app): docker

- Operating system: ubuntu