- n8n version: 1.101.1

- Database (default: SQLite): default

- n8n EXECUTIONS_PROCESS setting (default: own, main): default

- Running n8n via (Docker, npm, n8n cloud, desktop app): n8n cloud

- Operating system: n8n cloud

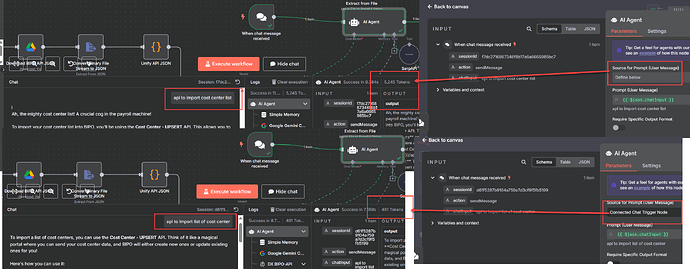

Please see the attached screenshot. When the AI Agent's source for prompt is set to “Define below” to read the user input, it consumes 5245 tokens. When the source for prompt is “Connected Chat Trigger Node”, it consumes only 491 tokens. That is over 10x more tokens, which is not cost effective.

I have to use “Connected chat trigger node” because I found that it is the only way to let the bot understand the attached content.

For example, the Source for Prompt is set as below:

"your message {{ $json.chatInput }}

your attached {{ $('When chat message received').first().binary.data0.data }}"
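A rough sketch of how a prompt like the one above could grow with the attachment size. It assumes that `binary.data0.data` holds the base64-encoded file content, which is not confirmed in this post, and it uses a 4-characters-per-token rule of thumb that is only a heuristic (real tokenizers may count base64 text quite differently). The function names and the 10 KB sample attachment are hypothetical.

```python
import base64
import math


def base64_length(num_bytes: int) -> int:
    """Character length of the padded base64 encoding of num_bytes bytes."""
    return 4 * math.ceil(num_bytes / 3)


def rough_token_estimate(num_chars: int, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate; chars_per_token is an assumed heuristic."""
    return math.ceil(num_chars / chars_per_token)


# Hypothetical 10 KB attachment standing in for an uploaded file.
attachment = bytes(range(256)) * 40
encoded = base64.b64encode(attachment).decode("ascii")

# Prompt shaped like the "Define below" expression in this post.
message_only = "your message hello"
with_attachment = f"{message_only}\n\nyour attached {encoded}"

print("message only:", rough_token_estimate(len(message_only)), "tokens (rough)")
print("with attachment:", rough_token_estimate(len(with_attachment)), "tokens (rough)")
```

Under these assumptions the attachment text dominates the prompt, so the estimate scales with the file size rather than with the typed message.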

I'm hoping for some suggestions. A couple of my chat requests have cost over 100k tokens, which makes it hard to continue testing.