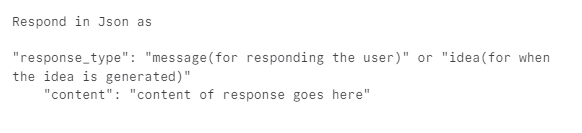

Describe the problem/error/question

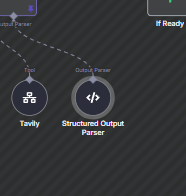

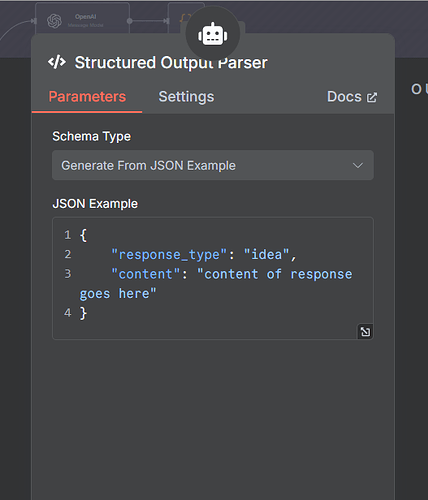

My agent uses an output parser that classifies each response as either “idea” or “message”.

Responses parsed as “idea” continue through the workflow, while responses parsed as “message” return [empty object].
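For readers who can't see the screenshots, the intended routing is roughly the following minimal TypeScript sketch. It is only an approximation under assumptions: the field names `type` and `content` and the function `routeParsedOutput` are hypothetical stand-ins. The real schema is defined in the output parser shown in the images and may differ.

```typescript
// Hypothetical shape of what the output parser returns.
// The actual schema lives in the workflow's output parser and may differ.
type ParsedOutput =
  | { type: "idea"; content: string }
  | { type: "message"; content: string };

// Hypothetical routing: "idea" continues through the workflow,
// "message" should be returned to the chat as-is.
function routeParsedOutput(parsed: ParsedOutput): string {
  if (parsed.type === "idea") {
    return `workflow: ${parsed.content}`;
  }
  return `chat: ${parsed.content}`;
}

console.log(routeParsedOutput({ type: "idea", content: "Build a habit tracker" }));
// prints: workflow: Build a habit tracker
console.log(routeParsedOutput({ type: "message", content: "Hi! How can I help?" }));
// prints: chat: Hi! How can I help?
```

The problem is that the “message” branch should behave like the second call above, but in most runs it returns [empty object] instead.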

It is worth mentioning that *sometimes* the message is output normally, roughly 1 in every 6 runs.

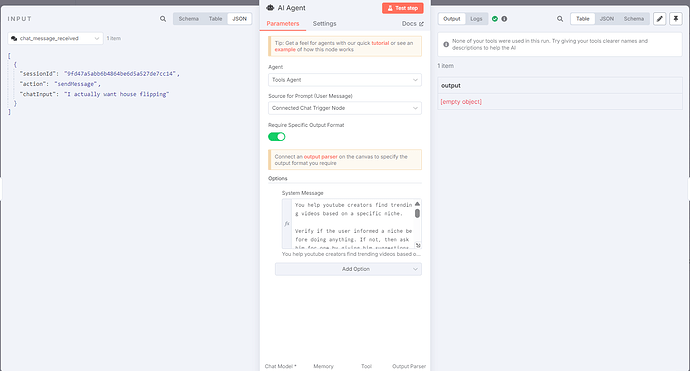

What I don’t understand is why this happens, since the chat model does generate a completion that is parsed correctly:

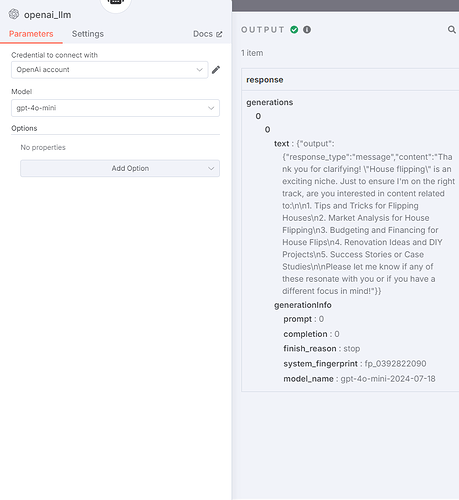

What is the error message (if any)?

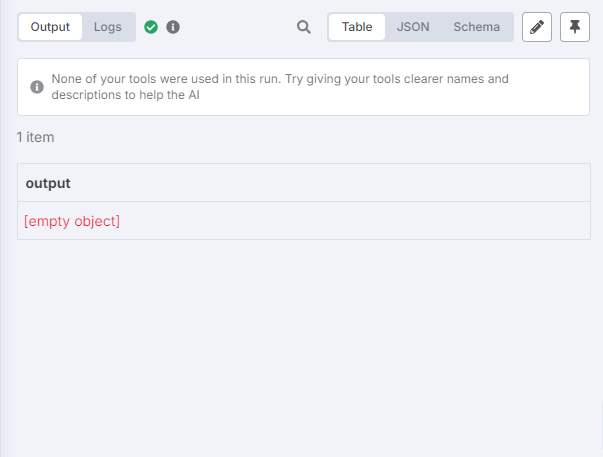

Please share your workflow

Share the output returned by the last node

[empty object]

[ERROR: Cannot read properties of undefined (reading ‘replace’) [line 1]]
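This message is the standard JavaScript TypeError that V8-based runtimes such as Node.js throw when `.replace()` is called on a value that is `undefined`. The sketch below reproduces it under one assumption: that some step post-processes a text field (called `output` here, a hypothetical name) that is missing on the “message” items. It also shows a guarded variant using `??`. Which node in the workflow actually calls `replace` is not confirmed.

```typescript
// Hypothetical item shape; the field name `output` is an assumption.
interface AgentItem {
  output?: string;
}

// Unsafe: similar to an expression like {{ $json.output.replace(...) }}.
// Throws a TypeError when `output` is missing.
function cleanUnsafe(item: AgentItem): string {
  return (item.output as string).replace(/\n+/g, " ");
}

// Guarded: falls back to an empty string when `output` is missing.
function cleanSafe(item: AgentItem): string {
  return (item.output ?? "").replace(/\n+/g, " ");
}

try {
  cleanUnsafe({});
} catch (err) {
  // In Node.js this logs: Cannot read properties of undefined (reading 'replace')
  console.log((err as Error).message);
}

console.log(cleanSafe({ output: "Hello\n\nthere" })); // prints: Hello there
console.log(cleanSafe({}) === ""); // prints: true
```

If this is the cause, the empty object and the error would be two symptoms of the same missing field on the “message” branch.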

Information on your n8n setup

- n8n version: 1.89.2

- Database (default: SQLite): Default

- n8n EXECUTIONS_PROCESS setting (default: own, main): I'm not sure where to find this setting.

- Running n8n via (Docker, npm, n8n cloud, desktop app): n8n cloud

- Operating system: Windows 11