Describe the problem/error/question

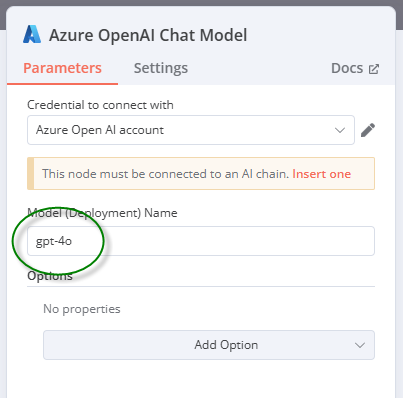

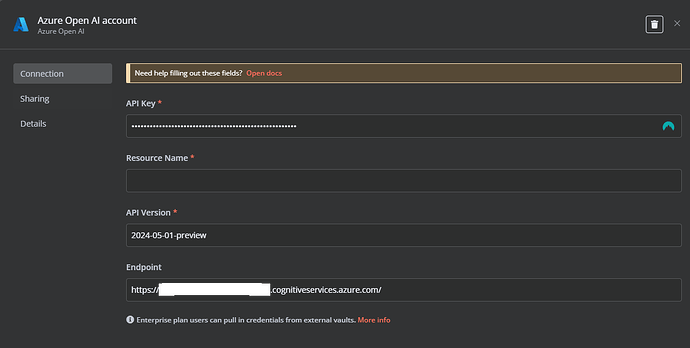

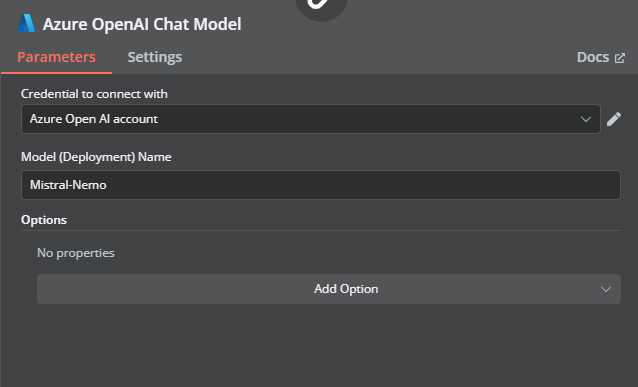

I have been trying to get the Azure OpenAI Chat Model node working as part of my flow, but every configuration I try returns "Invalid URL" when it attempts to connect.

I suspect it's a bug specific to the Azure OpenAI Chat Model node. I'm pretty sure I've tried everything, and would like to be proven wrong.

Tests:

- I successfully connected to my Azure OpenAI model via the n8n HTTP Request node, using the same configuration.

- I successfully connected to my Azure OpenAI model via a curl test from the terminal, using the same configuration.

- I successfully configured and connected with the Google Gemini API chat model without any problem.
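
For reference, the direct HTTP and curl tests call the standard Azure OpenAI chat completions endpoint. The sketch below shows how that URL is assembled from its parts. The resource name, deployment name, and API version are hypothetical placeholders, not the values from this setup, and `buildChatCompletionsUrl` is an illustrative helper, not something n8n provides.

```typescript
// Hypothetical placeholders: none of these values come from the real setup.
interface AzureChatTarget {
  resourceName: string;   // the resource name only, e.g. "my-resource" (not a full URL)
  deploymentName: string; // the deployment name chosen in Azure, e.g. "gpt-4o"
  apiVersion: string;     // an API version string, e.g. "2024-02-01" (assumed here)
}

// Assembles the chat completions URL in the shape used by the Azure OpenAI REST API.
function buildChatCompletionsUrl(target: AzureChatTarget): string {
  const url = new URL(
    `https://${target.resourceName}.openai.azure.com` +
      `/openai/deployments/${encodeURIComponent(target.deploymentName)}/chat/completions`,
  );
  url.searchParams.set("api-version", target.apiVersion);
  return url.toString();
}

const exampleUrl = buildChatCompletionsUrl({
  resourceName: "my-resource",
  deploymentName: "gpt-4o",
  apiVersion: "2024-02-01",
});
console.log(exampleUrl);
```

A request to this URL with the key in the `api-key` header is roughly what the working tests amount to, which suggests the endpoint and key themselves are fine.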

What is the error message (if any)?

{
  "errorMessage": "Invalid URL",
  "errorDetails": {
    "rawErrorMessage": [
      "Invalid URL"
    ],
    "httpCode": "ERR_INVALID_URL"
  },
  "n8nDetails": {
    "nodeName": "Azure OpenAI Chat Model",
    "nodeType": "@n8n/n8n-nodes-langchain.lmChatAzureOpenAi",
    "nodeVersion": 1,
    "itemIndex": 0,
    "runIndex": 0,
    "time": "3/3/2025, 3:28:19 PM",
    "n8nVersion": "1.80.5 (Self Hosted)",
    "binaryDataMode": "default",
    "stackTrace": [
      "NodeApiError: Invalid URL",
      " at ExecuteSingleContext.httpRequestWithAuthentication (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/node-execution-context/utils/request-helper-functions.js:946:15)",
      " at processTicksAndRejections (node:internal/process/task_queues:95:5)",
      " at ExecuteSingleContext.httpRequestWithAuthentication (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/node-execution-context/utils/request-helper-functions.js:1143:20)",
      " at RoutingNode.rawRoutingRequest (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/routing-node.js:319:29)",
      " at RoutingNode.makeRequest (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/routing-node.js:406:28)",
      " at async Promise.allSettled (index 0)",
      " at RoutingNode.runNode (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/routing-node.js:139:35)",
      " at ExecuteContext.versionedNodeType.execute (/usr/local/lib/node_modules/n8n/dist/node-types.js:55:30)",
      " at WorkflowExecute.runNode (/usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/workflow-execute.js:627:19)",
      " at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/execution-engine/workflow-execute.js:878:51"
    ]
  }
}
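
The `ERR_INVALID_URL` code is what Node.js attaches to the error thrown by its `URL` constructor when a string cannot be parsed as an absolute URL. As a minimal sketch of how that happens (the sample strings are hypothetical, and I do not know which of these cases, if any, the node actually hits), a value with no scheme or a bare path with no base both fail this way:

```typescript
// Returns the error code thrown by the URL constructor, or null if the input parses.
// The "code" property is a Node.js detail; other runtimes may not set it.
function urlErrorCode(input: string): string | null {
  try {
    new URL(input);
    return null;
  } catch (err) {
    return (err as { code?: string }).code ?? "UNKNOWN";
  }
}

// Hypothetical inputs for illustration only.
const samples: string[] = [
  // Absolute URL with a scheme: parses.
  "https://my-resource.openai.azure.com/openai/deployments/gpt-4o/chat/completions",
  // Host without a scheme: rejected.
  "my-resource.openai.azure.com",
  // Relative path with no base URL: rejected.
  "/openai/deployments/gpt-4o/chat/completions",
];

const results = samples.map((s) => urlErrorCode(s));
for (let i = 0; i < samples.length; i++) {
  console.log(`${samples[i]} -> ${results[i] ?? "ok"}`);
}
```

Since the stack trace goes through `RoutingNode.makeRequest`, it may be worth checking whether the request is being built from a relative path or an incomplete base URL rather than from the credential values themselves.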

Please share your workflow

{

“nodes”: [

{

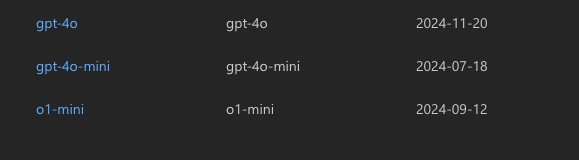

“parameters”: {

“model”: “gpt-4o”,

“options”: {}

},

“type”: “@n8n/n8n-nodes-langchain.lmChatAzureOpenAi”,

“typeVersion”: 1,

“position”: [

440,

20

],

“id”: “c3048bc5-19c8-428a-964c-1a9e0a0dc9dd”,

“name”: “Azure OpenAI Chat Model”,

“credentials”: {

“azureOpenAiApi”: {

“id”: “D7x1yIbh9UYuoOUN”,

“name”: “Azure Open AI account”

}

}

}

],

“connections”: {

“Azure OpenAI Chat Model”: {

“ai_languageModel”: [

]

}

},

“pinData”: {},

“meta”: {

“templateCredsSetupCompleted”: true,

“instanceId”: “b7d6fb9c5db9476d85e6f5c4fe0a0dfe8ed7c9319469d71a9c0b6be722f7293d”

}

}

Share the output returned by the last node

Information on your n8n setup

Latest n8n (1.80.5, self-hosted), installed this weekend on a Synology NAS. Everything else in the n8n environment is working; only this model fails.