Description

I’m running n8n in a Docker container on a closed network where internet access is only possible through a proxy (no authentication required). I have set the HTTP_PROXY and HTTPS_PROXY environment variables in the container to point to this proxy.

The HTTP Request node works perfectly fine with these proxy settings; however, when I attempt to use the Azure OpenAI Chat Model node, I encounter a “Request timed out” error.
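For reference on what setting these variables is generally expected to do, here is a minimal TypeScript sketch of the common HTTP_PROXY / HTTPS_PROXY / NO_PROXY convention. It is an illustration only, not n8n's code or the exact behavior of any specific library. `selectProxy`, the proxy host `proxy.example.internal`, the resource name `my-resource` and the domain `corp.local` are hypothetical, and the rule that the lowercase variable wins over the uppercase one is an assumption (clients differ on this).

```typescript
// Minimal sketch of the common proxy environment-variable convention.
// Assumption: illustrative only; NOT n8n's implementation or any library's API.

type Env = Record<string, string | undefined>;

// Read a variable in lowercase or uppercase form.
// Assumption: the lowercase form takes precedence when both are set.
function readVar(env: Env, name: string): string | undefined {
  const value = env[name.toLowerCase()] ?? env[name.toUpperCase()];
  return value && value.trim() !== "" ? value.trim() : undefined;
}

// True if the host matches an entry in a comma-separated NO_PROXY list
// (exact host, domain suffix such as ".corp.local", or "*").
function isBypassed(host: string, noProxy: string | undefined): boolean {
  if (!noProxy) return false;
  return noProxy
    .split(",")
    .map((entry) => entry.trim().toLowerCase())
    .filter((entry) => entry !== "")
    .some((entry) => {
      if (entry === "*") return true;
      const suffix = entry.startsWith(".") ? entry.slice(1) : entry;
      return host === suffix || host.endsWith("." + suffix);
    });
}

// Hypothetical helper: the proxy a client following this convention would use
// for targetUrl, or undefined for a direct connection.
function selectProxy(targetUrl: string, env: Env): string | undefined {
  const url = new URL(targetUrl);
  const host = url.hostname.toLowerCase();
  if (isBypassed(host, readVar(env, "NO_PROXY"))) return undefined;
  if (url.protocol === "https:") return readVar(env, "HTTPS_PROXY");
  if (url.protocol === "http:") return readVar(env, "HTTP_PROXY");
  return undefined;
}

// Usage with hypothetical values.
const env: Env = {
  HTTPS_PROXY: "http://proxy.example.internal:3128",
  NO_PROXY: "localhost,.corp.local",
};
console.log(selectProxy("https://my-resource.openai.azure.com/", env)); // http://proxy.example.internal:3128
console.log(selectProxy("https://n8n.corp.local/", env)); // undefined
```

Honoring these variables is up to each HTTP client, and not every Node.js HTTP client reads them automatically, so a proxy that one node picks up is not guaranteed to be used by another.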

Information on my n8n setup

- n8n version: 1.83.2

- Database (default: SQLite): SQLite

- n8n EXECUTIONS_PROCESS setting (default: own, main): Main

- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker

- Operating system: Oracle Linux 8

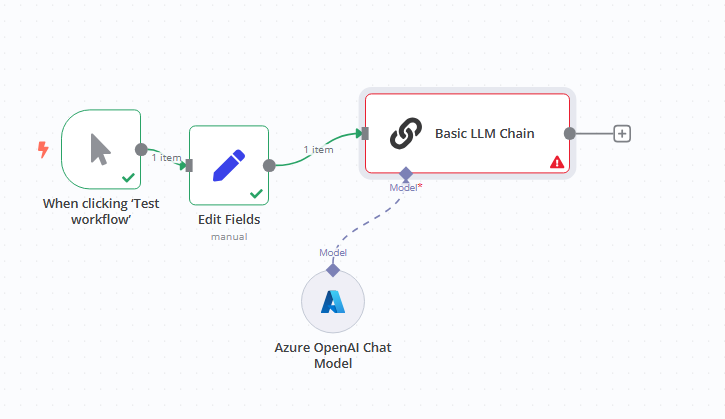

My Testing Workflow with Azure OpenAI

Error Logs

Error in handler N8nLlmTracing, handleLLMStart: TypeError: fetch failed

Request timed out.

Expected Behavior

- n8n connects to the external Azure OpenAI API through the proxy.

Actual Behavior

- n8n cannot connect to the external Azure OpenAI API using the Azure OpenAI Chat Model node; the request times out.

- n8n can connect to Azure OpenAI using the HTTP Request node without issues.

Thank You!

Any assistance would be greatly appreciated!

If there’s any additional information you need, let me know!