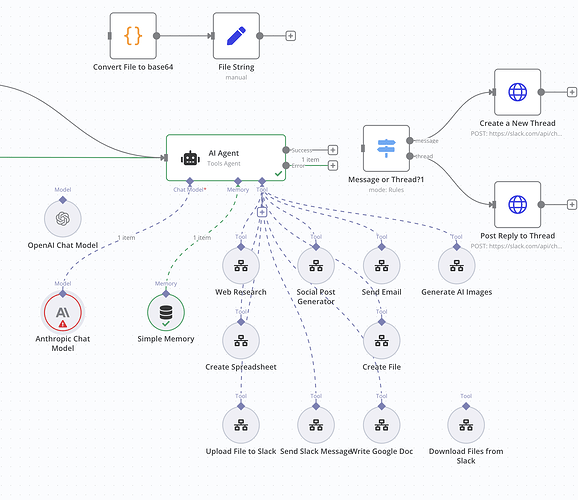

Rather frustrating bug: whenever I edit a prompt in the agent itself or a tool description and then test the workflow, the Anthropic node throws an error.

This is the error: Bad request - please check your parameters

messages.1: all messages must have non-empty content except for the optional final assistant message
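To see which entry the error is complaining about, here is a minimal Python sketch that checks a message list against the rule quoted in the error text. It assumes messages are dicts with `role` and `content`, where `content` is a string or a list of content blocks. Treating whitespace-only text as "empty" is a conservative assumption, not something the error message states.

```python
from typing import Any


def is_empty_content(content: Any) -> bool:
    """True for None, blank strings, and block lists with no non-blank content.

    Whitespace-only text is treated as empty (a conservative assumption).
    """
    if content is None:
        return True
    if isinstance(content, str):
        return content.strip() == ""
    if isinstance(content, list):
        # Empty list, or only text blocks whose text is blank.
        # A tool_use (or any non-text) block counts as real content.
        return all(
            b.get("type") == "text" and not str(b.get("text", "")).strip()
            for b in content
        )
    return False


def find_empty_messages(messages: list[dict[str, Any]]) -> list[int]:
    """Indices that break the rule from the error: every message needs
    non-empty content, except an optional final assistant message."""
    bad = []
    last = len(messages) - 1
    for i, msg in enumerate(messages):
        if not is_empty_content(msg.get("content")):
            continue
        if i == last and msg.get("role") == "assistant":
            continue  # the one exception the error message allows
        bad.append(i)
    return bad


history = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": ""},
    {"role": "user", "content": "Again"},
]
print(find_empty_messages(history))  # [1], matching "messages.1" in the error
```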

I’ve tried Claude 3.5 and 3.7, and both give the same error. It works with OpenAI chat models.

This is fixed by rebooting the workspace.

However, that is time-consuming, and I now have to do it for every single change.

Working on n8n cloud.

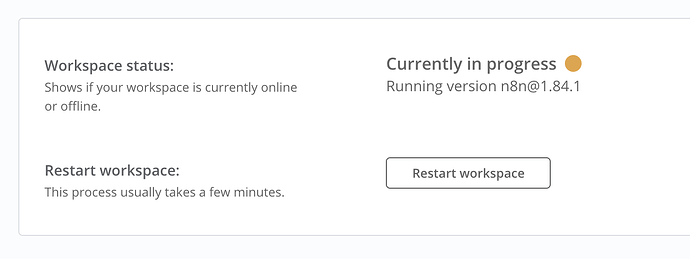

Update: I’ve updated to the latest version. The bug remains, and now the server seems to restart on its own. I went to the admin section to restart it, and it shows this:

Hi there, is it possible to get some support on this? It’s now failing in our production environment, and I can’t restart the server to fix it.

I think I’ve figured out the cause. The Simple Memory had an issue, so the AI Agent was sending an empty message to Anthropic which caused the error. When I disconnected the Simple Memory node, or changed the session ID, it worked.

… But I still can’t restart the server.

Another update: it broke again because there’s an empty message in the Simple Memory. As a workaround for now, I’ve instructed the AI not to store empty messages in Simple Memory.
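A code-level alternative to the prompt instruction is to drop empty turns from the history before it is sent. Below is a minimal Python sketch under the same assumption as before (dicts with `role` and `content`). Where exactly to hook this into an n8n workflow is not something this thread confirms, and the sketch does not handle two same-role turns becoming adjacent after a drop.

```python
from typing import Any


def has_content(content: Any) -> bool:
    """False for None, blank strings, and empty content-block lists."""
    if content is None:
        return False
    if isinstance(content, str):
        return content.strip() != ""
    if isinstance(content, list):
        return len(content) > 0
    return True


def sanitize_history(messages: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return the history without turns whose content is empty."""
    return [m for m in messages if has_content(m.get("content"))]


history = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "  "},
    {"role": "user", "content": "Again"},
]
print(len(sanitize_history(history)))  # 2
```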

Hey @James_Pardoe , we will look into the issue with your workspace and report back in the ticket you raised with us.

Thank you. The posts above contain more recent updates than the ticket.

Hey James, I ran into the exact same issue with Anthropic agents that use memory and tool calls. After some digging, I found that under the hood, when you edit a prompt or tool description inside the AI Agent node, the first execution often triggers a tool_use and the assistant’s textual reply at the same time.

Anthropic models (like Claude 3.5/3.7) send those back as a list of steps, but only the tool_use is forwarded through the tool handling loop. The accompanying textual message from the assistant is not preserved as output after tool resolution.
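To illustrate one way an empty assistant turn can end up in memory, here is a minimal Python sketch. It assumes the response content is a list of typed blocks (`text`, `tool_use`) as in Anthropic's Messages API; the block values, tool name, and both helper functions are hypothetical, and this is not confirmed to be n8n's actual code path. Flattening a reply to its text alone yields an empty string whenever the reply contained only a tool call.

```python
from typing import Any

# Hand-written example of an assistant reply that contains only a tool call.
# The id, tool name, and input are made up for illustration.
response_content: list[dict[str, Any]] = [
    {"type": "tool_use", "id": "toolu_example", "name": "lookup_order",
     "input": {"order_id": "42"}},
]


def text_only(blocks: list[dict[str, Any]]) -> str:
    """Flatten blocks to plain text; tool_use blocks are silently discarded."""
    return "".join(b.get("text", "") for b in blocks if b.get("type") == "text")


def to_history_turn(blocks: list[dict[str, Any]]) -> dict[str, Any]:
    """Store the assistant turn as its full block list instead of flattening it."""
    return {"role": "assistant", "content": list(blocks)}


flattened = {"role": "assistant", "content": text_only(response_content)}
preserved = to_history_turn(response_content)
print(repr(flattened["content"]))  # '' -> an empty turn in memory
print(preserved["content"][0]["type"])  # tool_use
```

Keeping the full block list avoids the empty turn, but Anthropic's tool-use flow then expects the following user turn to carry the matching `tool_result`, so a preserved `tool_use` cannot simply be left dangling.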

My workaround was to abandon tightly coupled tool usage and switch to a distributed control architecture with multi-turn orchestration…

OK, it’s more tiring, but it’s more reliable for production…

Hope that helps!


Totally resonate with your ‘who owns the retry’ dilemma. Our current pattern is a three-layer safety net:

• Agent Self-Awareness – each agent is prompted to detect when it lacks sufficient context and to ‘escalate’ instead of hallucinating.

• n8n Workflow Guardrails – a simple JS function node checks the agent’s JSON output against a schema and fails fast if it smells off.

• Supervisor Flow – any failure bubbles to a separate “Incident Router” workflow that decides whether to auto-retry, notify Slack, or hand the task to a human.
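The second layer (a fail-fast schema check) could look roughly like this minimal Python sketch. The field names `status` and `result`, the allowed status values, and `AgentOutputError` are all hypothetical placeholders for whatever contract your agents actually follow. Raising an exception is what lets the failure bubble up to a supervisor flow.

```python
import json
from typing import Any

# Hypothetical output contract; replace with your own schema.
REQUIRED_FIELDS: dict[str, type] = {"status": str, "result": dict}
ALLOWED_STATUS = {"ok", "escalate", "error"}


class AgentOutputError(ValueError):
    """Raised so the workflow fails fast and a supervisor can pick it up."""


def check_agent_output(raw: str) -> dict[str, Any]:
    """Parse the agent's raw output and reject anything off-contract."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise AgentOutputError(f"not valid JSON: {exc}") from exc
    if not isinstance(payload, dict):
        raise AgentOutputError("top-level value must be a JSON object")
    for field, expected in REQUIRED_FIELDS.items():
        if not isinstance(payload.get(field), expected):
            raise AgentOutputError(
                f"field {field!r} missing or not {expected.__name__}"
            )
    if payload["status"] not in ALLOWED_STATUS:
        raise AgentOutputError(f"unknown status {payload['status']!r}")
    return payload


print(check_agent_output('{"status": "escalate", "result": {}}')["status"])
```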

The nice part is we can tweak retry policies without touching agent prompts, but the trade-off is extra latency.

I’m experimenting with letting the agent embed a suggested retry_after_seconds key in its error payload so the supervisor can make smarter back-off decisions. Has anyone here tried something similar or gone full-blown exponential back-off inside the agent itself?
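On the supervisor side, combining an agent-supplied `retry_after_seconds` hint with exponential back-off could be sketched like this in Python. The parameter defaults (`base`, `cap`) are arbitrary assumptions, and the design choice here is that the hint acts as a floor: the supervisor never retries sooner than the agent asked, even if that exceeds the cap.

```python
import random
from typing import Optional


def next_delay(
    attempt: int,
    retry_after_seconds: Optional[float] = None,
    base: float = 1.0,
    cap: float = 60.0,
    jitter: bool = False,
) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    Exponential back-off (base * 2**attempt, capped at `cap`), optionally
    with full jitter, but never shorter than the agent's own hint.
    """
    delay = min(cap, base * (2 ** attempt))
    if jitter:
        delay = random.uniform(0, delay)  # "full jitter" variant
    if retry_after_seconds is not None:
        delay = max(delay, retry_after_seconds)  # hint acts as a floor
    return delay


print([next_delay(a) for a in range(4)])  # [1.0, 2.0, 4.0, 8.0]
```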

Excited to learn how others are keeping hybrid systems resilient.

Hi @ihortom, any idea when we can expect a fix for this? It basically renders the agent node with Anthropic models unusable if you are using tools.

Hey Renato,

Would you please expand on this? Thx.

EP

Same issue here. Confirmed: the memory stores empty CONTENT nodes inside kwargs.
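If the stored entries look like LangChain's serialized message format (an `lc`/`type`/`id` envelope with the message fields under `kwargs`), a minimal Python sketch for pruning the empty ones might look like this. That shape is an assumption based on the kwargs mentioned above, not a confirmed description of Simple Memory's internals. Entries that carry `tool_calls` are kept even with empty content, since those are not truly empty turns.

```python
from typing import Any


def is_blank(content: Any) -> bool:
    """True for None, blank strings, and empty lists."""
    if content is None:
        return True
    if isinstance(content, str):
        return content.strip() == ""
    if isinstance(content, list):
        return len(content) == 0
    return False


def drop_empty_serialized(entries: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Remove entries whose kwargs.content is blank and that have no tool calls."""
    kept = []
    for entry in entries:
        kwargs = entry.get("kwargs", {})
        if is_blank(kwargs.get("content")) and not kwargs.get("tool_calls"):
            continue  # an empty turn like the one that triggers the error
        kept.append(entry)
    return kept


stored = [
    {"lc": 1, "type": "constructor",
     "id": ["langchain_core", "messages", "HumanMessage"],
     "kwargs": {"content": "Hi"}},
    {"lc": 1, "type": "constructor",
     "id": ["langchain_core", "messages", "AIMessage"],
     "kwargs": {"content": ""}},
]
print(len(drop_empty_serialized(stored)))  # 1
```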