How to Implement Caching for a Chatbot in n8n to Reduce Vector Database Queries?

Hi n8n Community,

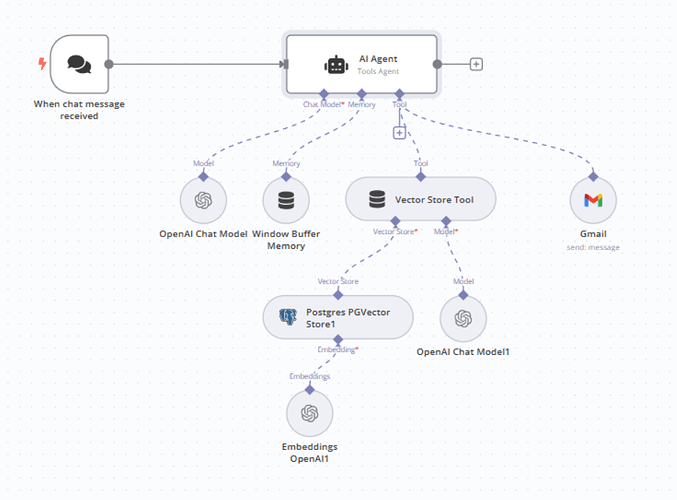

I’m currently working on a chatbot workflow in n8n that uses a vector database (PostgreSQL with pgvector) to retrieve answers based on user queries. Here’s a quick overview of my setup:

- Workflow Trigger: The workflow starts with a When Chat Message Received node.
- Query Handling: The user query is sent to the vector database, which performs a semantic search to retrieve the best-matching response.
- Challenge: Every time a user sends a query, the workflow queries the vector database, even if the same query has already been processed. This leads to unnecessary database queries and increased token usage, especially when the same or similar queries are asked repeatedly.
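
An exact-match cache only helps when a query repeats word for word. One way to also catch the "similar queries" mentioned above is a semantic cache. It stores the embedding of each answered query alongside its response and reuses that response when a new query's embedding is close enough. Here is a minimal sketch in plain Python, not n8n-specific code. `SemanticCache`, `cosine_similarity`, and the 0.95 threshold are hypothetical. The sketch also assumes query embeddings come from the same embedding model used for the pgvector search.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical in-memory semantic cache of (query embedding, response) pairs."""

    def __init__(self, threshold: float = 0.95) -> None:
        # Minimum similarity that counts as a hit; 0.95 is an arbitrary placeholder.
        self.threshold = threshold
        self.entries: list[tuple[list[float], str]] = []

    def lookup(self, embedding: list[float]) -> Optional[str]:
        """Return the response of the most similar cached query, if similar enough."""
        best_score, best_response = -1.0, None
        for cached_embedding, response in self.entries:
            score = cosine_similarity(embedding, cached_embedding)
            if score > best_score:
                best_score, best_response = score, response
        if best_response is not None and best_score >= self.threshold:
            return best_response
        return None  # miss: caller should run the real vector search

    def store(self, embedding: list[float], response: str) -> None:
        """Remember the response produced for this query embedding."""
        self.entries.append((embedding, response))


if __name__ == "__main__":
    cache = SemanticCache(threshold=0.95)
    cache.store([1.0, 0.0, 0.0], "answer A")
    print(cache.lookup([0.99, 0.05, 0.0]))  # near-duplicate query: "answer A"
    print(cache.lookup([0.0, 1.0, 0.0]))    # unrelated query: None
```

This still computes one embedding per incoming query. It therefore saves the vector search and any downstream LLM call, but not the embedding request. A linear scan is fine for a few hundred entries. At larger sizes, the cache entries could themselves live in a pgvector table and be found with a nearest-neighbour query. The threshold needs tuning: set it too low, and genuinely different questions get the same cached answer.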

What I Want to Achieve:

I’d like to implement caching in my chatbot workflow, so that:

- If a user sends a query that has already been processed, the workflow can return the cached response without querying the vector database again.

- If the query is new, the workflow queries the database, stores the response in the cache, and returns it to the user.
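
The behaviour described above is the classic cache-aside pattern. Look up a key, and return the stored value on a hit. On a miss, run the expensive operation, store its result, and return it. Here is a minimal sketch in plain Python, assuming the vector-database lookup can be wrapped as a function. `ResponseCache`, `cache_key`, `answer`, and `fake_search` are hypothetical names. The one-hour TTL is an arbitrary default so that stale answers eventually expire.

```python
import hashlib
import time
from typing import Callable, Optional


def cache_key(query: str) -> str:
    """Normalize the query (trim, lowercase, collapse whitespace), then hash it."""
    normalized = " ".join(query.strip().lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


class ResponseCache:
    """Hypothetical in-memory exact-match cache with a time-to-live per entry."""

    def __init__(self, ttl_seconds: float = 3600.0) -> None:
        self.ttl_seconds = ttl_seconds
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, response = entry
        if time.monotonic() - stored_at > self.ttl_seconds:
            del self._store[key]  # expired: drop it and treat as a miss
            return None
        return response

    def set(self, key: str, response: str) -> None:
        self._store[key] = (time.monotonic(), response)


def answer(query: str, cache: ResponseCache, search: Callable[[str], str]) -> str:
    """Cache-aside: return a cached response, or run `search` and cache its result."""
    key = cache_key(query)
    cached = cache.get(key)
    if cached is not None:
        return cached         # hit: the vector database is not queried
    response = search(query)  # miss: run the expensive vector search
    cache.set(key, response)
    return response


if __name__ == "__main__":
    calls: list[str] = []

    def fake_search(query: str) -> str:
        # Stand-in for the pgvector semantic search; records each call.
        calls.append(query)
        return f"answer for {query!r}"

    cache = ResponseCache(ttl_seconds=600)
    answer("What are your opening hours?", cache, fake_search)
    answer("  what are your   opening hours? ", cache, fake_search)
    print(len(calls))  # 1: the second, normalized query is a cache hit
```

Normalizing the query before hashing lets trivially different inputs (extra spaces, capitalization) share one cache entry. In n8n, a Python dict inside a Code node would not survive between executions. A real deployment would therefore likely keep the cache in persistent storage, for example a table in the existing PostgreSQL database or a Redis instance. The lookup, miss, and store flow would stay the same.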

Below is my current workflow:

Information on my n8n setup

- n8n version: Community Version, 1.71.3

- Database (default: PostgreSQL):

- n8n EXECUTIONS_PROCESS setting (default: main):

- Running n8n via: Docker

- Operating system: Windows (64-bit)