Hey everyone

Sorry for bringing this post back from the dead, but I wanted to contribute in case someone else comes across this in the future.

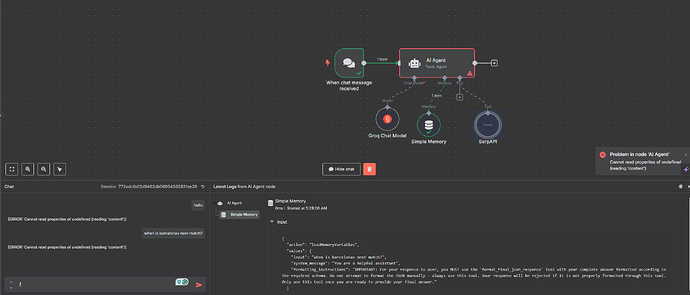

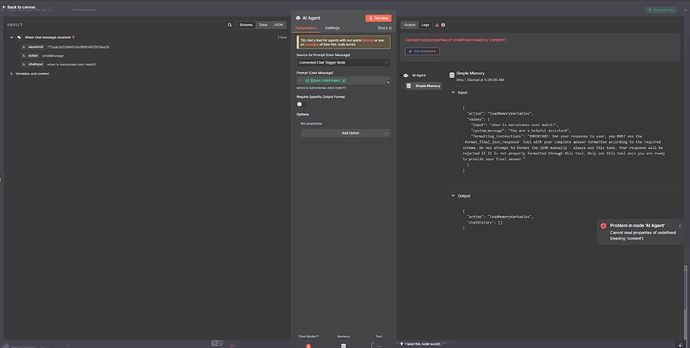

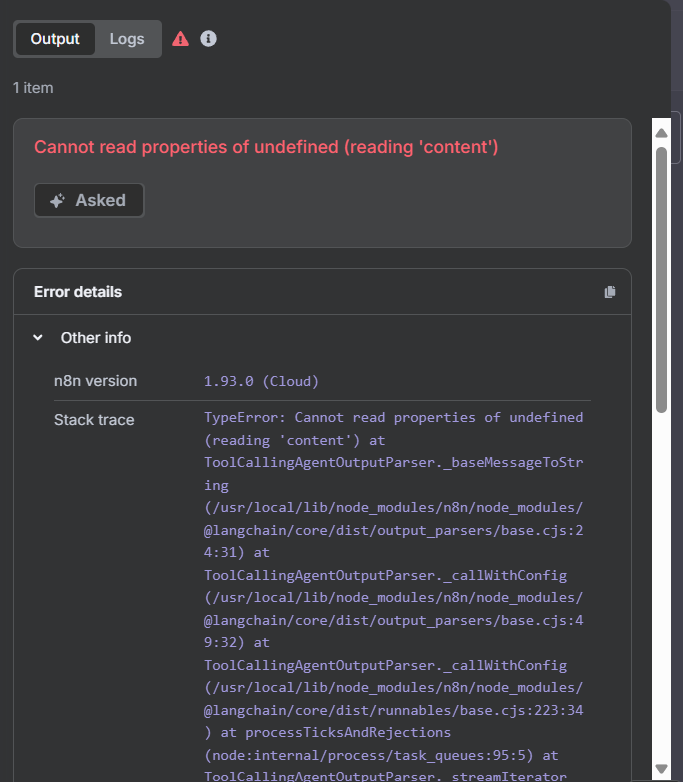

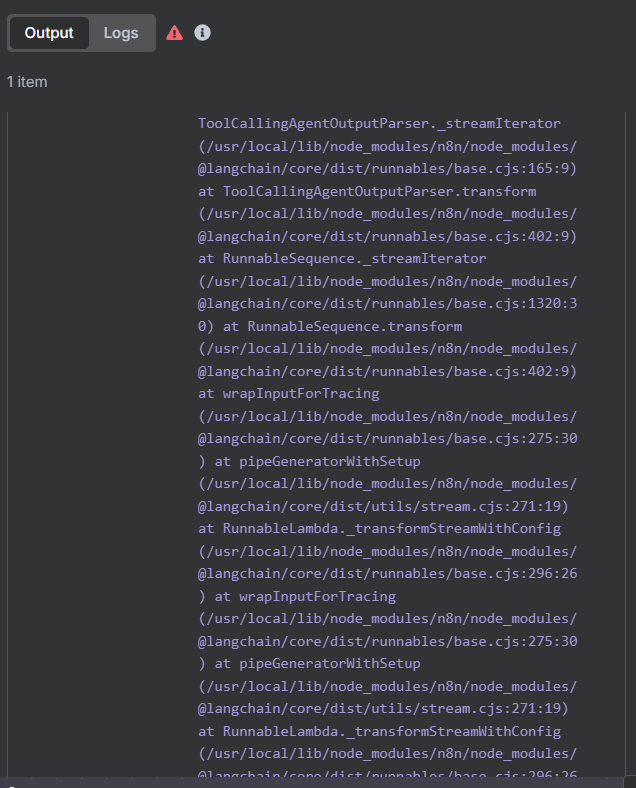

I encountered the same error when I was trying to return a non-streaming response. I naively thought the agent expected the same chat completion JSON response. Suffice it to say, your LLM server must support streaming before you can use it for the agent.

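The expected response from the LLM server is a plain-text stream of `data:` lines (server-sent events, which OpenAI serves as `text/event-stream`), not a single JSON body! It looks like the following. This is what Langchain is looking for and ultimately parses the response from.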

Note: OpenAI’s Responses API returns a completely different streaming format from the Chat Completions stream shown here!

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"role":"assistant","content":"","refusal":null},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":"Hello"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":"!"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" How"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" can"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" I"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" assist"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" you"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":" today"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{"content":"?"},"logprobs":null,"finish_reason":null}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[{"index":0,"delta":{},"logprobs":null,"finish_reason":"stop"}],"usage":null}

data: {"id":"chatcmpl-BYWXY...","object":"chat.completion.chunk","created":174...,"model":"gpt-4o-mini","service_tier":"default","system_fingerprint":"fp_03...","choices":[],"usage":{"prompt_tokens":8,"completion_tokens":9,"total_tokens":17,"prompt_tokens_details":{"cached_tokens":0,"audio_tokens":0},"completion_tokens_details":{"reasoning_tokens":0,"audio_tokens":0,"accepted_prediction_tokens":0,"rejected_prediction_tokens":0}}}

data: [DONE]
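To make the format above a bit more concrete, here is a rough sketch of how a client could piece the text back together from those `data:` lines. This is not Langchain's actual parser, just an illustration; `collect_stream_text` and the trimmed-down `sample` lines are names and data I made up for the example.

```python
import json


def collect_stream_text(lines):
    """Join the delta.content pieces from OpenAI-style 'data:' lines."""
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip the blank separator lines between events
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel, not JSON
        chunk = json.loads(payload)
        # The final usage chunk has an empty "choices" list, so this loop
        # simply does nothing for it.
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


# Hypothetical, shortened version of the stream shown above.
sample = [
    'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":""},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{"content":"Hello"},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{"content":"!"},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}',
    "",
    'data: {"choices":[],"usage":{"total_tokens":17}}',
    "",
    "data: [DONE]",
]

print(collect_stream_text(sample))  # prints: Hello!
```

A single JSON chat completion body has no `data:` prefix at all, so a parser along these lines would find nothing to read, which matches the failure I saw.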

Hard to say if this is what OP is experiencing here, but one way to check is to:

- Send an HTTP request to the Groq endpoint with the model you selected

- In the body, be sure to include

{ "stream": true }

- Check the response. If it doesn’t look like the above example then that might well be the issue.
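If you would rather script the check above than eyeball it, something like this might do. It is only a sketch: `build_stream_request` and `looks_like_chat_stream` are helper names I invented, the model id and API key are placeholders, and I am assuming Groq's OpenAI-compatible chat completions URL. The actual network call is left commented out so nothing is sent unless you fill those in.

```python
import json
import urllib.request


def build_stream_request(url, api_key, model):
    """Build a POST request whose JSON body includes "stream": true."""
    body = json.dumps({
        "model": model,
        "stream": True,
        "messages": [{"role": "user", "content": "Hello"}],
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def looks_like_chat_stream(body_text):
    """Return True if the body is made of 'data:' lines carrying chunk JSON."""
    data_lines = [
        line.strip()
        for line in body_text.splitlines()
        if line.strip().startswith("data:")
    ]
    if not data_lines:
        return False  # e.g. one plain JSON document, no streaming
    for line in data_lines:
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            continue
        try:
            chunk = json.loads(payload)
        except json.JSONDecodeError:
            return False
        if not isinstance(chunk, dict) or "choices" not in chunk:
            return False
    return True


# Example usage (placeholders, uncomment and fill in to run):
# req = build_stream_request(
#     "https://api.groq.com/openai/v1/chat/completions",  # assumed endpoint
#     "YOUR_API_KEY",
#     "your-model-id",
# )
# with urllib.request.urlopen(req) as resp:
#     print(looks_like_chat_stream(resp.read().decode("utf-8")))
```

If this reports `False` for your server's response, that lines up with the non-streaming case I described.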