  "name": "Test",
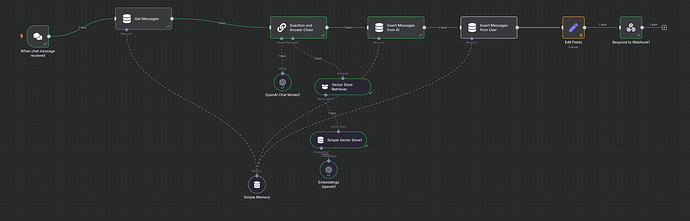

  "nodes": [
    {
      "parameters": {},
      "type": "n8n-nodes-base.manualTrigger",
      "typeVersion": 1,
      "position": [
        -900,
        -240
      ],
      "id": "b3d5f8ec-161c-4b05-b1a8-d553635e6bf6",
      "name": "When clicking ‘Test workflow’"
    },
    {
      "parameters": {
        "options": {
          "reset": false
        }
      },
      "type": "n8n-nodes-base.splitInBatches",
      "typeVersion": 3,
      "position": [
        -240,
        -240
      ],
      "id": "8812bc64-48b2-477c-9324-1ece64113d58",
      "name": "Loop Over Items"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.embeddingsOpenAi",
      "typeVersion": 1.2,
      "position": [
        -20,
        -20
      ],
      "id": "8db28cfa-237c-48cc-b88e-22d92256c576",
      "name": "Embeddings OpenAI",
      "credentials": {
        "openAiApi": {
          "id": "JOpHgYldTT1RHOaE",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "dataType": "binary",
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.documentDefaultDataLoader",
      "typeVersion": 1,
      "position": [
        100,
        -17.5
      ],
      "id": "a3becf09-5601-4c7e-8453-f42b131bd909",
      "name": "Default Data Loader"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.textSplitterRecursiveCharacterTextSplitter",
      "typeVersion": 1,
      "position": [
        188,
        180
      ],
      "id": "e800e65d-f166-4492-909d-96f9a7dc3925",
      "name": "Recursive Character Text Splitter"
    },
    {
      "parameters": {
        "mode": "insert"
      },
      "type": "@n8n/n8n-nodes-langchain.vectorStoreInMemory",
      "typeVersion": 1.1,
      "position": [
        -4,
        -240
      ],
      "id": "0cfc57e2-d976-49e6-9273-78eb2aab1b9a",
      "name": "Simple Vector Store"
    },
    {
      "parameters": {
        "options": {}
      },
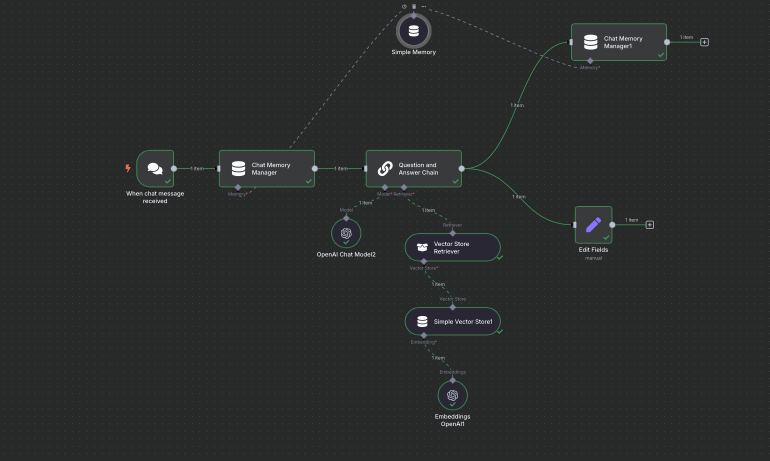

      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [
        -900,
        1037.5
      ],
      "id": "fdf4ca07-fbab-4987-9ba5-8a4deb0bbbb1",
      "name": "When chat message received",
      "webhookId": "37a9cbf9-7c5a-44cd-bb68-405472400b75"
    },
    {
      "parameters": {},
      "type": "@n8n/n8n-nodes-langchain.vectorStoreInMemory",
      "typeVersion": 1.1,
      "position": [
        -184,
        1457.5
      ],
      "id": "cf89051b-251b-4b59-97e0-f0740ff9e996",
      "name": "Simple Vector Store1"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.embeddingsOpenAi",
      "typeVersion": 1.2,
      "position": [
        -96,
        1652.5
      ],
      "id": "a325aa03-8183-43ba-abb1-21be663d9450",
      "name": "Embeddings OpenAI1",
      "credentials": {
        "openAiApi": {
          "id": "JOpHgYldTT1RHOaE",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "resource": "folder",
        "folderId": "01C7YEKCBKQVRTFOEMANFICAR27K3CWD5A"
      },
      "type": "n8n-nodes-base.microsoftOneDrive",
      "typeVersion": 1,
      "position": [
        -680,
        -240
      ],
      "id": "97a99239-913b-4a99-b6c1-a0bf0b274e8a",
      "name": "Microsoft OneDrive4",
      "credentials": {
        "microsoftOneDriveOAuth2Api": {
          "id": "YKrv9ZdRbB2Ep1Us",
          "name": "Microsoft Drive account"
        }
      }
    },
    {
      "parameters": {
        "operation": "download",
        "fileId": "={{ $json[\"id\"] }}"
      },
      "type": "n8n-nodes-base.microsoftOneDrive",
      "typeVersion": 1,
      "position": [
        -460,
        -240
      ],
      "id": "d85c6b0f-8e03-4040-87ab-e36e771c2b83",
      "name": "Microsoft OneDrive5",
      "credentials": {
        "microsoftOneDriveOAuth2Api": {
          "id": "YKrv9ZdRbB2Ep1Us",
          "name": "Microsoft Drive account"
        }
      }
    },
    {
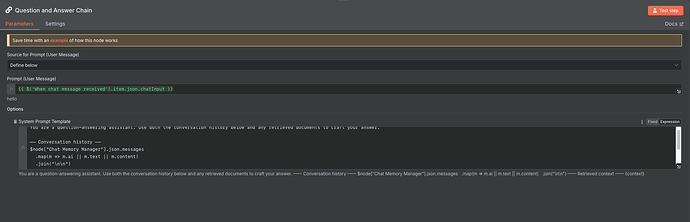

      "parameters": {
        "promptType": "define",
        "text": "={{ $('When chat message received').item.json.chatInput }}",
        "options": {
          "systemPromptTemplate": "=You are a question-answering assistant. Use both the conversation history below and any retrieved documents to craft your answer.\n\n── Conversation history ──\n{{ $('Chat Memory Manager').item.json.messages\n .map(m => m.ai)\n .join(\"\\n\\n\") }}\n\n── Retrieved context ──\n{context}\n\nUser’s question:\n{{ $('When chat message received').item.json.chatInput }}\n"
        }
      },
      "type": "@n8n/n8n-nodes-langchain.chainRetrievalQa",
      "typeVersion": 1.5,
      "position": [
        -288,
        1037.5
      ],
      "id": "ba6cc6f4-5997-4e52-bd06-9e838f61aa21",
      "name": "Question and Answer Chain",
      "notesInFlow": false
    },
    {
      "parameters": {},
      "type": "@n8n/n8n-nodes-langchain.retrieverVectorStore",
      "typeVersion": 1,
      "position": [
        -184,
        1260
      ],
      "id": "9d14085a-5423-48f2-adfb-b862beae3948",
      "name": "Vector Store Retriever"
    },
    {
      "parameters": {
        "model": {
          "__rl": true,
          "mode": "list",
          "value": "gpt-4o-mini"
        },
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatOpenAi",
      "typeVersion": 1.2,
      "position": [
        -380,
        1220
      ],
      "id": "b57dec36-934d-44f7-a22c-64b344d509bd",
      "name": "OpenAI Chat Model2",
      "credentials": {
        "openAiApi": {
          "id": "JOpHgYldTT1RHOaE",
          "name": "OpenAi account"
        }
      }
    },
    {
      "parameters": {
        "respondWith": "text",
        "responseBody": "={{$json[\"body\"]}}\n",
        "options": {
          "responseCode": 200,
          "responseHeaders": {
            "entries": [
              {
                "name": "Content-Type",
                "value": "text/plain; charset=utf-8"
              }
            ]
          }
        }
      },
      "type": "n8n-nodes-base.respondToWebhook",
      "typeVersion": 1.1,
      "position": [
        -900,
        480
      ],
      "id": "a0604cb8-349e-405f-a000-cc6114b0f385",
      "name": "Respond to Webhook1"
    },
    {
      "parameters": {
        "assignments": {
          "assignments": [
            {
              "id": "2a08d48f-876b-45d2-b505-9a7ea4d04408",
              "name": "output",
              "value": "={{ $json.response }}",
              "type": "string"
            }
          ]
        },
        "options": {}
      },
      "type": "n8n-nodes-base.set",
      "typeVersion": 3.4,
      "position": [
        270,
        1187.5
      ],
      "id": "e7b3cda8-94e1-43ce-98e3-0b26f4ac86c2",
      "name": "Edit Fields"
    },
    {
      "parameters": {
        "options": {}
      },
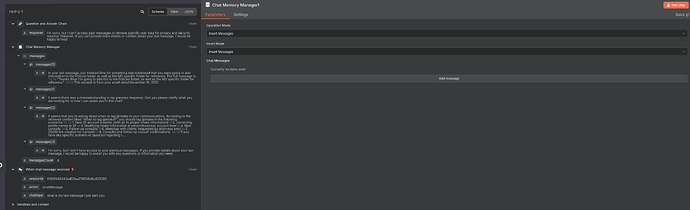

      "type": "@n8n/n8n-nodes-langchain.memoryManager",
      "typeVersion": 1.1,
      "position": [
        -680,
        1037.5
      ],
      "id": "a3dc3e69-1640-4a49-9024-773676a73c1b",
      "name": "Chat Memory Manager"
    },
    {
      "parameters": {
        "sessionIdType": "customKey",
        "sessionKey": "345"
      },
      "type": "@n8n/n8n-nodes-langchain.memoryBufferWindow",
      "typeVersion": 1.3,
      "position": [
        -200,
        680
      ],
      "id": "80215ac1-6950-466d-9a28-ab6a7a203c2d",
      "name": "Simple Memory"
    },
    {
      "parameters": {
        "mode": "insert"
      },
      "type": "@n8n/n8n-nodes-langchain.memoryManager",
      "typeVersion": 1.1,
      "position": [
        260,
        700
      ],
      "id": "35dd13e3-f9df-4204-8054-81886943ec53",
      "name": "Chat Memory Manager1"
    },
    {
      "parameters": {
        "sessionIdType": "customKey",
        "sessionKey": "42069"
      },
      "type": "@n8n/n8n-nodes-langchain.memoryBufferWindow",
      "typeVersion": 1.3,
      "position": [
        -2280,
        1440
      ],
      "id": "b86bf32c-1019-4ed0-b5d8-1d95894ada22",
      "name": "Simple Memory1"
    },
    {
      "parameters": {},
      "type": "n8n-nodes-base.merge",
      "typeVersion": 3.1,
      "position": [
        -900,
        220
      ],
      "id": "fbfe37cf-f72f-4953-be96-91e7939a6f8f",
      "name": "Merge"
    }
  ],
  "pinData": {},
  "connections": {
    "When clicking ‘Test workflow’": {
      "main": [
        [
          {
            "node": "Microsoft OneDrive4",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Loop Over Items": {
      "main": [
        [],
        [
          {
            "node": "Simple Vector Store",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Embeddings OpenAI": {
      "ai_embedding": [
        [
          {
            "node": "Simple Vector Store",
            "type": "ai_embedding",
            "index": 0
          }
        ]
      ]
    },
    "Default Data Loader": {
      "ai_document": [
        [
          {
            "node": "Simple Vector Store",
            "type": "ai_document",
            "index": 0
          }
        ]
      ]
    },
    "Recursive Character Text Splitter": {
      "ai_textSplitter": [
        [
          {
            "node": "Default Data Loader",
            "type": "ai_textSplitter",
            "index": 0
          }
        ]
      ]
    },
    "Simple Vector Store": {
      "main": [
        [
          {
            "node": "Loop Over Items",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "When chat message received": {
      "main": [
        [
          {
            "node": "Chat Memory Manager",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Simple Vector Store1": {
      "ai_vectorStore": [
        [
          {
            "node": "Vector Store Retriever",
            "type": "ai_vectorStore",
            "index": 0
          }
        ]
      ]
    },
    "Embeddings OpenAI1": {
      "ai_embedding": [
        [
          {
            "node": "Simple Vector Store1",
            "type": "ai_embedding",
            "index": 0
          }
        ]
      ]
    },
    "Microsoft OneDrive4": {
      "main": [
        [
          {
            "node": "Microsoft OneDrive5",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Microsoft OneDrive5": {
      "main": [
        [
          {
            "node": "Loop Over Items",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Vector Store Retriever": {
      "ai_retriever": [
        [
          {
            "node": "Question and Answer Chain",
            "type": "ai_retriever",
            "index": 0
          }
        ]
      ]
    },
    "OpenAI Chat Model2": {
      "ai_languageModel": [
        [
          {
            "node": "Question and Answer Chain",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    },
    "Question and Answer Chain": {
      "main": [
        [
          {
            "node": "Edit Fields",
            "type": "main",
            "index": 0
          },
          {
            "node": "Chat Memory Manager1",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Edit Fields": {
      "main": []
    },
    "Simple Memory": {
      "ai_memory": [
        [
          {
            "node": "Chat Memory Manager",
            "type": "ai_memory",
            "index": 0
          },
          {
            "node": "Chat Memory Manager1",
            "type": "ai_memory",
            "index": 0
          }
        ]
      ]
    },
    "Chat Memory Manager": {
      "main": [
        [
          {
            "node": "Question and Answer Chain",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Simple Memory1": {
      "ai_memory": []
    },
    "Respond to Webhook1": {
      "main": []
    },
    "Chat Memory Manager1": {
      "main": []
    }
  },
  "active": false,
  "settings": {
    "executionOrder": "v1"
  },
  "versionId": "4fd292a9-16cb-4f49-ac0e-3a53aa6c582e",
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "274690a662ddcf878f1a5082b9b826fa420de465650222dc51b254869704139c"
  },
  "id": "1iWDWVmfpoPqFldI",
  "tags":