All, I looked at a lot of the ‘combining outputs’ topics here in the forum in my quest to combine markdowns, but none really address what I am trying to achieve, so allow me to open this new topic:

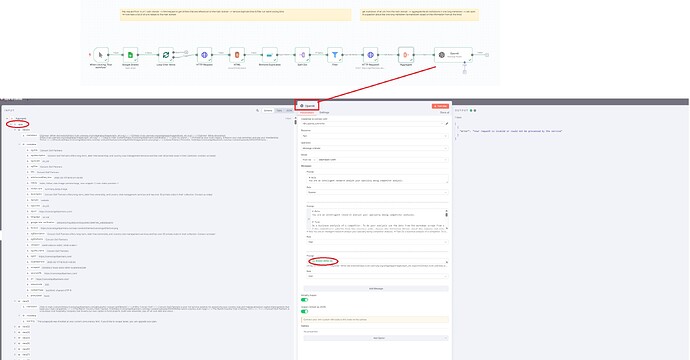

In short, I want to ask AI questions about a company, based solely on the company’s website. My flow is:

- HTTP request to the main domain (1x) → OK

- HTML request to get all the links referenced on that main domain: 77 links → OK

- remove duplicates and filter out weird links: 69 links remaining → OK

- get the markdown of all 69 pages → OK

- aggregate the 69 markdowns so I can later ask the AI questions about the entire website; the result is one object ‘{{ $json.data }}’ → OK

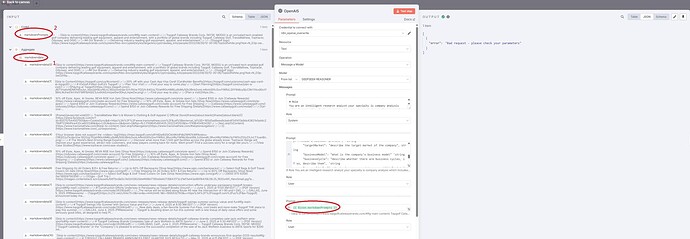

- this is where it breaks: in the last step I ask OpenAI questions, referencing the one object ‘{{ $json.data }}’ from the previous node, but I receive the error “error: your request is invalid or could not be processed by service” (see screenshot). When I reference ‘{{ $json.data[0].markdown }}’ instead, it works, but then the AI only uses the markdown of 1 of the 69 URLs. I think combining the markdowns with the ‘aggregate’ function might be wrong? I fiddled around with other functions, but none did the trick.
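For readers following along, the link-cleanup step in the flow above (remove duplicates and filter out weird links) might look roughly like this in an n8n Code node. This is a minimal sketch under assumptions: the function name `cleanLinks`, the same-host rule, and the list of skipped file extensions are illustrative choices, not what was actually used in this workflow.

```javascript
// Hypothetical helper: normalise, filter and de-duplicate scraped links.
function cleanLinks(rawLinks, baseUrl) {
  const base = new URL(baseUrl);
  const seen = new Set();
  const result = [];
  for (const raw of rawLinks) {
    let url;
    try {
      // Resolve relative links such as "/about" against the main domain.
      url = new URL(raw, base);
    } catch {
      continue; // unparsable link: skip it
    }
    // Keep only http(s) pages on the same host (drops mailto:, tel:, external sites).
    if (!["http:", "https:"].includes(url.protocol)) continue;
    if (url.hostname !== base.hostname) continue;
    // Skip obvious non-page assets (assumed list; adjust to the site).
    if (/\.(pdf|jpe?g|png|gif|svg|zip)$/i.test(url.pathname)) continue;
    url.hash = ""; // "/about#team" and "/about" are the same page
    const key = url.href.replace(/\/$/, ""); // treat "/about/" and "/about" as one
    if (seen.has(key)) continue;
    seen.add(key);
    result.push(key);
  }
  return result;
}
```

For example, `cleanLinks(["/about", "/about#team", "https://example.com/about/", "mailto:info@example.com", "/logo.png"], "https://example.com")` keeps only `"https://example.com/about"`. In a Code node set to "Run Once for All Items" you would then return one item per URL, e.g. `return cleanLinks(urls, baseUrl).map((url) => ({ json: { url } }));`, where `urls` and `baseUrl` come from however your HTML node exposes them (an assumption here).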

I would really appreciate a guiding hand!

Thanks!

Felix

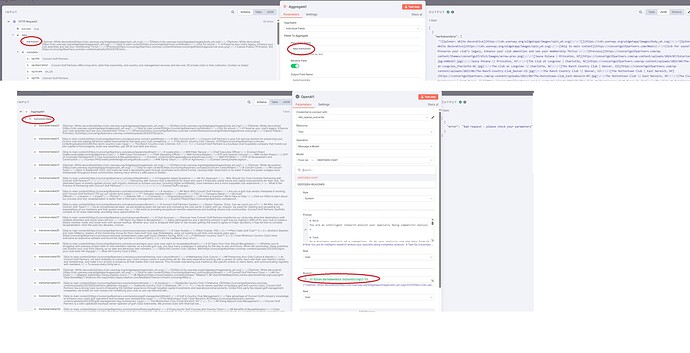

In the Aggregate node, aggregate the field ‘markdown’.

Then you will get an array of markdown values.

In the OpenAI node, use {{ $json.toJsonString() }}
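To make that suggestion concrete, here is a rough model of the data shape, written as plain JavaScript rather than n8n internals: aggregating the field `markdown` turns many items into one item holding an array, and `toJsonString()` serialises that object into a single string, roughly as `JSON.stringify` does. The helper names `aggregateField` and `toPromptString` are hypothetical.

```javascript
// Sketch of the Aggregate node's effect: N items in, ONE item out,
// whose `field` holds an array of the original values.
function aggregateField(items, field) {
  return [{ json: { [field]: items.map((item) => item.json[field]) } }];
}

// Roughly what {{ $json.toJsonString() }} produces: the object as JSON text.
function toPromptString(json) {
  return JSON.stringify(json);
}

const items = [{ json: { markdown: "# Home" } }, { json: { markdown: "# About" } }];
const [aggregated] = aggregateField(items, "markdown");
const prompt = toPromptString(aggregated.json);
// prompt is '{"markdown":["# Home","# About"]}'
```

Note that the result is JSON text: quotes and newlines inside each page appear escaped (for example `\n`), which a model can still read but which adds noise and length.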


Hey Darrell, thanks for your reply. I followed your steps:

- Aggregate node → aggregate the markdown field

- OpenAI node → reference the aggregated markdown with toJsonString()

I still get the error, though; see screenshot.

In this scenario, my guess is that the problem comes from DeepSeek,

since the prompt itself looks fine now. Do you have another model to test with?


Hm, strange. The ChatGPT/OpenAI prompt works normally if I link it to a single markdown instead of the array of multiple markdowns, so I don’t think the issue is with ChatGPT/OpenAI. It seems like something in the automation logic doesn’t flow correctly just yet.

Maybe you can share the workflow code with the Aggregate output pinned, and we can run some tests.

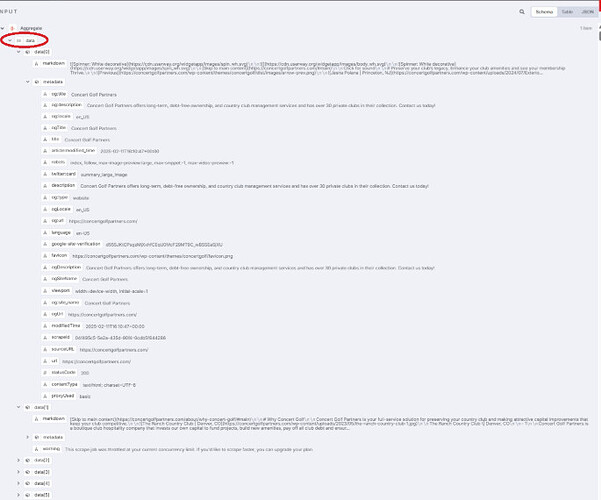

Or share the input JSON.

Then we can see how to change the settings.


Hey Dan, sorry for the late reply, I was under the weather.

I am happy to share the workflow here in a comment (as you did above), but I don’t know how. I have the paid version of n8n and can see the Share button, but I don’t know how to embed it here in the chat.

The documentation (Sharing | n8n Docs) and other posts asking about it didn’t help (How do I share a workflow? The documentation doesn't relate to product - #2 by romain-n8n). I am sorry, I am still such a noob. The workflow ID is #qn57AohkchAcLgtm

Does that help?

The issue still persists, in case you have time to lend me your expertise.

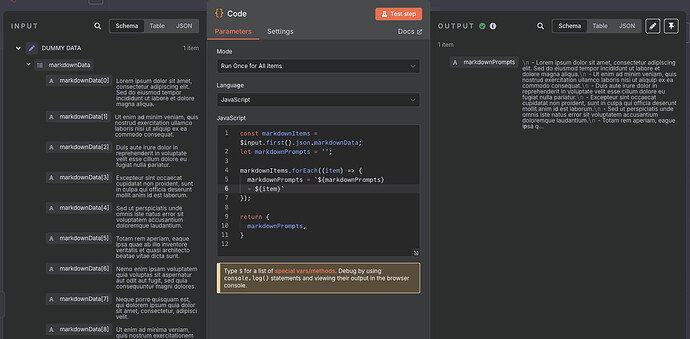

The problem is that you’re passing an array into the agent’s prompt. You can use a Code node to build a single string value and place it in between the Aggregate node and the AI node.
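A minimal sketch of such a Code node, assuming it runs in "Run Once for All Items" mode and that the Aggregate node outputs one item whose `json.markdown` is an array of page markdowns. The function name `buildMarkdownPrompt`, the output field `markdownprompt`, and the `---` page separator are illustrative choices, not necessarily the exact snippet shared in this thread.

```javascript
// Hypothetical Code node logic, written as a plain function so it can run
// outside n8n. Inside the Code node you would end with:
//   return buildMarkdownPrompt($input.all());
function buildMarkdownPrompt(items) {
  // Assumed input: one aggregated item whose json.markdown is an array.
  const pages = items[0]?.json?.markdown ?? [];
  // Join the pages into ONE string, with a visible separator between pages,
  // and skip empty entries so they don't add blank sections.
  const markdownprompt = pages
    .filter((page) => typeof page === "string" && page.trim() !== "")
    .join("\n\n---\n\n");
  // n8n Code nodes return an array of items, each with a json property.
  return [{ json: { markdownprompt } }];
}
```

The AI node can then reference the plain string with {{ $json.markdownprompt }}. Joining with a separator, rather than serialising to JSON, keeps the text readable for the model and avoids escaped quotes and newlines.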


It works, thanks a bunch Wouter!!!

For anyone reading this, the short version: the AI agent’s prompt can’t take an array, so turn the array into a string with a snippet of code, as Wouter suggested. The AI agent can work with the string.


I can’t tell the difference between using Aggregate and toJsonString().

It’s much the same if you want to put an array into one string.

But I agree: any method that gets it working for you is a good method.


No, it’s not the same. You need to understand how n8n handles data. A node can either return a number of items, in which case the next node is executed once for each item, OR, with the Aggregate node, instead of executing the next node 10 times (for example), it creates a single output item containing an array. The problem here was that the AI node expects a STRING, not an ARRAY.
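The difference can be illustrated with a toy model. This is a simplified sketch, not n8n’s actual execution engine: it only shows that a downstream node runs once per incoming item, so after aggregation it runs once and receives an array. The function name `runPerItem` is hypothetical.

```javascript
// Toy model, not n8n's engine: a downstream node runs once per incoming
// item and only sees that item's json.
function runPerItem(items, node) {
  return items.map((item) => node(item.json));
}

// Two separate items: the next node runs twice, each time with a string.
const pages = [{ json: { markdown: "# Home" } }, { json: { markdown: "# About" } }];
const perItem = runPerItem(pages, (json) => typeof json.markdown);
// perItem is ["string", "string"]

// After an Aggregate step there is ONE item whose field holds an array,
// so the next node runs once and receives an array, not a string.
const aggregated = [{ json: { markdown: pages.map((p) => p.json.markdown) } }];
const once = runPerItem(aggregated, (json) => Array.isArray(json.markdown));
// once is [true]
```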

Reading this will explain it in more detail


Hey Wouter, sorry to ‘scope creep’ you, but it’s actually a related question and you seem knowledgeable, so please allow me to pick your brain on the following.

I followed your advice:

- I take the aggregated markdown data, which is an array ‘markdown’

- I use the code to successfully turn it into a string ‘markdownprompt’

- then I hand the ‘markdownprompt’ string to OpenAI to do something with it → the issue: for most websites it works, but sometimes it doesn’t and the output becomes ‘error - bad request’. Do you know what could be causing this? One thought is that the markdownprompt content might be too long?

If I am crossing a boundary, apologies, and please ignore me. You already helped a lot!

No problem at all, we’re here to help where we can. Can you share your updated workflow in a code block, please? It’s difficult to guess based on what you have set up. How big is the markdownprompt value for the ones that fail? If it’s intermittent, it is most likely a context window limit. Which model are you using? What are you trying to achieve with the AI call, etc.? There might be a better way to deal with this issue, logically and efficiently.
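One way to check the context-window theory before calling the model is to estimate the prompt size and trim it if needed. This sketch assumes the common rough rule of about 4 characters per token for English text; real token counts depend on the model’s tokenizer, and the context size you pass in must come from your model’s documentation. The names `estimateTokens` and `fitToContext` are hypothetical.

```javascript
// Rough heuristic (assumption): ~4 characters per token for English text.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// Hypothetical guard: keep prompt + reserved answer tokens under the
// model's context window by cutting off the end of the text.
function fitToContext(text, contextTokens, reservedForAnswer = 1000) {
  const budgetTokens = contextTokens - reservedForAnswer;
  if (budgetTokens <= 0) throw new Error("reservedForAnswer exceeds context size");
  const maxChars = budgetTokens * CHARS_PER_TOKEN;
  return {
    estimatedTokens: estimateTokens(text),
    truncated: text.length > maxChars,
    text: text.slice(0, maxChars),
  };
}
```

Logging `estimatedTokens` for the failing websites would show whether they are the unusually large ones. Truncation simply throws away the rest of the site, which is one reason the retrieval approach mentioned next scales better.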

Reading your original question again: if all you really want to do is scrape a company’s information from its website and then pull information out of it, a RAG (retrieval-augmented generation) solution will be a better, more efficient way to “question” the data.
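The core idea of RAG is to split the scraped text into chunks and send the model only the chunks relevant to each question, instead of the whole site. The sketch below shows that shape with a deliberately naive stand-in: it scores chunks by shared words, whereas a real RAG setup would embed the chunks and query a vector store. The function names, chunk size, and overlap are all illustrative assumptions.

```javascript
// Split text into overlapping chunks of roughly `size` characters.
function chunkText(text, size = 1000, overlap = 200) {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const chunks = [];
  for (let start = 0; start < text.length; start += size - overlap) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // last chunk reached the end
  }
  return chunks;
}

// Toy retrieval: rank chunks by how many question words they contain.
// A real RAG setup would compare embedding vectors in a vector store instead.
function topChunks(chunks, question, k = 3) {
  const words = new Set(question.toLowerCase().match(/\w+/g) ?? []);
  return chunks
    .map((chunk) => {
      const chunkWords = new Set(chunk.toLowerCase().match(/\w+/g) ?? []);
      let score = 0;
      for (const w of words) if (chunkWords.has(w)) score++;
      return { chunk, score };
    })
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((entry) => entry.chunk);
}
```

Only the top chunks go into the prompt, so the prompt size stays roughly constant no matter how large the website is.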

Watch this video, specifically the demo near the beginning where he explains what this solves, and see if it would work for your use case.


Hey, thanks for the speedy reply. Your pointer to RAG was great!!! I think this is exactly what I need; I basically do a lot of data analysis, e.g. competitor analysis and such. I will put my head in the books and watch more videos/read more about RAG setups and vector databases.

Unfortunately, n8n won’t allow me to share the code because it’s maxed out on the character count (477,555/320,000). I could add you to the project, but I think I should not waste your time on this any further if I rebuild all of this with a RAG model (hopefully).


Just so that I have a little win under my belt: the flow I built is almost done, but I am having one last looping issue. Although my issue is slightly different from before, given that you seem to understand how n8n flows work with arrays (looping) and strings (not looping), let me run this by you (last one in this thread, I swear). It’s part of the previous flow, so I can’t share it because it’s too big. The short of it is:

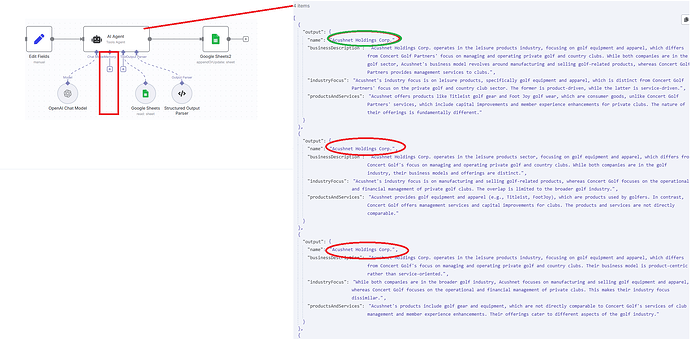

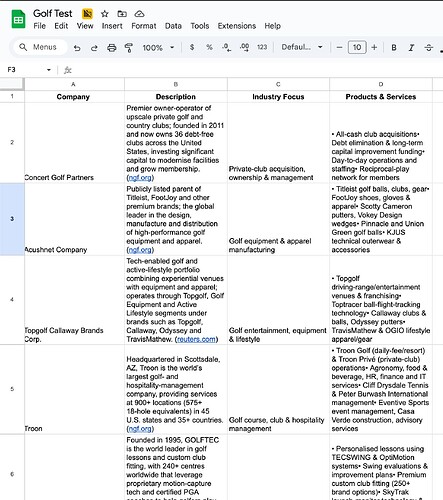

1 )I have completed my data-enrichment process after having scraped websites of companies - its a google sheet

- each each row being a company eg concert golf, ashucut, topgolf)

- each columns is a characteristic of that company (eg business descirption, industry focus etc)

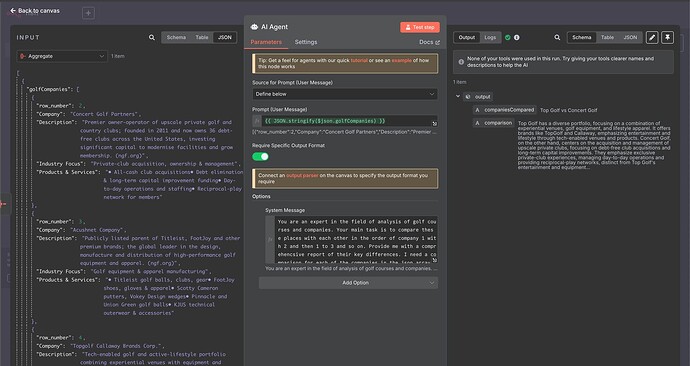

- I am giving my aiagent this google sheet as a tool and ask the agent to do a competitor analysis. (the screenshot)

- The agent should start by comparing company 1 to 2 (concert golf - acushnet)

- then compare 1 to 3 (concert golf - topfolf)

- then 1 to 4 and so on i.e. loop the task.

The agent executes the task correctly on the first run, i.e. comparing company 1 to 2 (Concert Golf to Acushnet), but for the following rows it duplicates the result from the first row (see screenshot). So the loop doesn’t work. I was wondering if this is related to me not giving the AI agent memory? But I am having trouble hooking memory up to a previous node, given that the AI agent doesn’t use a previous node in its task execution (just the tool, i.e. the Google Sheet). Or could the problem be how I wrote the system and user prompts for the AI agent?
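One way to make the “compare 1 to 2, then 1 to 3, …” loop explicit is to build the pairs before the AI step, so that each pair becomes its own n8n item and the AI node runs once per pair with different input. A minimal sketch, assuming the sheet rows have already been read into plain objects (first row = the company to compare against); `buildComparisonPairs` and the column names are hypothetical.

```javascript
// Hypothetical sketch: turn sheet rows into explicit comparison pairs
// (row 1 vs row 2, row 1 vs row 3, ...), one n8n-style item per pair.
function buildComparisonPairs(rows) {
  if (rows.length < 2) return []; // nothing to compare against
  const [base, ...others] = rows;
  return others.map((other, i) => ({
    json: { pair: i + 1, companyA: base, companyB: other },
  }));
}

const rows = [
  { company: "Concert Golf" },
  { company: "Acushnet" },
  { company: "Topgolf" },
];
const pairs = buildComparisonPairs(rows);
// pairs[0] compares Concert Golf with Acushnet, pairs[1] with Topgolf
```

With one item per pair, nothing depends on the agent deciding which row to read next, so it cannot keep reusing the first comparison.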

Wouter - thanks again for your time and invaluable input!!!

To get past the size issue, you can take a screenshot for context instead, and then copy only the nodes relevant to the issue by highlighting just those. We don’t always need the full workflow to troubleshoot a specific area.

Just some tips: I would advise against building workflows that are too big. Break them up into smaller working pieces, i.e. one workflow for enrichment, another for processing, etc. And if you need to get data from another workflow, use the “Execute A Sub Workflow” node.


Think of RAG more as a knowledge base that gives an LLM specific knowledge about data you own. It might not solve your use case here.

Now to try to answer your challenge. Adding memory to an agent is only useful if you’re adding it to a chatbot and need it to remember context, like Q1: what’s the weather like at X? Q2: and at Y? Memory allows the agent to “remember” that the conversation is about the weather. I would much rather give it the Think tool to turn the model into a thinking agent.

I would design the above very differently, though, and make the data input more explicit by reading the Google Sheet before the AI agent and passing that data in as input, instead of relying on the agent to figure out that it needs to read all the rows (unless your prompt was very detailed). I also believe Claude does a better job of comparing data.
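Making the input explicit could mean rendering the two rows being compared as labelled text inside the prompt, so the model sees exactly the data it should use. This is a minimal sketch; the function names, the prompt wording, and the column names in the usage example are assumptions, not Wouter’s actual workflow.

```javascript
// Hypothetical: render one sheet row as "column: value" lines, skipping empty cells.
function formatCompany(row) {
  return Object.entries(row)
    .filter(([, value]) => value !== undefined && value !== null && String(value).trim() !== "")
    .map(([column, value]) => `${column}: ${value}`)
    .join("\n");
}

// Build an explicit comparison prompt from two rows read before the agent.
function buildComparisonPrompt(companyA, companyB) {
  return [
    "Compare the two companies below. Use only the data provided.",
    "",
    "## Company A",
    formatCompany(companyA),
    "",
    "## Company B",
    formatCompany(companyB),
  ].join("\n");
}

const prompt = buildComparisonPrompt(
  { name: "Concert Golf", industry: "Golf clubs" },
  { name: "Acushnet", industry: "Golf equipment", notes: "" }
);
```

Because the prompt carries the data itself, the agent no longer needs the Google Sheet tool for this step, and each run compares exactly the two rows it was given.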

Let me build a workflow to test my theory before I share it here.


- I will take your advice to heart about slicing and dicing the process into sub-workflows going forward. This was my first attempt at an analysis, and even with very few companies and attributes, the agent was struggling.

- RAG: after my research, I agree; for my purposes Excel might be sufficient, but I should get into something like Supabase to plan for larger data sets.

- re my loop issue, please don’t spend your time on it; you’ve already helped more than enough! I will try to build a mini workflow that takes the result of the earlier data-enrichment process from the worksheet and maps the parameters to the AI. I will also give Claude a shot. Whether my flow works or not, I will share it here.

Thanks again for your patience with me and the help!!!

Here’s what I built. I need to refine the prompt and data input, as it’s only doing one comparison. I’m running into a meeting now. I’ll fix it this afternoon after my meeting and update this post, but this should give you an idea. I need to format the input better; this was sloppy and rushed.
