Hi there!

We’re excited to share our custom n8n community node that seamlessly integrates OpenAI with Langfuse, adding powerful observability features. This node brings structured tracing and metadata tracking to your OpenAI-powered workflows with ease.


This project is proudly developed and maintained by Wistron DXLab.

- GitHub: rorubyy/n8n-nodes-openai-langfuse (an n8n community node that brings Langfuse observability to your OpenAI chat workflows)

- npm: n8n-nodes-openai-langfuse

Why Use This Integration?

This custom node is perfect for data-driven teams and observability enthusiasts looking to:

- Track and trace every request/response between n8n and OpenAI-compatible models.

- Inject contextual metadata (`sessionId`, `userId`, etc.) into your workflows.

- Visualize workflows and trace logs directly in your Langfuse dashboard.

Installation

For n8n v0.187+, you can install this node directly from the Community Nodes feature.

How to Install

- Navigate to Settings → Community Nodes in your n8n UI.

- Click Install.

- Enter `n8n-nodes-openai-langfuse` in the npm package field.

- Agree to the community node usage risks.

- Click Install to complete the process!

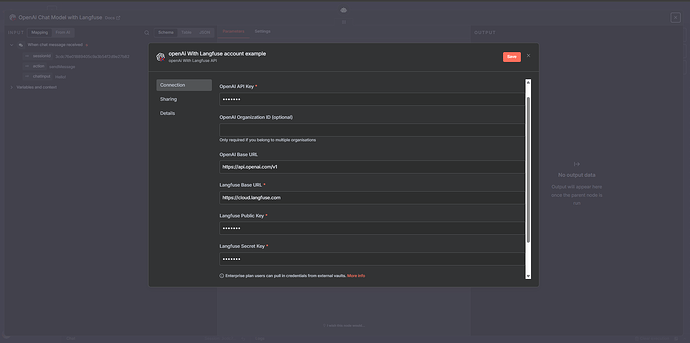

Credential Setup

To use this node, you’ll need credentials to:

- Authenticate with your OpenAI-compatible LLM endpoint.

- Enable Langfuse tracing by logging requests and responses to Langfuse.

OpenAI Credential Details


| Field Name | Description | Example |
|---|---|---|
| OpenAI API Key | Your secret API key to access the OpenAI endpoint. | sk-xx |
| OpenAI Organization ID | (Optional) Your OpenAI organization ID, if required. | org-xyz789 |
| OpenAI Base URL | Base URL for your OpenAI-compatible endpoint. | Default: https://api.openai.com/v1 |
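For reference, here is a minimal sketch of how these three fields typically map onto a Chat Completions request. It assumes the standard OpenAI REST conventions (an `Authorization: Bearer` header, an optional `OpenAI-Organization` header, and a `/chat/completions` path under the base URL). The helper `build_openai_request` is hypothetical and is not taken from the node's source; it only builds the URL and headers and does not send anything.

```python
from typing import Dict, Optional, Tuple

DEFAULT_BASE_URL = "https://api.openai.com/v1"


def build_openai_request(
    api_key: str,
    organization_id: Optional[str] = None,
    base_url: str = DEFAULT_BASE_URL,
) -> Tuple[str, Dict[str, str]]:
    """Return (url, headers) for a Chat Completions call.

    Hypothetical helper: illustrates how the credential fields are used,
    not how the community node implements them.
    """
    if not api_key:
        raise ValueError("OpenAI API Key is required")
    # Strip a trailing slash so custom base URLs like ".../v1/" still work.
    url = base_url.rstrip("/") + "/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # The organization header is only added when an org ID is configured.
    if organization_id:
        headers["OpenAI-Organization"] = organization_id
    return url, headers
```

Pointing `base_url` at a self-hosted, OpenAI-compatible server only changes the URL; the header logic stays the same.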

Langfuse Credential Details


| Field Name | Description | Example |
|---|---|---|
| Langfuse Base URL | The base URL of your Langfuse instance (cloud or self-hosted). | https://cloud.langfuse.com or a custom URL |
| Langfuse Public Key * | Used for authentication in Langfuse. | pk-xx |
| Langfuse Secret Key * | Used for authentication in Langfuse. | sk-xx |
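Langfuse's public API authenticates with HTTP Basic auth, using the public key as the username and the secret key as the password. The sketch below shows how the three Langfuse fields combine into a base URL and an `Authorization` header. The helper `langfuse_auth` is hypothetical; the node itself most likely relies on the Langfuse SDK rather than building headers by hand.

```python
import base64
from typing import Dict, Tuple


def langfuse_auth(base_url: str, public_key: str, secret_key: str) -> Tuple[str, Dict[str, str]]:
    """Return (normalized base URL, headers) for Langfuse's public API.

    Hypothetical helper for illustration: Basic auth with the public key
    as username and the secret key as password.
    """
    if not public_key or not secret_key:
        raise ValueError("Both Langfuse Public Key and Secret Key are required")
    # Basic auth token = base64("<public_key>:<secret_key>").
    token = base64.b64encode(f"{public_key}:{secret_key}".encode("utf-8")).decode("ascii")
    return base_url.rstrip("/"), {"Authorization": f"Basic {token}"}
```

Because the secret key ends up inside this header, it should be stored only in the n8n credential, never in workflow fields or custom metadata.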

How to Find Your Langfuse Keys:

Log in to your Langfuse dashboard and navigate to Settings → Projects → [Your Project] to retrieve your `publicKey` and `secretKey`.

Once your credentials are correctly filled in, they will look like this:

After saving the credentials, you’re ready to configure your workflows and see your traces in Langfuse!

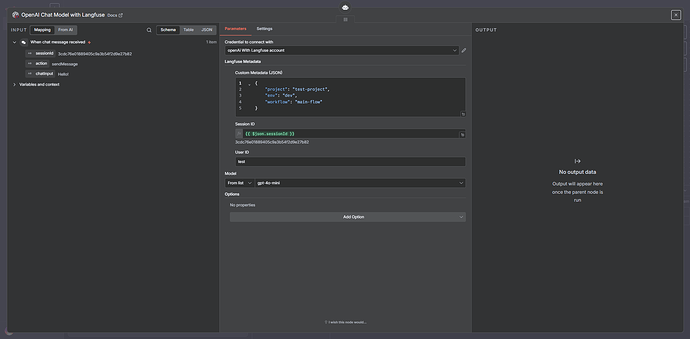

Using the Node

This node automatically injects Langfuse-compatible metadata into your OpenAI requests. You can trace every workflow run with context such as sessionId, userId, and any custom metadata.
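Conceptually, tracing an LLM call means wrapping it and recording its input, output, timing, and context fields as one trace. The sketch below imitates that pattern in plain Python, with an in-memory list standing in for Langfuse. `TraceRecord`, `traced_call`, and the record's fields are hypothetical and do not reproduce the node's internals or Langfuse's actual trace schema.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class TraceRecord:
    # Hypothetical record shape; Langfuse's real trace model is richer.
    trace_id: str
    input: Any
    session_id: Optional[str] = None
    user_id: Optional[str] = None
    metadata: Dict[str, Any] = field(default_factory=dict)
    output: Any = None
    error: Optional[str] = None
    latency_ms: float = 0.0


def traced_call(
    llm_call: Callable[[Any], Any],
    prompt: Any,
    sink: List[TraceRecord],
    session_id: Optional[str] = None,
    user_id: Optional[str] = None,
    metadata: Optional[Dict[str, Any]] = None,
) -> Any:
    """Run llm_call(prompt) and append a TraceRecord to sink, even on failure."""
    record = TraceRecord(
        trace_id=str(uuid.uuid4()),
        input=prompt,
        session_id=session_id,
        user_id=user_id,
        metadata=dict(metadata or {}),
    )
    start = time.perf_counter()
    try:
        record.output = llm_call(prompt)
        return record.output
    except Exception as exc:
        # Keep the failure in the trace, then let the caller handle it.
        record.error = repr(exc)
        raise
    finally:
        # Runs on success and failure, so every call produces exactly one record.
        record.latency_ms = (time.perf_counter() - start) * 1000.0
        sink.append(record)
```

Recording in `finally` is the key design choice: failed calls are often the ones you most want to see in a trace dashboard.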

Supported Metadata Fields

| Field | Type | Description |
|---|---|---|
| sessionId | string | A unique identifier for your logical session. |
| userId | string | The ID of the end user making the request. |
| metadata | object | A custom JSON object for additional workflow context. |
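The table fixes a type for each field. A minimal validation sketch, using the table's key names and assuming all three fields are optional (the post does not say which ones are required), might look like this; `build_trace_context` is a hypothetical helper.

```python
from typing import Any, Dict, Optional


def build_trace_context(
    session_id: Optional[str] = None,
    user_id: Optional[str] = None,
    metadata: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Type-check sessionId, userId, and metadata; drop the ones left empty.

    Hypothetical helper. Assumes every field is optional.
    """
    context: Dict[str, Any] = {}
    for name, value in (("sessionId", session_id), ("userId", user_id)):
        if value is None or value == "":
            continue  # omit empty values instead of sending blank strings
        if not isinstance(value, str):
            raise TypeError(f"{name} must be a string, got {type(value).__name__}")
        context[name] = value
    if metadata:
        if not isinstance(metadata, dict):
            raise TypeError("metadata must be a JSON object (dict)")
        context["metadata"] = metadata
    return context
```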

Example of Custom Metadata:

```json
{
  "project": "test-project",
  "env": "dev",
  "workflow": "main-flow"
}
```
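If the custom metadata arrives as JSON text (for example, typed into a text field in the node UI), it has to be parsed into an object before it can be attached to a trace. Here is a small sketch of that step, assuming the value is a string and that only JSON objects are acceptable; `parse_custom_metadata` is hypothetical, not the node's actual parser.

```python
import json
from typing import Any, Dict


def parse_custom_metadata(raw: str) -> Dict[str, Any]:
    """Parse a Custom Metadata string into a dict (hypothetical helper).

    An empty or whitespace-only value yields an empty dict.
    """
    if not raw or not raw.strip():
        return {}
    try:
        value = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Custom Metadata is not valid JSON: {exc.msg}") from exc
    # Arrays, strings, and numbers are valid JSON but not valid metadata objects.
    if not isinstance(value, dict):
        raise ValueError('Custom Metadata must be a JSON object, e.g. {"env": "dev"}')
    return value
```

Rejecting non-object JSON early gives a clear error in n8n instead of a confusing failure later in the Langfuse call.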

Example Workflow Setup

Here’s a typical workflow configuration using the Langfuse Chat Node:

1. Node Configuration

In the node UI, configure your inputs as follows:

| Input Field | Example Value |
|---|---|
| Session ID | {{$json.sessionId}} |
| User ID | user-123 |
| Custom Metadata | { "project": "example", "env": "prod" } |
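The Session ID value `{{$json.sessionId}}` is an n8n expression: for each incoming item, it reads the `sessionId` field from that item's JSON, so each run can carry its own session, while a plain value like `user-123` stays fixed. The toy resolver below only illustrates that idea for simple `{{$json.field}}` references (including dotted paths). It is a hypothetical, heavily simplified stand-in, not n8n's expression engine, which evaluates full JavaScript.

```python
import re
from typing import Any, Dict

# Matches only the simple form {{ $json.a.b }}; real n8n expressions are far richer.
_EXPR = re.compile(r"^\{\{\s*\$json\.([A-Za-z_][\w.]*)\s*\}\}$")


def resolve_field(template: str, item_json: Dict[str, Any]) -> Any:
    """Resolve a '{{$json.path}}' template against one item's JSON.

    Values without an expression are returned unchanged.
    Hypothetical sketch; a missing path returns None here.
    """
    match = _EXPR.match(template.strip())
    if match is None:
        return template  # static value such as "user-123"
    value: Any = item_json
    for key in match.group(1).split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value
```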

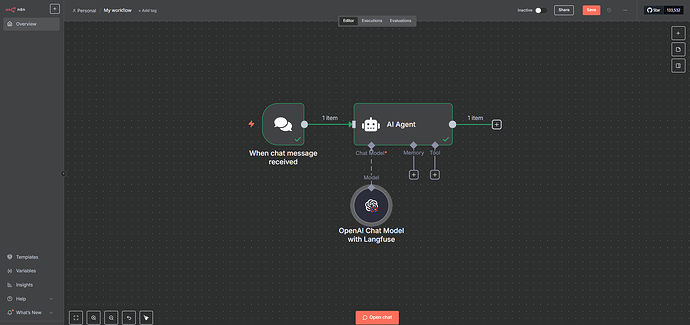

2. Workflow Design

An example workflow setup using this node:

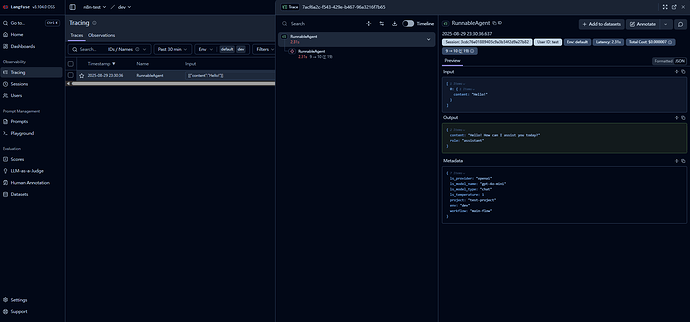

3. Langfuse Trace Output

Once your workflow runs, you can view the resulting traces in your Langfuse dashboard.

In short, this node helps you trace and analyze LLM usage inside n8n with Langfuse, including support for session metadata.

Hope it helps!