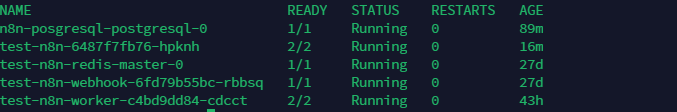

Yes Jon, I put a Fluent Bit sidecar inside the n8n pod to send logs to Elasticsearch, which is why you see 2/2. I added it only after I saw the unexpected behavior, because I want to create an alarm whenever the same cron runs more than once at the same time. I think the main problem is that something causes the n8n operator (the n8n deployment) to write one more record for the same workflow to Redis, which is why a worker picks up the schedule trigger job and executes it. You can ignore the Fluent Bit sidecar.
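For the alarm, the check itself could look roughly like the sketch below. It assumes the logs shipped to Elasticsearch have already been fetched into a list of records with a workflow ID and a start timestamp. The field names `workflow_id` and `started_at` and the function `find_duplicate_runs` are made up for illustration and are not n8n's actual log format.

```python
from collections import defaultdict
from datetime import datetime


def find_duplicate_runs(records):
    """Return {(workflow_id, minute): count} for every slot with more than one run.

    Each record is a dict with hypothetical keys "workflow_id" and
    "started_at" (an ISO 8601 timestamp string).
    """
    counts = defaultdict(int)
    for rec in records:
        started = datetime.fromisoformat(rec["started_at"])
        # Truncate to the minute so runs of the same cron tick share one slot.
        slot = started.replace(second=0, microsecond=0)
        counts[(rec["workflow_id"], slot.isoformat())] += 1
    return {key: n for key, n in counts.items() if n > 1}


# Hypothetical sample data: workflow "12" fired twice in the 10:00 slot.
records = [
    {"workflow_id": "12", "started_at": "2023-07-20T10:00:00"},
    {"workflow_id": "12", "started_at": "2023-07-20T10:00:01"},
    {"workflow_id": "12", "started_at": "2023-07-20T10:05:00"},
    {"workflow_id": "7", "started_at": "2023-07-20T10:00:00"},
]
duplicates = find_duplicate_runs(records)
print(duplicates)
```

Any non-empty result would be the condition to alert on. Grouping by minute is a simplification that only suits schedules that fire at most once per minute.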

I installed n8n from this Helm chart: 8gears/n8n-helm-chart (a Kubernetes Helm chart for n8n, a workflow automation tool).

My values.yaml template is:

    n8n:
      encryption_key: # n8n creates a random encryption key automatically on the first launch and saves it in the ~/.n8n folder. That key is used to encrypt the credentials before they get saved to the database.
      defaults:
    config:
      executions:
        pruneData: "true" # prune executions by default
        pruneDataMaxAge: 3760 # Per default we store 1 year of history
      database:
        type: postgresdb # Type of database to use - Other possible types ['sqlite', 'mariadb', 'mysqldb', 'postgresdb'] - default: sqlite
        postgresdb:
          database: n8n # PostgresDB Database - default: n8n
          host: n8n-posgresql-postgresql-hl # PostgresDB Host - default: localhost
          password: abc123xx # PostgresDB Password - default: ''
          port: 5432 # PostgresDB Port - default: 5432
          user: postgres # PostgresDB User - default: root
          schema: public # PostgresDB Schema - default: public
      executions:
        process: own # In what process workflows should be executed - possible values [main, own] - default: own
        timeout: -1 # Max run time (seconds) before stopping the workflow execution - default: -1
        maxTimeout: 3600 # Max execution time (seconds) that can be set for a workflow individually - default: 3600
        saveDataOnError: all # What workflow execution data to save on error - possible values [all , none] - default: all
        saveDataOnSuccess: all # What workflow execution data to save on success - possible values [all , none] - default: all
        saveDataManualExecutions: false # Save data of executions when started manually via editor - default: false
        pruneData: false # Delete data of past executions on a rolling basis - default: false
        pruneDataMaxAge: 336 # How old (hours) the execution data has to be to get deleted - default: 336
        pruneDataTimeout: 3600 # Timeout (seconds) after execution data has been pruned - default: 3600
      generic:
        timezone: Europe/Istanbul # The timezone to use - default: America/New_York
    extraEnv:
    extraEnvSecrets: {}
    persistence:
      enabled: true
      type: emptyDir # what type volume, possible options are [existing, emptyDir, dynamic] dynamic for Dynamic Volume Provisioning, existing for using an existing Claim
      storageClass: ""
      accessModes:
        - ReadWriteOnce
      size: 1Gi
    replicaCount: 1
    deploymentStrategy:
      type: "Recreate"
    image:
      repository: n8nio/n8n
      pullPolicy: IfNotPresent
      tag: "1.1.1"
    imagePullSecrets: []
    nameOverride: ""
    fullnameOverride: ""
    serviceAccount:
      create: true
      annotations: {}
      name: ""
    podAnnotations: {}
    podLabels: {}
    podSecurityContext: {}
    securityContext:
      {}
    lifecycle:
      {}
    command: []
    service:
      type: ClusterIP
      port: 80
      annotations: {}
    workerResources:
      {}
    webhookResources:
      {}
    resources:
      limits:
        cpu: '1'
        memory: 2Gi
      requests:
        cpu: 10m
        memory: 128Mi
    autoscaling:
      enabled: true
      minReplicas: 1
      maxReplicas: 3
      targetCPUUtilizationPercentage: 80
    nodeSelector: {}
    tolerations: []
    affinity: {}

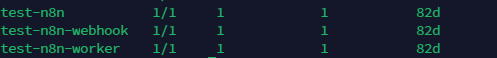

    scaling:
      enabled: true
      worker:
        count: 2
        concurrency: 2
      # With .Values.scaling.webhook.enabled=true you disable Webhooks from the main process but you enable the processing on a different Webhook instance.
      webhook:
        enabled: true
        count: 1
      redis:
        host: test-n8n-redis-master
        password: abc123xx
    ## Bitnami Redis configuration
    redis:
      enabled: true
      architecture: standalone
      auth:
        enabled: true
        password: abc123xx
      master:
        persistence:
          enabled: true
          existingClaim: ""
          size: 2Gi