Hi!

I would like to contribute to the Slack node!

These are almost my first steps into node development, so I would appreciate a little help here.


Let me first explain the use case I am trying to solve before getting to the question where I am stuck.

Slack API

Slack applies rate limits to its API. Conveniently, when a request is rate limited, the response includes the number of seconds to wait before retrying in the Retry-After HTTP header. Example:

```
HTTP/1.1 429 Too Many Requests
Retry-After: 30
```
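Since this header is the key piece of information, a minimal sketch of turning it into a wait time may help. It assumes the value is either a number of seconds (as in the example above) or an HTTP-date, the two forms the HTTP specification allows; parseRetryAfterMs is a hypothetical helper name, not part of n8n.

```typescript
// Hypothetical helper: convert a Retry-After header value into a delay in milliseconds.
// Per HTTP semantics the value is either delay-seconds ("30") or an HTTP-date.
function parseRetryAfterMs(
  value: string | undefined,
  now: number = Date.now(),
): number | undefined {
  if (value === undefined) return undefined;
  const trimmed = value.trim();

  // Delay-seconds form: a non-negative integer number of seconds.
  if (/^\d+$/.test(trimmed)) {
    return Number(trimmed) * 1000;
  }

  // HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT".
  const date = Date.parse(trimmed);
  if (Number.isNaN(date)) return undefined;

  // A date in the past means "retry now".
  return Math.max(0, date - now);
}
```

For the response above, parseRetryAfterMs("30") gives 30000, which is the value a Wait node would need (in seconds, simply 30).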

Ideal rate limiting handling

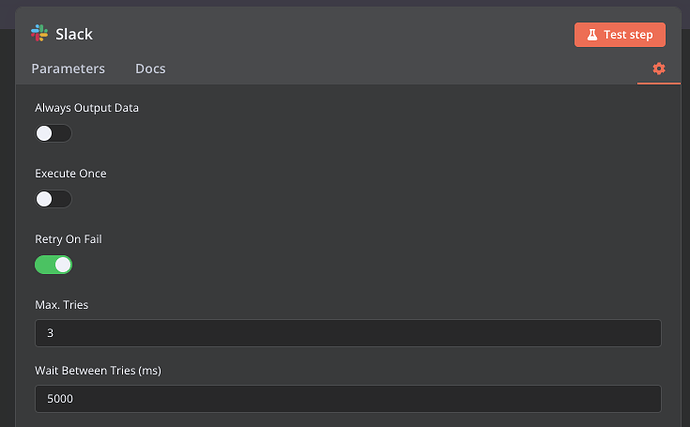

We could avoid reaching the rate limit by forcing a fixed wait between requests, like this:

The limitations of this approach are:

- We would be forced to be conservative in our estimations due to how Slack defines rate limits, so we would be waiting longer than needed

- We would not be accounting for other workflows running in parallel at the same time, which also consume the Slack API and could push us over the limit
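The fixed-wait approach boils down to simple arithmetic, which also makes the second limitation visible. In this sketch the limit of 20 requests per minute is purely an illustrative assumption, not a documented value for any particular Slack method, and both function names are hypothetical.

```typescript
// Fixed pacing: space requests evenly so that a single workflow stays under
// an assumed per-minute limit, which has to be chosen conservatively up front.
function fixedDelayMs(requestsPerMinute: number): number {
  if (requestsPerMinute <= 0) {
    throw new RangeError("requestsPerMinute must be positive");
  }
  return Math.ceil(60000 / requestsPerMinute);
}

// Combined request rate when several workflows pace themselves independently
// against the same Slack workspace/token.
function combinedRequestsPerMinute(delayMs: number, parallelWorkflows: number): number {
  return parallelWorkflows * (60000 / delayMs);
}

const delay = fixedDelayMs(20); // 3000 ms between requests
const combined = combinedRequestsPerMinute(delay, 2); // 40 requests per minute
```

Two workflows each waiting 3 seconds between calls already send 40 requests per minute between them, twice the assumed limit, because neither knows about the other.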

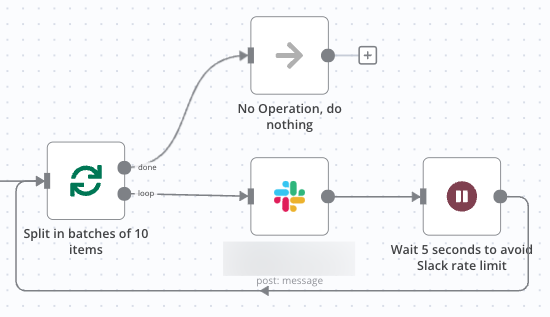

The ideal approach would be to wait only when the rate limit is actually reached, something that can be implemented in n8n like this:

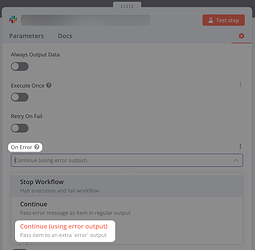
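In n8n this loop is built out of nodes (the Slack node set to continue on error, a check on the error, and a Wait node). Expressed in plain TypeScript, the same control flow could look roughly like the sketch below. The send function, the HttpResult shape and the retry cap are assumptions for illustration, not n8n or Slack SDK APIs; sleep is injectable so the logic can be exercised without calling Slack.

```typescript
// Minimal response shape for this sketch; not an n8n or Slack SDK type.
interface HttpResult {
  status: number;
  headers: Record<string, string | undefined>;
  body: unknown;
}

type Sender = () => Promise<HttpResult>;
type Sleeper = (ms: number) => Promise<void>;

const realSleep: Sleeper = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Send the request; only when the API answers 429 wait for Retry-After
// seconds (or a fallback delay if the header is missing) and try again.
async function sendWithRateLimitRetry(
  send: Sender,
  maxRetries = 3,
  sleep: Sleeper = realSleep,
  fallbackDelayMs = 1000,
): Promise<HttpResult> {
  let attempt = 0;
  while (true) {
    const result = await send();
    // Not rate limited, or out of retries: hand the result back unchanged.
    if (result.status !== 429 || attempt >= maxRetries) {
      return result;
    }
    const header = result.headers["retry-after"]?.trim();
    const delayMs =
      header !== undefined && /^\d+$/.test(header) ? Number(header) * 1000 : fallbackDelayMs;
    await sleep(delayMs);
    attempt++;
  }
}
```

Capping the retries keeps a persistently rate-limited workspace from looping forever, and no time is spent waiting unless Slack actually answers with a 429.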

This works because n8n already allows configuring the Slack node to continue even when an error occurs, in which case it returns the error output (note the emphasis here) with:

Slack node limitations

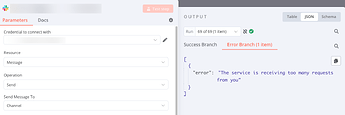

The problem is that, when the Slack API rate limit is reached, the Slack node does not expose the Retry-After HTTP header mentioned above, so it cannot be used as an input value to parametrize the Wait node. Instead, it only returns the error message:

If we do not modify the Slack node “On Error” setting and leave the default behaviour (Stop Workflow), it shows a little more information:

Node implementation

Looking at the node implementation, I thought it could be as simple as adding something similar to the following code at this point:

```typescript
else if (response.error === 'ratelimited') {
  const retryAfter = response.headers['retry-after'];
  throw new NodeOperationError(
    this.getNode(),
    'The service is receiving too many requests from you',
    {
      description: `You should wait ${retryAfter} seconds before making another request`,
      level: 'warning',
      messageMapping: { retryAfter },
    },
  );
}
```

However, after digging a little further (running n8n locally to reproduce the error and test the change), it seems that wild guess does not make any sense.

It seems that the this.helpers.requestWithAuthentication call already handles the 429 response code and throws a NodeApiError, so the node never gets a chance to handle the rate limit case itself.
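If the helper throws before the node sees the response, one possible direction is to catch the error and look for the original response headers on it. Whether NodeApiError (or its cause) actually keeps the HTTP response headers is an assumption I have not confirmed; the property paths below (response.headers and cause.response.headers) are guesses to be checked in a debugger, and retryAfterFromError is a hypothetical helper.

```typescript
// Hypothetical helper. ASSUMPTION: the thrown error (or its `cause`) carries the
// original HTTP response under `response.headers`. This is NOT a confirmed n8n
// type; inspect the real error object and adjust the property paths.
function retryAfterFromError(error: unknown): number | undefined {
  const candidates: unknown[] = [];
  if (typeof error === "object" && error !== null) {
    const e = error as { cause?: unknown; response?: unknown };
    candidates.push(e.response);
    if (typeof e.cause === "object" && e.cause !== null) {
      candidates.push((e.cause as { response?: unknown }).response);
    }
  }

  // Return the first Retry-After value (in seconds) found on any candidate response.
  for (const response of candidates) {
    if (typeof response !== "object" || response === null) continue;
    const headers = (response as { headers?: Record<string, unknown> }).headers;
    const value = headers?.["retry-after"];
    if (typeof value === "string" && /^\d+$/.test(value.trim())) {
      return Number(value.trim());
    }
  }
  return undefined;
}
```

Inside the node, this would sit in a try/catch around the request helper call: when a value is found, the node could rethrow an error (or return an item) that carries retryAfter so the Wait node can use it as an input; otherwise it rethrows the original error unchanged.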

Question

I am looking for a little guidance here. I have set up the development environment (congrats, by the way, on having such a straightforward process and instructions) and tried to understand the paradigm behind the nodes' GenericFunctions.ts files and the difference between NodeOperationError and NodeApiError, but I feel like 5 minutes of someone pointing me in the right direction could save me a few more hours of digging.

Thanks!

Information on your n8n setup

- n8n version: 1.41.0

- Database: default

- n8n EXECUTIONS_PROCESS setting: default

- Running n8n via: pnpm

- Operating system: macOS