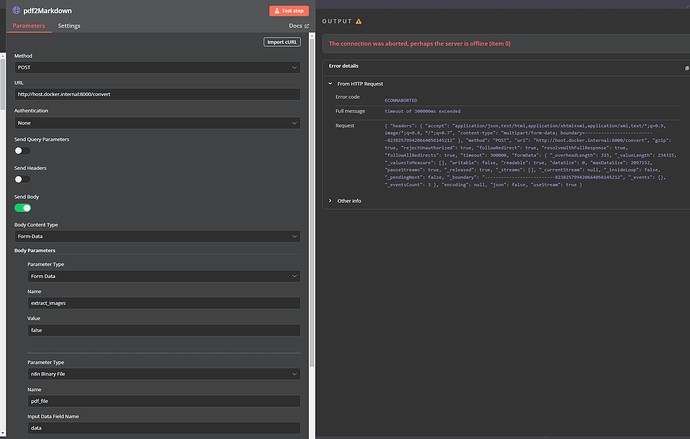

I’m using the savatar101/marker-api:0.3 image to convert PDFs to Markdown. Running in a Docker container, the API handles a single 10-page PDF without problems. But when I send 10 such PDFs concurrently, the request fails with the following error:

The connection was aborted, perhaps the server is offline [item 0]

Error details

From HTTP Request

Error code

ECONNABORTED

Full message

timeout of 300000ms exceeded

Request

`{ "headers": { "accept": "application/json,text/html,application/xhtml+xml,application/xml,text/*;q=0.9, image/*;q=0.8, */*;q=0.7", "content-type": "multipart/form-data; boundary=--------------------------823825799420664056145212" }, "method": "POST", "uri": "http://host.docker.internal:8000/convert", "gzip": true, "rejectUnauthorized": true, "followRedirect": true, "resolveWithFullResponse": true, "followAllRedirects": true, "timeout": 300000, "formData": { "_overheadLength": 315, "_valueLength": 234315, "_valuesToMeasure": [], "writable": false, "readable": true, "dataSize": 0, "maxDataSize": 2097152, "pauseStreams": true, "_released": true, "_streams": [], "_currentStream": null, "_insideLoop": false, "_pendingNext": false, "_boundary": "--------------------------823825799420664056145212", "_events": {}, "_eventsCount": 3 }, "encoding": null, "json": false, "useStream": true }`

Even after n8n is stopped, the Docker container keeps processing files, using 80% CPU and 35 GB of the 64 GB of RAM.
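One way to check whether the failure depends on load is to send the same files from outside n8n with a cap on how many requests are in flight at once. The following is only a minimal sketch under stated assumptions: the URL is taken from the request above, while the form field name `pdf_file`, the helper names, and the default cap of 2 are illustrative guesses rather than confirmed details of marker-api.

```python
import os
import uuid
import urllib.request
from concurrent.futures import ThreadPoolExecutor

CONVERT_URL = "http://host.docker.internal:8000/convert"  # URI from the request above
FILE_FIELD = "pdf_file"  # assumption: form field name the API expects


def build_multipart(field, filename, data):
    """Encode one file as a multipart/form-data body; returns (body, content_type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/pdf\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def convert_pdf(path, timeout=300):
    """POST one PDF to the conversion endpoint and return the raw response bytes."""
    with open(path, "rb") as fh:
        body, content_type = build_multipart(
            FILE_FIELD, os.path.basename(path), fh.read()
        )
    req = urllib.request.Request(
        CONVERT_URL, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    # timeout is in seconds; 300 matches the 300000 ms used by the n8n request
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()


def convert_all(paths, worker=convert_pdf, max_in_flight=2):
    """Run worker over paths with at most max_in_flight calls at once.

    Results are returned in the same order as the input paths.
    """
    with ThreadPoolExecutor(max_workers=max_in_flight) as pool:
        return list(pool.map(worker, paths))
```

Calling `convert_all(list_of_pdf_paths, max_in_flight=1)` sends the files one after another; raising the cap step by step would show at what level of concurrency the timeouts begin.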

I appreciate any help you can give.

Information on your n8n setup

- n8n version: 1.74.2

- Database (default: SQLite)

- n8n EXECUTIONS_PROCESS setting (default: own, main): default / I don’t know

- Running n8n via Docker

- Operating system: Windows 11 24H2