Hello everyone,

I’m encountering a strange issue with n8n and would appreciate your help in resolving it.

Context

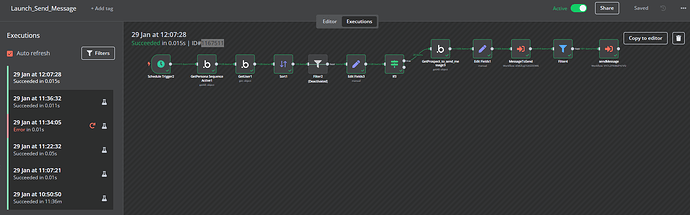

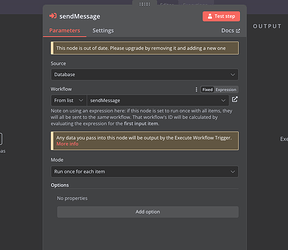

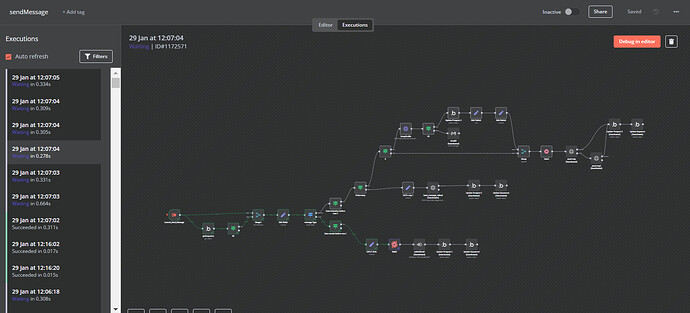

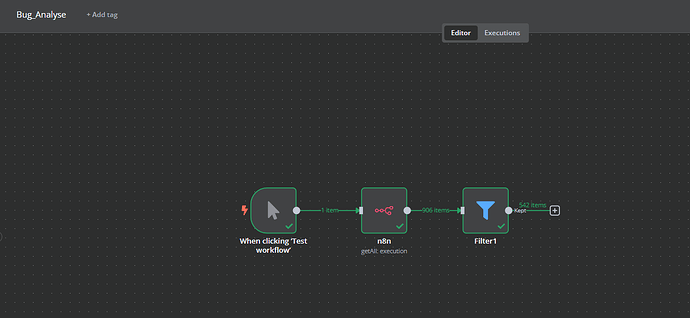

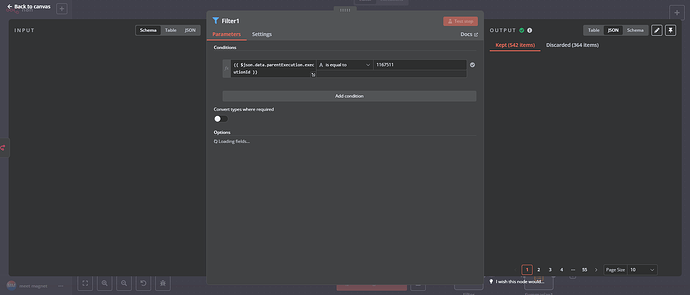

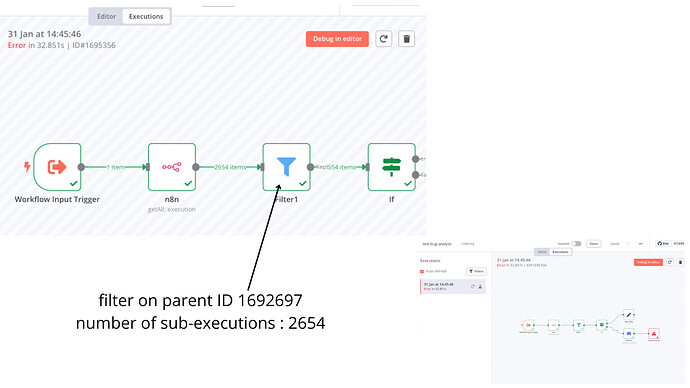

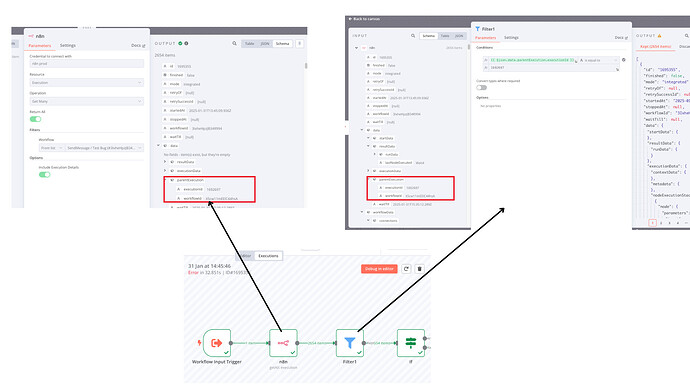

- I have a parent workflow that triggers a child workflow (named `sendMessage`) using the Execute Workflow node.
- Normally, the parent sends 181 items to the child workflow, so I expect to see 181 executions in `sendMessage`.
- Problem: In the logs, I observe that `sendMessage` has been executed ~542 times for the same parent execution (same ID).
- Resulting issue: There is an unexpected surplus of duplicate executions.

Let me explain what happens:

My trigger node, “sendMessage”, in the workflow “Launch_send_message”, sends 181 items to the workflow “sendMessage”.

In the “sendMessage” workflow, I receive the data once for each item from the node “Launch_Send_message”, so there should normally be 181 executions. However, instead of 181 executions there were approximately 542, including duplicate executions with the same data, the same IDs, and identical information.
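Since 542 is almost exactly 3 × 181 (= 543), it may help to check whether every item ran about three times or whether only some items were repeated. A minimal sketch, assuming the child executions' input items have been exported as a list of JSON-like dicts (the `item_id` field and the toy data are hypothetical), groups identical payloads and reports the repetition factor:

```python
import json
from collections import Counter
from typing import Any


def duplicate_report(records: list[dict[str, Any]]) -> dict[str, Any]:
    """Summarise how often each distinct input item was executed.

    `records` is a hypothetical export: one dict per child execution,
    holding the item payload that execution received.
    """
    # Serialise each payload with sorted keys so identical dicts compare equal.
    counts = Counter(json.dumps(r, sort_keys=True) for r in records)
    distinct = len(counts)
    total = sum(counts.values())
    return {
        "total_executions": total,
        "distinct_items": distinct,
        "factor": total / distinct if distinct else 0.0,
        "max_repeats": max(counts.values(), default=0),
    }


if __name__ == "__main__":
    # Toy data: 181 distinct items, each seen 3 times, minus one copy -> 542 runs.
    items = [{"item_id": i} for i in range(181)]
    records = items * 3
    records.pop()
    print(duplicate_report(records))
```

A factor close to 3 with `max_repeats` equal to 3 would suggest the whole batch was processed roughly three times, rather than a few items being retried.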

Do you know why this is happening?

Environment

- n8n is deployed using Docker and Docker Compose.

- Execution mode: `EXECUTIONS_MODE=queue`.

- 1 main `n8n` container (handles the interface, etc.) and 6 workers (`n8n-worker-X`), as well as `n8n-webhook-X` services.

- Database: Postgres 16.

- Queue: Redis 6.

- Proxy: Traefik.

What I Have Already Checked

- The parent workflow does not contain any loops or multiplier processes (e.g., misconfigured Split in Batches).

- In the logs, all 542 executions of `sendMessage` are linked to the same parent `executionId`.

- I noticed that in the main container I did not explicitly set `N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true`.

- This means the main process might also be executing the 181 items in addition to the workers.

- I am using multiple workers, which could be further multiplying the executions.
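On whether more workers means more executions: in queue mode the main instance puts each execution on the Redis queue and a worker picks it up, so the intended pattern is competing consumers. The toy model below is plain Python (`queue.Queue` plus threads, not n8n or Bull code) and assumes each job is handed to exactly one consumer. Under that assumption, the worker count changes who runs a job but not how often; only handing the same job out again (the hypothetical `redeliveries` parameter) multiplies the count.

```python
import queue
import threading
from collections import Counter


def run_model(num_jobs: int, num_workers: int, redeliveries: int = 0) -> Counter:
    """Toy competing-consumers model: N workers drain one shared queue.

    Each job is enqueued once, plus `redeliveries` extra copies to mimic
    the same job being handed out again. Returns how many times each job ran.
    """
    jobs: "queue.Queue[int]" = queue.Queue()
    for job_id in range(num_jobs):
        for _ in range(1 + redeliveries):
            jobs.put(job_id)

    runs: Counter = Counter()
    lock = threading.Lock()

    def worker() -> None:
        while True:
            try:
                # get_nowait() hands each queued entry to exactly one worker.
                job_id = jobs.get_nowait()
            except queue.Empty:
                return  # all jobs were enqueued up front, so empty means done
            with lock:
                runs[job_id] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return runs


if __name__ == "__main__":
    for workers in (1, 6):
        print(workers, "worker(s):", sum(run_model(181, workers).values()), "executions")
    print("6 workers, 2 redeliveries:", sum(run_model(181, 6, redeliveries=2).values()))
```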

Questions

- Why is n8n executing the same items multiple times?

- Are there specific environment variables (e.g., `EXECUTIONS_WORKER=queue`, `EXECUTIONS_WORKER_PROCESS`, `N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true`, `QUEUE_HEALTH_CHECK_ACTIVE`, etc.) that I might have forgotten or misconfigured?

- There are several environment variables available, but which ones should I use? (Reference: n8n Queue Mode Environment Variables Documentation.)
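To see at a glance which of these variables a service actually sets, a small helper can parse Compose's list-style `environment:` entries into a dict and report missing keys. The checklist below only contains names that appear in this post; whether each one is needed is exactly what is being asked, so it is a starting point, not a recommendation.

```python
def parse_env_list(entries: list[str]) -> dict[str, str]:
    """Turn Compose list-style entries like 'KEY=value' into a dict."""
    env: dict[str, str] = {}
    for entry in entries:
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = entry.partition("=")
        # A bare 'KEY' (no '=') is recorded here with an empty value.
        env[key.strip()] = value if sep else ""
    return env


def missing_keys(env: dict[str, str], checklist: list[str]) -> list[str]:
    """Return the checklist entries that are not set in `env`."""
    return [k for k in checklist if k not in env]


# Hypothetical checklist built only from names mentioned in the question.
CHECKLIST = [
    "EXECUTIONS_MODE",
    "QUEUE_BULL_REDIS_HOST",
    "QUEUE_HEALTH_CHECK_ACTIVE",
    "N8N_DISABLE_PRODUCTION_MAIN_PROCESS",
]

if __name__ == "__main__":
    # A subset of the x-shared environment list from the compose file.
    shared_env = parse_env_list([
        "EXECUTIONS_MODE=queue",
        "QUEUE_BULL_REDIS_HOST=redis",
        "QUEUE_HEALTH_CHECK_ACTIVE=true",
        "WEBHOOK_URL=https://n8n.exemple.fr/",
    ])
    print(missing_keys(shared_env, CHECKLIST))  # prints ['N8N_DISABLE_PRODUCTION_MAIN_PROCESS']
```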

Thank You in Advance

Any suggestions or potential solutions are welcome!

If you have examples of functional docker-compose configurations in queue mode with multiple workers, I would greatly appreciate it.

Thank you for your assistance!

Additional Information:

For reference, here is my current docker-compose.yml configuration:

```yaml
version: '3.8'

volumes:
  db_storage:
  n8n_storage:
  redis_storage:
  traefik_data:
  ollama_storage:

x-shared: &shared
  restart: always
  image: docker.n8n.io/n8nio/n8n
  environment:
    - DB_TYPE=postgresdb
    - DB_POSTGRESDB_HOST=postgres
    - DB_POSTGRESDB_PORT=5432
    - DB_POSTGRESDB_DATABASE=${POSTGRES_DB}
    - DB_POSTGRESDB_USER=${POSTGRES_NON_ROOT_USER}
    - DB_POSTGRESDB_PASSWORD=${POSTGRES_NON_ROOT_PASSWORD}
    - EXECUTIONS_MODE=queue
    - QUEUE_BULL_REDIS_HOST=redis
    - QUEUE_HEALTH_CHECK_ACTIVE=true
    - N8N_ENCRYPTION_KEY=${ENCRYPTION_KEY}
    - N8N_BASIC_AUTH_ACTIVE=true
    - N8N_BASIC_AUTH_USER=${N8N_BASIC_AUTH_USER}
    - N8N_BASIC_AUTH_PASSWORD=${N8N_BASIC_AUTH_PASSWORD}
    - N8N_HOST=n8n.exemple.fr
    - N8N_PORT=5678
    - N8N_PROTOCOL=https
    - WEBHOOK_URL=https://n8n.exemple.fr/
  links:
    - postgres
    - redis
  volumes:
    - n8n_storage:/home/node/.n8n
  depends_on:
    redis:
      condition: service_healthy
    postgres:
      condition: service_healthy

x-ollama: &service-ollama
  image: ollama/ollama:latest
  container_name: ollama
  restart: unless-stopped
  volumes:
    - ollama_storage:/root/.ollama

services:
  traefik:
    image: traefik:latest
    container_name: traefik
    networks:
      - default
    restart: unless-stopped
    command:
      - "--api=true"
      - "--api.insecure=true"
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"
      - "--entrypoints.web.address=:80"
      - "--entrypoints.web.http.redirections.entryPoint.to=websecure"
      - "--entrypoints.web.http.redirections.entrypoint.scheme=https"
      - "--entrypoints.websecure.address=:443"
      - "--certificatesresolvers.mytlschallenge.acme.tlschallenge=true"
      - "--certificatesresolvers.mytlschallenge.acme.email=${SSL_EMAIL}"
      - "--certificatesresolvers.mytlschallenge.acme.storage=/letsencrypt/acme.json"
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - traefik_data:/letsencrypt
      - /var/run/docker.sock:/var/run/docker.sock:ro

  postgres:
    image: postgres:16
    restart: always
    environment:
      - POSTGRES_USER=${POSTGRES_USER}
      - POSTGRES_PASSWORD=${POSTGRES_PASSWORD}
      - POSTGRES_DB=${POSTGRES_DB}
      - POSTGRES_NON_ROOT_USER=${POSTGRES_NON_ROOT_USER}
      - POSTGRES_NON_ROOT_PASSWORD=${POSTGRES_NON_ROOT_PASSWORD}
    volumes:
      - db_storage:/var/lib/postgresql/data
      - ./init-data.sh:/docker-entrypoint-initdb.d/init-data.sh
    healthcheck:
      test: ['CMD-SHELL', 'pg_isready -h localhost -U ${POSTGRES_USER} -d ${POSTGRES_DB}']
      interval: 5s
      timeout: 5s
      retries: 10

  redis:
    image: redis:6-alpine
    restart: always
    volumes:
      - redis_storage:/data
    healthcheck:
      test: ['CMD', 'redis-cli', 'ping']
      interval: 5s
      timeout: 5s
      retries: 10

  n8n:
    <<: *shared
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.n8n.rule=Host(`n8n.exemple.fr`)"
      - "traefik.http.routers.n8n.entrypoints=websecure"
      - "traefik.http.routers.n8n.tls=true"
      - "traefik.http.routers.n8n.tls.certresolver=mytlschallenge"
    deploy:
      resources:
        limits:
          cpus: "1"

  n8n-worker-1:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-worker-2:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-worker-3:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-worker-4:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-worker-5:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-worker-6:
    <<: *shared
    command: worker
    deploy:
      resources:
        limits:
          cpus: "1"
    depends_on:
      - n8n

  n8n-webhook-1:
    <<: *shared
    command: webhook
    deploy:
      resources:
        limits:
          cpus: "0.5"
    depends_on:
      - n8n

  n8n-webhook-2:
    <<: *shared
    command: webhook
    deploy:
      resources:
        limits:
          cpus: "0.5"
    depends_on:
      - n8n

  ollama-cpu:
    <<: *service-ollama
```
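Every n8n service in this file is built with `<<: *shared`. A YAML merge key performs a shallow merge: any key a service defines itself replaces the shared value wholesale instead of being combined with it. The sketch below approximates that with a plain Python dict merge (an illustration of merge-key semantics, not a YAML parser), using the `depends_on` values transcribed from the file, to show what a worker service ends up with.

```python
from typing import Any

# Values transcribed from the compose file (only the keys relevant here).
SHARED: dict[str, Any] = {
    "restart": "always",
    "image": "docker.n8n.io/n8nio/n8n",
    "depends_on": {
        "redis": {"condition": "service_healthy"},
        "postgres": {"condition": "service_healthy"},
    },
}

WORKER_LOCAL: dict[str, Any] = {
    "command": "worker",
    "depends_on": ["n8n"],
}


def yaml_merge(base: dict[str, Any], local: dict[str, Any]) -> dict[str, Any]:
    """Approximate `<<: *base`: local keys win, and nothing is merged deeply."""
    return {**base, **local}


if __name__ == "__main__":
    worker = yaml_merge(SHARED, WORKER_LOCAL)
    # The worker's own depends_on replaced the shared redis/postgres conditions.
    print(worker["depends_on"])  # prints ['n8n']
```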