Hey everyone, I’m using n8n Cloud (v1.48.1) and running into an issue when trying to convert a Twilio MMS image into a base64 string.

Goal

When someone sends a photo to my Twilio MMS number, I want to:

- Download the image

- Convert it to a base64 string

- Use that base64 string in a downstream API (Google Vision, enrichment, AI, etc.)

Current Flow

- Webhook receives MMS

- Download MMS Image node successfully saves the image (image shows as binary)

- Encode to Base64 node uses this code:

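```javascript
// n8n Code node, "Run Once for Each Item" mode ($input.item is the current item)
const item = $input.item;

// Names of the binary properties on this item (e.g. "data")
const binaryKeys = Object.keys(item.binary || {});
const key = binaryKeys[0];

if (key && item.binary[key]?.data) {
  // Copies the stored "data" field as-is. When n8n keeps binary data on disk,
  // this field holds a placeholder ("filesystem-v2"), not the base64 bytes.
  item.json.base64 = item.binary[key].data;
} else {
  item.json.base64 = null;
}

return item;
```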

Instead of an actual base64 string, I get:

`"base64": "filesystem-v2"`

Problem

The `filesystem-v2` string isn't usable: it appears to be a placeholder indicating that the file is stored on disk rather than in memory. My downstream logic fails because there's no real base64 string.
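A minimal sketch of a Code node that avoids the placeholder: instead of copying `item.binary[key].data`, it asks n8n for the file's actual bytes via `this.helpers.getBinaryDataBuffer(itemIndex, binaryPropertyName)`, which the n8n docs describe for reading binary data in a Code node, and converts them with `buffer.toString("base64")`. It assumes the Code node is set to "Run Once for All Items" and that this helper is available on your n8n version; `addBase64` is a hypothetical name, and the buffer reader is passed in as a parameter only so the logic can be exercised outside n8n.

```javascript
// Sketch for an n8n Code node in "Run Once for All Items" mode.
// addBase64 is a hypothetical helper name, not part of n8n.
// getBuffer(itemIndex, binaryKey) must resolve to a Node.js Buffer with the file bytes.
async function addBase64(items, getBuffer) {
  const results = [];
  for (let i = 0; i < items.length; i++) {
    const item = items[i];
    // Use the first binary property, as the original code does.
    const key = Object.keys(item.binary || {})[0];
    let base64 = null;
    if (key) {
      // Read the real bytes instead of item.binary[key].data, which may only
      // be a storage placeholder such as "filesystem-v2".
      const buffer = await getBuffer(i, key);
      base64 = buffer.toString("base64");
    }
    // Keep the binary data attached so later nodes can still use the file.
    results.push({ json: { ...item.json, base64 }, binary: item.binary });
  }
  return results;
}

// Inside the Code node, the call would look like this (assumption: the
// getBinaryDataBuffer helper is exposed on `this.helpers` in your n8n version):
// return await addBase64($input.all(), (i, key) => this.helpers.getBinaryDataBuffer(i, key));
```

Because the bytes are requested through n8n rather than read from the item object, this approach should behave the same whether the binary data is held in memory or offloaded to disk.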

What I Need Help With

- How can I force n8n to read the binary image into memory and get the actual base64 string?

- Is there a better way to encode binary to base64 reliably, even if the file is offloaded to disk?

- Does this behavior differ between test and production execution modes?

- Are there env vars or settings I can tweak to avoid this?

Would love any ideas or working examples.

Thanks

Hey @n8nnewbie48, hope all is good.

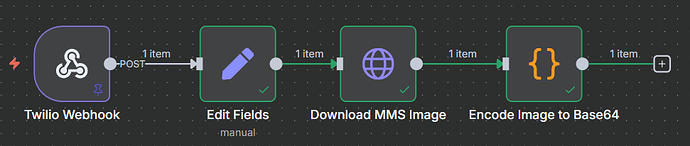

I think this workflow is all you need to get the base64 encoding of the image received via Twilio MMS.

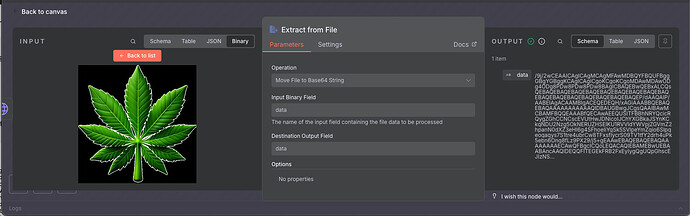

Above you can see the Webhook node, configured as the Twilio webhook. The HTTP Request node then downloads `MediaUrl0` (the attached image) and passes it to the Extract from File node, which converts the image into a base64 string you can use downstream.

Here is the result of executing the last node, featuring the base64-encoded image of a beautiful green maple leaf:

Result
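In the workflow above, the HTTP Request node downloads only `MediaUrl0`. Twilio's webhook also sends `NumMedia` plus `MediaUrl{N}` and `MediaContentType{N}` for each attachment, so a message with several photos carries several URLs. A small sketch, assuming the Webhook node puts the form fields under `$json.body` (the usual shape for a form-encoded POST); `listTwilioMedia` is a hypothetical name. Depending on your Twilio account settings, the media URLs may also require HTTP Basic authentication with your Account SID and Auth Token in the HTTP Request node.

```javascript
// Hypothetical helper: lists the media attached to an incoming Twilio
// message, given the parsed webhook body (in n8n usually $json.body).
// Twilio sends NumMedia plus MediaUrl{N} / MediaContentType{N} for N = 0, 1, ...
function listTwilioMedia(body) {
  const count = Number.parseInt(body.NumMedia ?? "0", 10) || 0;
  const media = [];
  for (let n = 0; n < count; n++) {
    const url = body[`MediaUrl${n}`];
    if (!url) continue; // skip missing entries defensively
    media.push({ url, contentType: body[`MediaContentType${n}`] ?? null });
  }
  return media;
}

// Example with one attached image (placeholder URL, not a real Twilio link).
const oneImage = { NumMedia: "1", MediaUrl0: "https://example.com/media/0", MediaContentType0: "image/jpeg" };
console.log(listTwilioMedia(oneImage));
```

In a Code node, `return listTwilioMedia($json.body).map((m) => ({ json: m }));` would emit one item per attachment, so the HTTP Request node downloads each image in turn.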


Hi, this was helpful, thank you! Just wondering: if the image contains text, how would you go about extracting that text within this workflow? Thank you.

There are a number of ways to extract text from an image in n8n, for example:

- External OCR APIs, like ocr.space

- Local OCR systems running alongside your Docker container (if self-hosting)

- AI assistants that can analyze the image and extract the text; these can be either locally running models or external LLMs
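For the external-API route, the original question already mentions Google Vision. As a sketch, assuming you call the Cloud Vision `images:annotate` REST endpoint (`https://vision.googleapis.com/v1/images:annotate`) from an HTTP Request node, the request body wraps the plain base64 string, without a `data:` prefix, and asks for `TEXT_DETECTION`. `buildVisionOcrRequest` is a hypothetical name, and authentication (API key or OAuth) is not shown.

```javascript
// Hypothetical helper: builds a Cloud Vision images:annotate request body
// for OCR from a raw base64 string (no "data:image/...;base64," prefix).
function buildVisionOcrRequest(base64) {
  if (typeof base64 !== "string" || base64.length === 0) {
    throw new Error("expected a non-empty base64 string");
  }
  return {
    requests: [
      {
        image: { content: base64 },
        // TEXT_DETECTION suits short text in photos; DOCUMENT_TEXT_DETECTION
        // is the Vision feature aimed at dense text such as scanned pages.
        features: [{ type: "TEXT_DETECTION" }],
      },
    ],
  };
}

// Example: the JSON body you would send from the HTTP Request node.
console.log(JSON.stringify(buildVisionOcrRequest("aGVsbG8=")));
```

In the response, the detected text is typically found under `responses[0].fullTextAnnotation.text`.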

Here is an example with AI, using OpenAI:

Result showing the input image passed to the AI Agent and the output with the recognized text.
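The AI Agent configuration in the screenshot is not reproduced here. As an alternative sketch, assuming you call OpenAI's Chat Completions API (`POST https://api.openai.com/v1/chat/completions`) directly from an HTTP Request node, the base64 string from Extract from File can be sent as a `data:` URL inside an `image_url` content part. The model name and prompt are assumptions, `buildOpenAiOcrRequest` is a hypothetical name, and the MIME type should match the downloaded file (Twilio's webhook reports it as `MediaContentType0`).

```javascript
// Hypothetical helper: builds a Chat Completions request body asking a
// vision-capable model to transcribe the text in an image.
function buildOpenAiOcrRequest(base64, mimeType = "image/jpeg", model = "gpt-4o-mini") {
  // OpenAI expects a data URL here rather than bare base64.
  const dataUrl = `data:${mimeType};base64,${base64}`;
  return {
    model, // assumption: any vision-capable model available to your account
    messages: [
      {
        role: "user",
        content: [
          { type: "text", text: "Extract all text visible in this image. Reply with the text only." },
          { type: "image_url", image_url: { url: dataUrl } },
        ],
      },
    ],
  };
}

console.log(JSON.stringify(buildOpenAiOcrRequest("aGVsbG8=", "image/png")));
```

The model's reply, and therefore the recognized text, comes back in `choices[0].message.content`.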

If you found these answers helpful, kindly mark the most helpful one as solution.

Thank you.

Cheers

@jabbson, your responses have been very helpful. Thank you for taking the time to walk me through a few of my questions. I’m using AI to help build my app, and the results can be hit or miss sometimes.
