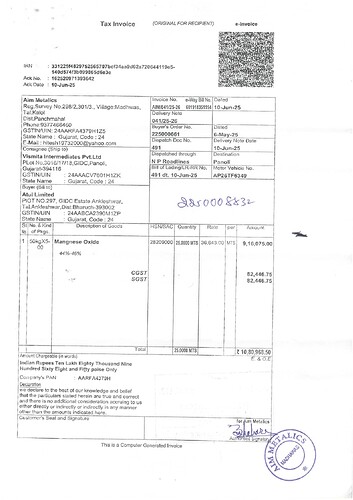

I am trying to extract information from invoices. I used the Extract from PDF node, but it only works for digital PDFs and does not extract data from scanned PDFs. What is the best approach to extract information from scanned invoices?

Hey @Chandana, hope all is well.

What you need is OCR (optical character recognition), which recognizes the text in the page images inside a scanned PDF.
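One common way to combine the two is to run Extract from PDF first and send the file to OCR only when it comes back (nearly) empty, because a scanned PDF has no text layer. Below is a minimal Python sketch of that check. `needs_ocr` is a hypothetical helper, not an n8n API. The 25-characters-per-page threshold is an arbitrary assumption that you would tune on your own invoices.

```python
def needs_ocr(extracted_text: str, page_count: int, min_chars_per_page: int = 25) -> bool:
    """Return True when text extraction produced too little text to be a digital PDF."""
    # A scanned PDF has no text layer, so extraction yields little or nothing.
    pages = max(page_count, 1)  # guard against a zero or unknown page count
    # Count only non-whitespace characters; scanned pages often yield blank lines.
    visible = sum(1 for ch in extracted_text if not ch.isspace())
    return visible / pages < min_chars_per_page


if __name__ == "__main__":
    print(needs_ocr("", 2))  # True: no text layer, route to OCR
```

In n8n, the same condition could live in an IF node or a Code node placed between Extract from PDF and the OCR call.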

I recommend Mistral, but there is more than one way to skin this cat.
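If you want to call Mistral's dedicated OCR endpoint yourself, for example from an HTTP Request node or a small script, here is a minimal standard-library Python sketch. Several details come from my reading of Mistral's docs and should be checked against the current API reference: the endpoint URL, the `mistral-ocr-latest` model alias, passing the PDF as a base64 data URL in `document_url`, and the `pages[].markdown` response shape. `build_ocr_payload` and `run_ocr` are hypothetical helper names. Reading the key from a `MISTRAL_API_KEY` environment variable is also an assumption.

```python
import base64
import json
import os
import urllib.request
from typing import List

# Assumed endpoint; verify against Mistral's OCR documentation.
MISTRAL_OCR_URL = "https://api.mistral.ai/v1/ocr"


def build_ocr_payload(pdf_bytes: bytes, model: str = "mistral-ocr-latest") -> dict:
    """Embed the PDF as a base64 data URL so no publicly reachable file URL is needed."""
    encoded = base64.b64encode(pdf_bytes).decode("ascii")
    return {
        "model": model,
        "document": {
            "type": "document_url",
            "document_url": f"data:application/pdf;base64,{encoded}",
        },
    }


def run_ocr(pdf_path: str) -> List[str]:
    """POST the PDF and return one markdown string per page (assumed response shape)."""
    with open(pdf_path, "rb") as fh:
        payload = build_ocr_payload(fh.read())
    req = urllib.request.Request(
        MISTRAL_OCR_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['MISTRAL_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return [page["markdown"] for page in body.get("pages", [])]
```

An HTTP Request node would send the same JSON body with the same two headers.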

Here is an example I built: I use Telegram to upload a PDF, let an LLM agent pull some information out, and print it. You can extend it from here as needed.

PS: this is a super old workflow I built, and a lot of it needs improving, especially the system prompt and the structured output (which could be extended to include line items). For this demo I only needed the totals and bank details for processing invoices.
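To make the structured output stricter, you can validate the LLM's JSON reply before using it, so a missing field fails loudly instead of flowing downstream. Here is a minimal Python sketch of that idea. The field names in `REQUIRED_FIELDS` are hypothetical, chosen to match "totals and bank details", and are not taken from the original workflow. `parse_llm_output` is likewise a hypothetical helper.

```python
import json

# Hypothetical minimal schema: totals plus bank details. Adjust to your own prompt.
REQUIRED_FIELDS = {
    "invoice_number": str,
    "total_amount": str,
    "bank_name": str,
    "account_number": str,
}


def parse_llm_output(raw: str) -> dict:
    """Parse the model's JSON reply and raise ValueError on missing or mistyped fields."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in REQUIRED_FIELDS if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data[key], expected_type):
            raise ValueError(f"{key} should be {expected_type.__name__}")
    # Line items are optional here; default to an empty list.
    data.setdefault("items", [])
    return data
```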

Thank you for your response. I used an HTTP Request node to connect to the OCR service, but it's still extracting some wrong data. I guess it's an OCR issue: instead of numbers, it's extracting letters.
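For fields that must be numeric, a small post-processing step can repair the usual letter-for-digit OCR mix-ups (O for 0, l for 1, S for 5, and so on). Here is a minimal Python sketch. The substitution table is an assumption, so extend it with the confusions you actually see. `normalize_amount` is a hypothetical helper. Only apply it to amount-like fields, never to identifiers such as invoice numbers or GSTINs, where letters are legitimate.

```python
import re
from typing import Optional

# Common OCR letter/digit confusions (assumed; extend as needed).
LETTER_TO_DIGIT = str.maketrans({
    "O": "0", "o": "0", "Q": "0", "D": "0",
    "I": "1", "l": "1", "|": "1",
    "S": "5", "s": "5",
    "B": "8",
    "Z": "2", "z": "2",
})

NUMBER_RE = re.compile(r"\d+(?:\.\d+)?")


def normalize_amount(raw: str) -> Optional[str]:
    """Repair letter/digit mix-ups in a numeric field.

    Returns the cleaned number as a string, or None if it still is not a number,
    so the invoice can be flagged for manual review instead of guessed at.
    """
    cleaned = raw.strip().translate(LETTER_TO_DIGIT)
    cleaned = cleaned.replace(",", "").replace(" ", "")  # drop thousands separators
    return cleaned if NUMBER_RE.fullmatch(cleaned) else None
```

For example, `normalize_amount("9l6,O75.OO")` gives `"916075.00"`. A value like `"12A.5"` gives `None` because `A` is not in the table.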

Thank you for your response. Did you use scanned documents here too, or only digital ones?

If you have an example document you're struggling to OCR, please share it. No guarantees, but we could take a look at what's going on.

These are plain, clean invoice PDFs, not scans of a paper printout. Oh, I see you're actually trying to use scanned documents. Mistral should be the best option for this kind of thing. I can try to push this sample through and see what result I get. For the scanned example above, are your files images as well as PDFs?

Only PDFs.

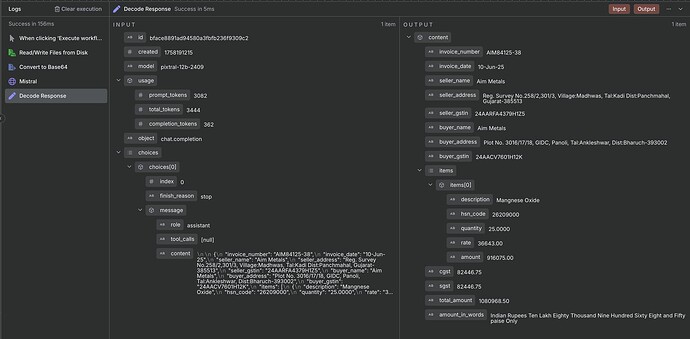

Here is an example using your exact image. You can do the same for a PDF:

```json
{
  "invoice_number": "AIM84125-38",
  "invoice_date": "10-Jun-25",
  "seller_name": "Aim Metals",
  "seller_address": "Reg. Survey No.258/2,301/3, Village:Madhwas, Tal:Kadi Dist:Panchmahal, Gujarat-385513",
  "seller_gstin": "24AARFA4379H1Z5",
  "buyer_name": "Aim Metals",
  "buyer_address": "Plot No. 3016/17/18, GIDC, Panoli, Tal:Ankleshwar, Dist:Bharuch-393002",
  "buyer_gstin": "24AACV7601H12K",
  "items": [
    {
      "description": "Mangnese Oxide",
      "hsn_code": "26209000",
      "quantity": "25.0000",
      "rate": "36643.00",
      "amount": "916075.00"
    }
  ],
  "cgst": "82446.75",
  "sgst": "82446.75",
  "total_amount": "1080968.50",
  "amount_in_words": "Indian Rupees Ten Lakh Eighty Thousand Nine Hundred Sixty Eight and Fifty paise Only"
}
```
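The numbers in this result are internally consistent: 25 × 36,643.00 = 916,075.00, and 916,075.00 + 2 × 82,446.75 = 1,080,968.50. That makes a cheap arithmetic cross-check one way to catch the letter-for-digit misreads mentioned earlier in the thread, since a misread digit usually breaks at least one sum. Here is a minimal Python sketch. `check_invoice` is a hypothetical helper. The tolerance parameter is an assumption to allow for invoices that round off totals. Values that are not valid numbers raise `decimal.InvalidOperation`, which is itself a useful signal.

```python
from decimal import Decimal
from typing import List

TAX_FIELDS = ("cgst", "sgst", "igst")  # a missing tax field is treated as 0


def check_invoice(inv: dict, tolerance: Decimal = Decimal("0.01")) -> List[str]:
    """Return arithmetic inconsistencies; an empty list means the numbers agree."""
    problems = []
    items_total = Decimal("0")
    for i, item in enumerate(inv.get("items", [])):
        qty = Decimal(item["quantity"])
        rate = Decimal(item["rate"])
        amount = Decimal(item["amount"])
        if abs(qty * rate - amount) > tolerance:
            problems.append(f"item {i}: {qty} x {rate} != {amount}")
        items_total += amount
    taxes = sum((Decimal(inv.get(k, "0")) for k in TAX_FIELDS), Decimal("0"))
    expected = items_total + taxes
    total = Decimal(inv["total_amount"])
    if abs(expected - total) > tolerance:
        problems.append(f"items + taxes = {expected}, but total_amount = {total}")
    return problems
```

Run against the JSON above, it should return an empty list. If `total_amount` came back as `"1030968.50"` (an 8 read as a 3), it would report one problem.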

Based on the Mistral documentation, the OCR functionality appears to be part of their vision-capable chat models. So you can give the prompt to Mistral directly instead of relying on an n8n AI Agent to extract the information with ChatGPT or Claude.

Note: this does not use the OCR model directly, but the vision API endpoint. Hope this helps!
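Here is a minimal standard-library Python sketch of that vision-endpoint approach. It sends the invoice image plus an extraction prompt to the chat completions endpoint and asks for a JSON reply. Several details are assumptions based on Mistral's docs: the model name `pixtral-12b-2409`, passing `image_url` as a plain data-URL string, and `response_format` support. Verify them before use. `build_vision_payload`, `extract`, and the prompt wording are hypothetical. For a multi-page scanned PDF, you would either render each page to an image first or use a document input, if the API you target supports one.

```python
import base64
import json
import os
import urllib.request

MISTRAL_CHAT_URL = "https://api.mistral.ai/v1/chat/completions"

# Hypothetical prompt; field names mirror the JSON result shown earlier.
PROMPT = (
    "Extract invoice_number, invoice_date, seller_name, buyer_name, cgst, sgst "
    "and total_amount from this invoice. Reply with a single JSON object."
)


def build_vision_payload(image_bytes: bytes, mime: str = "image/jpeg",
                         model: str = "pixtral-12b-2409") -> dict:
    """Build a chat request carrying the prompt and the image as a base64 data URL."""
    data_url = f"data:{mime};base64,{base64.b64encode(image_bytes).decode('ascii')}"
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": PROMPT},
                {"type": "image_url", "image_url": data_url},
            ],
        }],
        # Ask for JSON so the reply can go straight into json.loads.
        "response_format": {"type": "json_object"},
    }


def extract(image_path: str) -> dict:
    """Send one invoice image and parse the model's JSON answer."""
    with open(image_path, "rb") as fh:
        payload = build_vision_payload(fh.read())
    req = urllib.request.Request(
        MISTRAL_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ['MISTRAL_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return json.loads(body["choices"][0]["message"]["content"])
```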

Reference: