I’m building out some automations for a client’s self-hosted n8n instance, and I’ve come across a super weird problem.

For background, I’m an experienced workflow developer with ~50 production n8n workflows/agents under my belt, and I have a number of Telegram-based n8n AI agents that I use on my own self-hosted instances on a daily basis.

I have a very simple sample workflow that is working, where I get a message from Telegram and reply with a Telegram message.

This is working as expected:

However, as soon as I add an AI Agent node into the mix, it errors out:

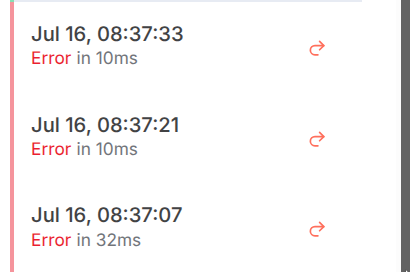

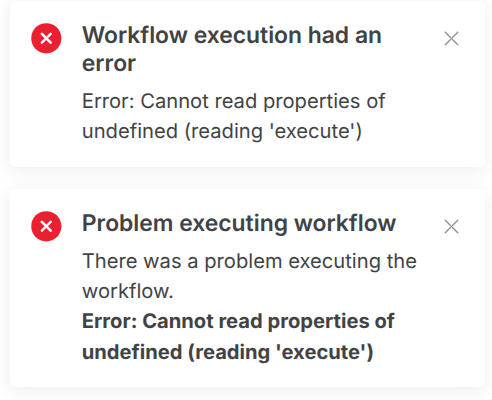

Every time I execute this workflow, it shows as failing instantly, before the trigger node even finishes firing, with an “Error: Cannot read properties of undefined (reading 'execute')” error. See below:

I’m pretty perplexed about this one. Haven’t experienced something similar on any of the other n8n instances or Telegram bots I’ve worked on.
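For anyone less familiar with this class of error: the message itself is the generic JavaScript TypeError thrown when code reads a property from an undefined value. Here is a minimal sketch of how it can arise, assuming a hypothetical lookup table of node implementations. The names `registry`, `runNode`, and `tryRun` are made up for illustration and are not n8n's actual internals, and the exact wording of the message depends on the JavaScript engine.

```typescript
// Hypothetical registry mapping node type names to implementations.
// Illustrative only: this is NOT how n8n is actually structured.
interface NodeImpl {
  execute(input: string): string;
}

const registry: Record<string, NodeImpl | undefined> = {
  telegramTrigger: { execute: (input) => `received: ${input}` },
};

function runNode(typeName: string, input: string): string {
  const impl = registry[typeName];
  // No guard here: if the type was never registered, `impl` is undefined
  // and reading `.execute` from it throws a TypeError.
  return (impl as NodeImpl).execute(input);
}

// Runs a node and returns either its output or the error message.
function tryRun(typeName: string): string {
  try {
    return runNode(typeName, "hello");
  } catch (err) {
    return err instanceof Error ? err.message : String(err);
  }
}
```

Calling `tryRun("telegramTrigger")` returns the node's output, while `tryRun("aiAgent")` returns the engine's TypeError message instead, because nothing is registered under that name. This only shows the general shape of the failure; it doesn't say which lookup is coming back undefined in my case.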

For debugging, I tried an AI Agent node with just the “Chat Trigger”, and it works as expected.

The Telegram nodes combined with an OpenAI “Message a model” node also work fine.

So it’s something about how the AI Agent Node is interacting with the Telegram nodes.

The weirdest part is that the same error happens even if the AI Agent node is disconnected from the Telegram nodes and is also deactivated. For example, the workflow below fails in the same way.

Any help or insight would be greatly appreciated!!