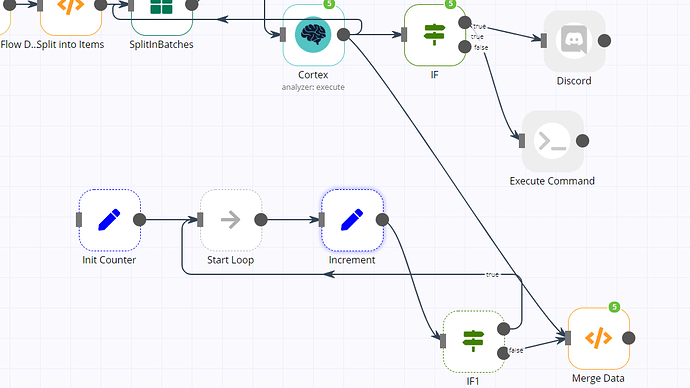

Hello. In a node I’m receiving multiple inputs at the same time (for example, 5 “data of execution”, shown as 5 in the green number). I want to append all of these inputs into one array. How can I do that?

Hey @Bo_Wyatt!

You can refer to the example shared in this post: Merging items after "split in batches" - #2 by jan. Connect the node with the Function node. You will have to modify some values in the Function node.

Hey @Bo_Wyatt!

You only need the Function node. You will have to modify the code snippet in it, specifically line 6, `const items = $items("Increment", 0, counter).map(item => item.json);`, based on the output you receive.

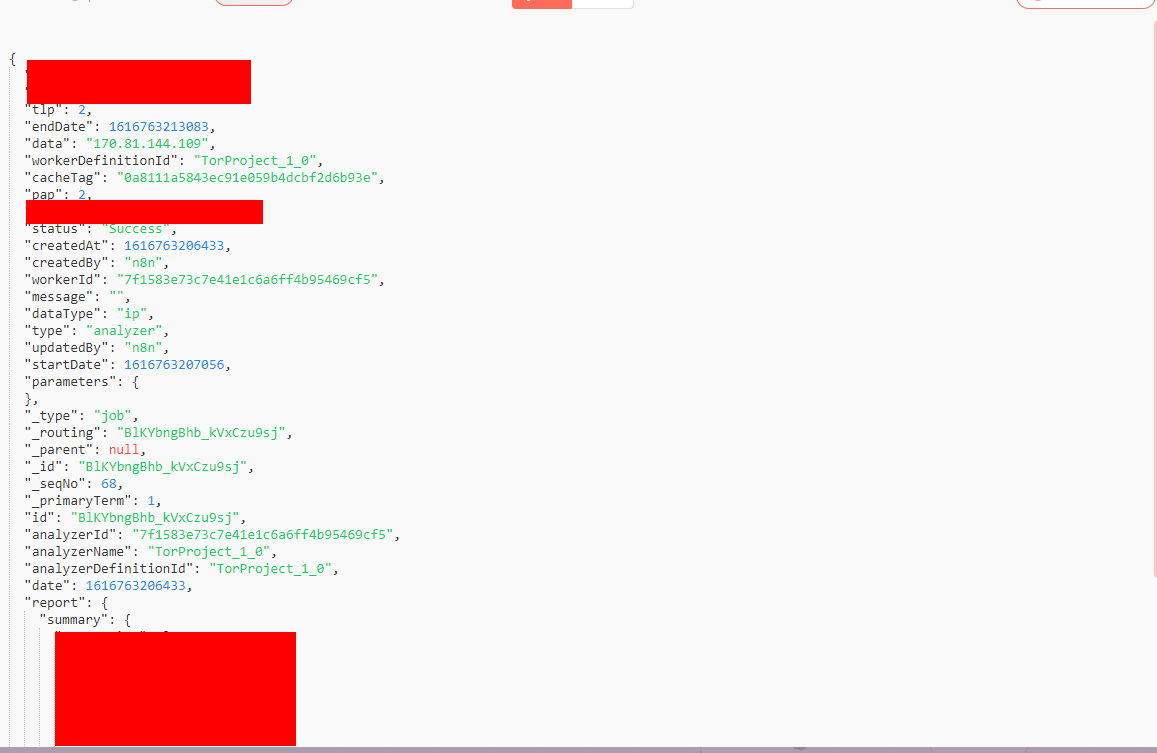

Hello. What kind of modification? The input data looks like this. Do I need to delete all the copied nodes except the Function one? Thanks for the reply.

OK, I think I’ve figured it out. The last execution stores all my items. I’ve added an IF node that only lets the output through when the length is the desired one.

Is there a better workaround for that?

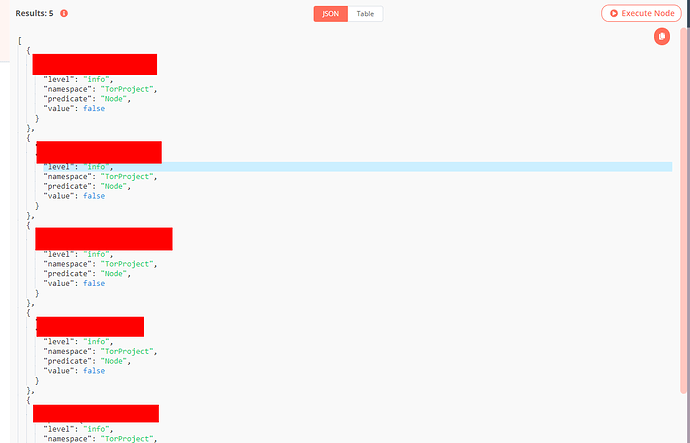

OK, now I have another problem. The output of the Merge Data node is a list with one item (an array), in this case allData. So when I use the traditional “Make Binary”, the item at position 0 is that array, and all items are written to a file. But I’m using an extra step to gather only the info I need, as shown in the picture. How can I export the array shown in the picture to a file?
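A minimal sketch of one way to turn such an array into a single item with binary data, in the spirit of the usual “Make Binary” Function node. The helper name `toBinaryItem`, the binary property name `data`, and the file name `export.json` are assumptions for illustration, not something taken from this workflow.

```javascript
// Hypothetical helper: wrap an array of records into one n8n-style item
// whose binary property holds the array serialized as JSON.
function toBinaryItem(records, fileName = 'export.json') {
  // Serialize the whole array, pretty-printed for readability.
  const jsonText = JSON.stringify(records, null, 2);
  return {
    json: {},
    binary: {
      // `data` is an assumed property name; a file-writing node would
      // need to be pointed at the same name.
      data: {
        data: Buffer.from(jsonText).toString('base64'),
        mimeType: 'application/json',
        fileName,
      },
    },
  };
}

// Possible use inside a Function node (assumes the filtered array
// is already available in a variable called `filtered`):
// return [toBinaryItem(filtered)];
```

Returning a single item this way would mean the file receives only the filtered array rather than every merged record.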

Hey @Bo_Wyatt,

Is your previous issue resolved? Were you able to merge the items?

Yes, I was able to merge the items. The last item of the batch is the one that contains all the items. I’ve just used an IF node to let the data through when the length equals the desired one.

I have another question on this. The length is not predictable, so how can I output only the full list (the one that contains all the items)?

You can use the `.length` property. So your expression in the IF node might look like `$json['KEY'].length`. You will have to modify this based on the output you get from the previous node and the length you want to check for.

But I don’t know the length to compare against; it’s unpredictable, since I have another IF node earlier in the workflow. For example, on one run “Merge Data” could receive 5 items, and on another run 10.

You can use a try/catch: when it errors, it means all the items were processed. Just adjust it with your parameters.

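```javascript
const allData = [];
let counter = 0;

do {
  try {
    // Read the items produced by run number `counter` of the node.
    const items = $items("Get All Adastacks Users", 0, counter).map(item => item.json);
    allData.push.apply(allData, items[0].records);
  } catch (error) {
    // An error here means there are no more runs: return everything collected.
    const response = allData.map(data => ({ json: data }));
    return response;
  }
  counter++;
} while (true);
```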

That does the same thing. It outputs one item per item processed, and the last item has all the items appended. I don’t know the expected length beforehand, so the simplest way would be to output only the last item of the “Data of Execution” batch.

Did you figure this out?

Not yet, I still can’t figure out how to get the last item of a node (i.e. the last “Data of Execution”). Maybe try another approach? Consider that I need to append all the items to a file.

Ah, then when the catch is triggered, you have the last index.

```javascript
const allData = [];
let counter = 0;

do {
  try {
    // Read the items produced by run number `counter` of the node.
    const items = $items("Get All Adastacks Users", 0, counter).map(item => item.json);
    allData.push.apply(allData, items[0].records);
  } catch (error) {
    // counter - 1 is the index of the last run that returned data.
    const items = $items("Get All Adastacks Users", 0, counter - 1).map(item => item.json);
    const response = allData.map(data => ({ json: data }));
    return response;
  }
  counter++;
} while (true);
```

Where do I have the index? In which variable? I can’t test it right now.

The index is `counter - 1`, but only within the catch.
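To make the location of that index concrete, here is a small sketch of the same loop written as a function that returns the last run index alongside the merged data. The function name `collectAllRuns`, the `getItems` parameter (a stand-in for `$items`), and the `maxRuns` safety limit are assumptions for illustration; like the code above, it assumes the accessor throws once the run index goes past the last run and that each run carries a `records` array on its first item.

```javascript
// Hypothetical helper: gather `records` from every run of a node.
// `getItems(nodeName, outputIndex, runIndex)` stands in for n8n's `$items`.
function collectAllRuns(getItems, nodeName, maxRuns = 1000) {
  const allData = [];
  let counter = 0;

  // `maxRuns` is an assumed guard so the loop cannot spin forever
  // if the accessor never throws.
  while (counter < maxRuns) {
    try {
      const items = getItems(nodeName, 0, counter).map(item => item.json);
      allData.push(...items[0].records);
    } catch (error) {
      // No run exists at `counter`, so the last valid run is counter - 1.
      break;
    }
    counter++;
  }

  return { lastRunIndex: counter - 1, allData };
}

// Possible use inside a Function node:
// const { allData } = collectAllRuns($items, "Get All Adastacks Users");
// return [{ json: { allData } }];
```

Returning one item that wraps `allData` keeps the merged list together for later nodes. It does not by itself change how many times the Function node executes.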

That seems to be the problem. Please consider that my goal is to obtain the full list of merged items. I need to use other nodes after merging the items, like “Write to File”. How can this help? Maybe by appending an extra variable to the returned JSON? Please consider that I need to have only the full list as the input to my other nodes.