Describe the problem/error/question

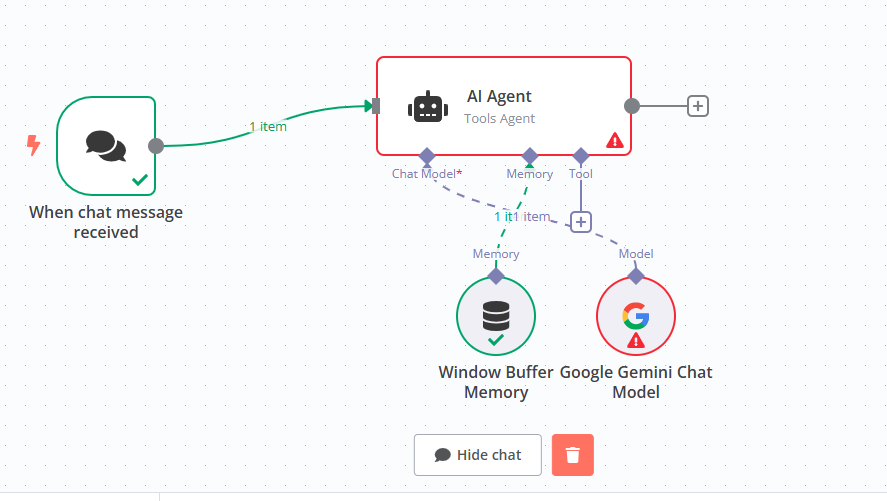

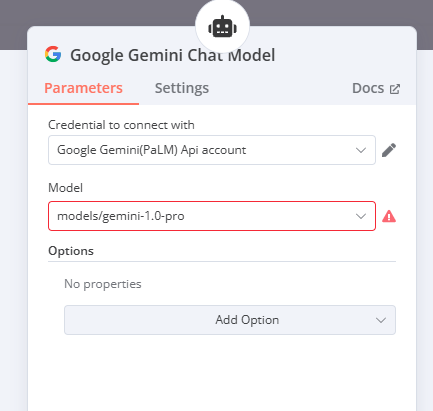

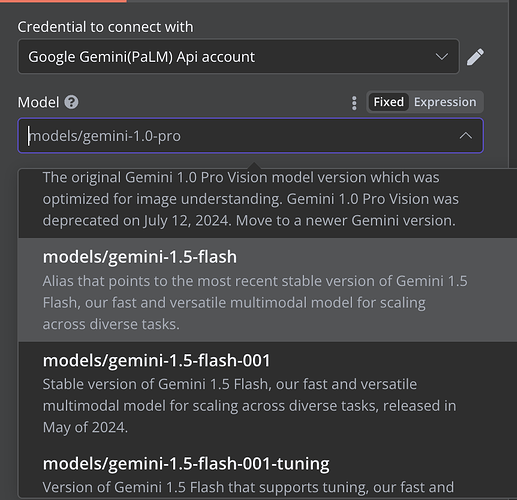

I am just starting my n8n journey, but I am getting confused and frustrated because I am unable to connect any of my preferred chat models. I tried AWS Bedrock first and saw that there is currently an error that will not be fixed anytime soon, so I moved to Google Gemini. The chat model node says it is connected, but when I try to send a query through, an error pops up.

What is the error message (if any)?

[GoogleGenerativeAI Error]: Failed to parse stream

n8n version

1.81.4 (Self Hosted)

Time

3/12/2025, 8:47:51 PM

Error cause

{}

Please share your workflow

{
  "nodes": [
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [
        -540,
        -240
      ],
      "id": "8bd0f1d9-d45f-4079-8182-503df2e50f29",
      "name": "When chat message received",
      "webhookId": "4bf24b83-8b7e-4ff4-9e37-0dbabaf1f755"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.agent",
      "typeVersion": 1.7,
      "position": [
        -220,
        -280
      ],
      "id": "c4ef33ef-e0e2-4200-ac18-89627f88fa45",
      "name": "AI Agent"
    },
    {
      "parameters": {},
      "type": "@n8n/n8n-nodes-langchain.memoryBufferWindow",
      "typeVersion": 1.3,
      "position": [
        -140,
        -60
      ],
      "id": "d417dfa4-c356-47b0-94e8-2bab676cf79d",
      "name": "Window Buffer Memory"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatGoogleGemini",
      "typeVersion": 1,
      "position": [
        -280,
        -60
      ],
      "id": "509704b5-e8e7-40fa-ac8d-c9a51cd49985",
      "name": "Google Gemini Chat Model",
      "credentials": {
        "googlePalmApi": {
          "id": "4s8xVHb9YwCCDjrN",
          "name": "Google Gemini(PaLM) Api account"
        }
      }
    }
  ],
  "connections": {
    "When chat message received": {
      "main": [
        [
          {
            "node": "AI Agent",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Window Buffer Memory": {
      "ai_memory": [
        [
          {
            "node": "AI Agent",
            "type": "ai_memory",
            "index": 0
          }
        ]
      ]
    },
    "Google Gemini Chat Model": {
      "ai_languageModel": [
        [
          {
            "node": "AI Agent",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "213d3ef8f96a73b841b988e1a098566d2d8dc9aefb70ad481e1843df56108f02"
  }
}
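
For anyone copying the workflow above, here is a minimal Python sketch (not part of n8n) that loads a workflow export and lists which nodes feed into which. It is only meant as a sanity check that the chat trigger, memory, and Gemini model are all wired to the AI Agent. The helper names `normalize_quotes` and `summarize_connections` are my own, hypothetical names, and the inline sample is a trimmed copy of the workflow that keeps only node names and connections. The quote normalization is there in case the forum editor turned the JSON quotes into typographic ones.

```python
import json


def normalize_quotes(text: str) -> str:
    """Replace typographic double quotes with straight ones so json.loads accepts the text."""
    return text.replace("\u201c", '"').replace("\u201d", '"')


def summarize_connections(workflow: dict) -> list:
    """Return (source node, connection type, target node) for every connection.

    Raises ValueError if a connection points at a node name that is not
    present in the workflow's "nodes" list.
    """
    known = {node["name"] for node in workflow["nodes"]}
    edges = []
    for source, outputs in workflow.get("connections", {}).items():
        for conn_type, branches in outputs.items():
            for branch in branches:
                for target in branch or []:
                    if target["node"] not in known:
                        raise ValueError(f"unknown target node: {target['node']}")
                    edges.append((source, conn_type, target["node"]))
    return edges


# Trimmed-down copy of the workflow (names and connections only).
raw = """
{
  "nodes": [
    {"name": "When chat message received"},
    {"name": "AI Agent"},
    {"name": "Window Buffer Memory"},
    {"name": "Google Gemini Chat Model"}
  ],
  "connections": {
    "When chat message received": {
      "main": [[{"node": "AI Agent", "type": "main", "index": 0}]]
    },
    "Window Buffer Memory": {
      "ai_memory": [[{"node": "AI Agent", "type": "ai_memory", "index": 0}]]
    },
    "Google Gemini Chat Model": {
      "ai_languageModel": [[{"node": "AI Agent", "type": "ai_languageModel", "index": 0}]]
    }
  }
}
"""

workflow = json.loads(normalize_quotes(raw))
edges = summarize_connections(workflow)
for source, conn_type, target in edges:
    print(f"{source} --{conn_type}--> {target}")
```

If all three sub-nodes show up pointing at `AI Agent`, the wiring itself is fine and the problem is more likely in the credentials or the model call.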

Share the output returned by the last node

Information on your n8n setup

- **n8n version:** 1.81.4 (Self Hosted)
- **Database (default: SQLite):** Default
- **n8n EXECUTIONS_PROCESS setting (default: own, main):** Default
- **Running n8n via (Docker, npm, n8n cloud, desktop app):** Docker
- **Operating system:** Ubuntu