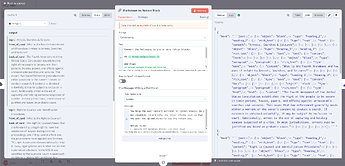

I’m trying to get Markdown out of any of the AI models (ChatGPT, Claude, etc.) via their APIs. The output below is the closest I’ve gotten, but when I put it into Notion it isn’t rendered as Markdown. It just shows up as plain text.

I’m not sure whether this is a limitation of the AI, n8n, or LangChain, or whether I’m doing something wrong.

I found this GitHub thread, which semi-indicates it’s a LangChain Tool limitation: Getting markdown formatting with GPT3.5 · langchain-ai/langchain · Discussion #12750 · GitHub

Any ideas?

Please share your workflow

Share the output returned by the last node

observation:{\n “id”: “msg_01WphmfsoxYy8cW3VKy16m39”,\n “type”: “message”,\n “role”: “assistant”,\n “model”: “claude-3-5-sonnet-20240620”,\n “content”: [\n {\n “type”: “text”,\n “text”: "Certainly! I’ll create three Mochi-compatible flashcards on the topic of Quantization in the context of AI, specifically focusing on GPT Models & Architecture. I’ll use a variety of interactive elements and provide simple and detailed explanations for each card.\n\n##Quantization in GPT Models\n\nWhat is quantization in the context of GPT models?\n\n—\n\nQuantization reduces the precision of model parameters.\n\n### Simple Analogy\nQuantization is like rounding prices to the nearest dollar instead of using cents.\n\n### Simple Explanation\nQuantization in GPT models reduces the precision of numbers used to represent weights and activations, making the model smaller and faster.\n\n### Detailed Explanation\nQuantization in GPT models is a technique that reduces the number of bits used to represent model parameters (weights and activations). It converts high-precision floating-point numbers (e.g., 32-bit) to lower-precision formats (e.g., 8-bit integers). This process significantly reduces model size and computational requirements, enabling faster inference and deployment on resource-constrained devices. However, it may result in a slight decrease in model accuracy.\n\n<input value="Reduces precision|Decreases model size|Speeds up inference" inline> are the main benefits of quantization in GPT models.\n\n##Types of Quantization\n\nWhat are the main types of quantization used in GPT models?\n\n—\n\nThe main types are:\n1.
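One thing that might be worth ruling out: in the output above, the Markdown sits inside the `text` field of a JSON string with escaped `\n` sequences, and some headings (e.g. `##Quantization`) have no space after the `#` markers, which CommonMark requires for a heading. Below is a minimal sketch, assuming the observation is valid JSON shaped like the output above, that pulls out the text and adds the missing space. The function names and the sample string are hypothetical, and whether this is what stops Notion from rendering the text is an assumption, not something confirmed here.

```python
import json
import re


def extract_markdown(observation: str) -> str:
    """Return the first text block from a message shaped like the output above."""
    # json.loads turns the escaped "\n" sequences into real newlines.
    message = json.loads(observation)
    for block in message.get("content", []):
        if block.get("type") == "text":
            return block["text"]
    raise ValueError("no text block found in the response")


def normalize_headings(markdown: str) -> str:
    """Add a space after 1-6 leading '#' characters when it is missing."""
    # Caveat: a line that merely starts with something like "#tag" is also changed.
    return re.sub(r"^(#{1,6})(?=[^#\s])", r"\1 ", markdown, flags=re.MULTILINE)


# Hypothetical, shortened stand-in for the real observation string.
SAMPLE = (
    '{"type": "message", "content": [{"type": "text", "text": '
    '"##Quantization in GPT Models\\n\\n### Simple Analogy\\nRounding prices."}]}'
)

if __name__ == "__main__":
    print(normalize_headings(extract_markdown(SAMPLE)))
```

In n8n the same two steps could presumably go in a Code node between the AI node and the Notion node. That placement is a suggestion only.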

Information on your n8n setup

- **n8n version:** 1.56.2

- **Database (default: SQLite):** Postgres

- **n8n EXECUTIONS_PROCESS setting (default: own, main):**

- **Running n8n via (Docker, npm, n8n cloud, desktop app):** Docker

- **Operating system:** Linux