Hello community!

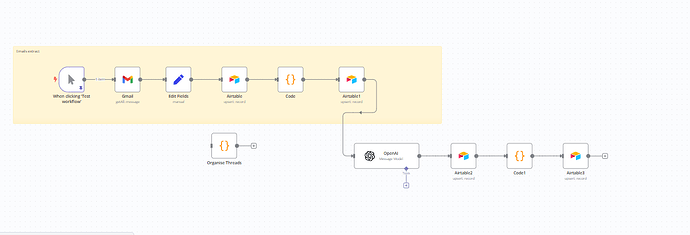

I have been racking my brain over a project I'm working on. In essence, I am trying to build a knowledge base by extracting all the knowledge gathered over 3 years of email exchanges. So far I need to:

- Download all emails from Gmail (around 5K)

- Read the content of each email and extract the questions and the relevant answers to each question

- Push the questions and answers into a vector database so they can be used by a chat agent

Issues faced:

- I have been able to push around 30 emails into multiple Airtable tables, but I am running into problems with memory and the size of the operation. I got this far by grouping the emails into threads and having the AI look at each thread rather than at individual emails.

- The output contains the data that I want, but I now have to clean it again to split the sender, receiver, and questions with answers into separate columns
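To make the per-column cleanup concrete, here is a minimal Python sketch of the shape I am after: one row per question/answer pair, with the sender and receiver repeated on each row. The input structure and the field names (`sender`, `receiver`, `qa_pairs`, `question`, `answer`) are assumptions for illustration only; the real AI output may look different.

```python
from typing import Dict, List


def flatten_thread(thread: Dict) -> List[Dict[str, str]]:
    """Turn one extracted thread into one row per question/answer pair.

    The field names used here are hypothetical, not the actual AI output.
    """
    rows = []
    for pair in thread.get("qa_pairs", []):
        rows.append({
            "sender": thread.get("sender", ""),
            "receiver": thread.get("receiver", ""),
            "question": pair.get("question", ""),
            "answer": pair.get("answer", ""),
        })
    return rows


# Made-up example thread, only to show the input and output shapes.
example_thread = {
    "sender": "customer@example.com",
    "receiver": "support@example.com",
    "qa_pairs": [
        {"question": "How do I reset my password?",
         "answer": "Use the reset link on the login page."},
        {"question": "Can I change my email address?",
         "answer": "Yes, under account settings."},
    ],
}

rows = flatten_thread(example_thread)
for row in rows:
    print(row)
```

Each resulting dictionary would map to one table row, with one column per key.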

I thought of using a loop, but I can't get it to keep running through all 5,000 emails (this is down to my lack of technical knowledge).
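The loop I have in mind would work through the emails in small fixed-size batches, so that only one batch is held in memory at a time. Below is a minimal Python sketch of that idea, not n8n workflow code: the batch size of 50, the `thread-N` identifiers, and the placeholder processing step are all assumptions for illustration. As far as I understand, n8n's Loop Over Items (Split in Batches) node is meant for this kind of pattern.

```python
from typing import Iterator, List, Sequence


def batches(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each with at most `size` entries."""
    if size <= 0:
        raise ValueError("size must be a positive integer")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


# Hypothetical identifiers standing in for the ~5,000 emails or threads.
thread_ids = [f"thread-{i}" for i in range(5000)]

processed = 0
batch_count = 0
for batch in batches(thread_ids, 50):
    # Placeholder: fetch, extract, and store only this batch here,
    # then let it go before moving on to the next one.
    processed += len(batch)
    batch_count += 1

print(f"Processed {processed} items in {batch_count} batches")
```

With these assumed numbers the loop ends by itself once every batch has been handled, so it never needs to run indefinitely.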

If you have any idea on how I can move past the error and lack of memory, that would be really helpful!

Thanks

Information on your n8n setup

- n8n version: 1.75.2

- Database (default: SQLite):

- n8n EXECUTIONS_PROCESS setting (default: own, main):

- Running n8n via (Docker, npm, n8n cloud, desktop app): n8n cloud

- Operating system: