Why I’m Posting This

I recently ran a large migration of job records from one job-management platform to another.

Each job had 150–200 photos, videos, and documents attached as binary data.

At first I left n8n’s binary-data mode at the default (in-memory) setting, and every bulk import run would crash after about 50 files because the server RAM was exhausted.

I needed to process ~2,000 jobs (roughly 300,000–400,000 attachments at 150–200 per job), so that wasn’t sustainable.

What I Discovered

By default, n8n keeps all binary data in memory until the execution finishes, then off-loads it.

For small payloads that’s fine, but large batches (hundreds of images/videos per workflow) can easily exhaust the available RAM, which is exactly what happened on my 8 GB VPS.

Setting the environment variable:

N8N_DEFAULT_BINARY_DATA_MODE=filesystem

tells n8n to write binary data to disk instead of keeping it in RAM, and that made the workflow stable.
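For a Docker Compose setup like mine, one way to apply the variable to both the main instance and the queue workers is a Compose override file. This is a minimal sketch under assumptions: the service names `n8n` and `n8n-worker` are hypothetical (rename them to match your own `docker-compose.yml`), and it relies on Docker Compose automatically merging a `docker-compose.override.yml` that sits next to `docker-compose.yml` (if your base file is `compose.yaml`, name the override `compose.override.yaml` instead).

```shell
#!/usr/bin/env bash
# Sketch: write a Compose override that enables filesystem binary-data mode.
# ASSUMPTION: services are named "n8n" (main) and "n8n-worker" (queue worker);
# rename them to match your docker-compose.yml.
set -euo pipefail

override_file="docker-compose.override.yml"

# Refuse to clobber an existing override file; merge the variable by hand instead.
if [ -e "$override_file" ]; then
  echo "$override_file already exists; add the variable to it manually." >&2
  exit 1
fi

# Both the main process and every worker need the same binary-data mode.
cat > "$override_file" <<'EOF'
services:
  n8n:
    environment:
      - N8N_DEFAULT_BINARY_DATA_MODE=filesystem
  n8n-worker:
    environment:
      - N8N_DEFAULT_BINARY_DATA_MODE=filesystem
EOF

echo "Wrote $override_file; apply it with: docker compose up -d"
```

Running `docker compose up -d` afterwards recreates the services whose configuration changed, so the new mode takes effect on the next execution.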

My Setup

- n8n: self-hosted (Docker) on Ubuntu

- Executions: queue-mode with workers

- VPS: 8 GB RAM / 100 GB disk / 4 vCPU

- Workload: bulk import with heavy attachments

n8n’s docs advise against using filesystem mode in queue mode, because workers usually run on different machines that don’t share a volume.

But in my case all workers run on the same host and share the same Docker volume, so it works fine.

Reference: Queue-mode + binary-data-mode discussion
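Before relying on filesystem mode in queue mode, it’s worth confirming that the main instance and every worker really mount the same volume. A minimal sketch, assuming the binary data lives on a named Docker volume (here `n8n_n8n_storage`, the volume from my setup) and that you pass the real container names as listed by `docker ps --format '{{.Names}}'`. The helper name `check_shared_volume` is my own, and it simply uses `docker inspect` with a Go template over `.Mounts` to list each container’s volume names.

```shell
#!/usr/bin/env bash
# Sketch: confirm that every n8n container mounts the same named volume.
# ASSUMPTION: binary data sits on a named Docker volume (e.g. n8n_n8n_storage);
# pass your real container names as shown by `docker ps`.
set -euo pipefail

# Usage: check_shared_volume VOLUME CONTAINER [CONTAINER...]
# Returns non-zero if any container lacks the volume or cannot be inspected.
check_shared_volume() {
  local volume="$1"; shift
  local container mounts missing=0
  for container in "$@"; do
    # One mounted volume name per line (bind mounts print an empty line).
    if ! mounts="$(docker inspect -f '{{range .Mounts}}{{println .Name}}{{end}}' "$container" 2>/dev/null)"; then
      echo "MISSING: cannot inspect $container" >&2
      missing=1
      continue
    fi
    # Exact whole-line match against the expected volume name.
    if grep -qx -- "$volume" <<< "$mounts"; then
      echo "ok:      $container mounts $volume"
    else
      echo "MISSING: $container does not mount $volume" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Example call with hypothetical container names: `check_shared_volume n8n_n8n_storage n8n-n8n-1 n8n-n8n-worker-1`. If any line says MISSING, filesystem mode is not safe for that worker.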

Observations on Memory vs Filesystem Mode

After switching to filesystem mode (N8N_DEFAULT_BINARY_DATA_MODE=filesystem), the memory spike disappeared — binary data was streamed directly to disk during processing.

This made the workflow stable even when handling thousands of media files.

Deleting Old Binary Data to Free Storage

Instead of setting up a cron job, I manually deleted the binary data once each migration stage was complete.

- Stop your n8n instance so no files are in use:

docker compose down

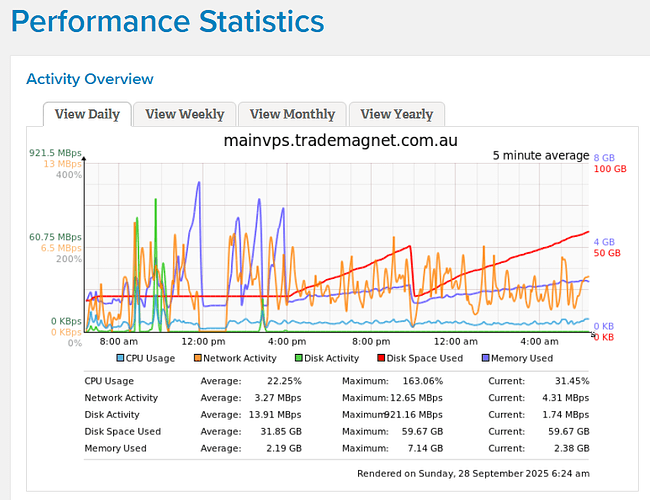

- The performance chart above shows how the purple line (memory) stayed low once I switched modes — the spike dropped dramatically.

- Delete the target execution folder (replace placeholders with your actual IDs):

rm -rfv /var/lib/docker/volumes/n8n_n8n_storage/_data/binary/workflows/<WORKFLOW_ID>/executions/<EXECUTION_ID>

- Or delete only the binary data inside that execution while keeping the record:

rm -rfv /var/lib/docker/volumes/n8n_n8n_storage/_data/binary/workflows/<WORKFLOW_ID>/executions/<EXECUTION_ID>/binary_data/*

- Restart n8n:

docker compose up -d
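The manual steps above work, but an empty or mistyped ID in an `rm -rf` path can delete far more than intended. A minimal sketch of a guarded helper, assuming the folder layout shown above; the function name `purge_execution_binaries` and the `N8N_BINARY_ROOT` override are hypothetical names of my own, not part of n8n. It only lists what it would delete unless you pass `--force`, and it only ever touches the contents of one execution’s `binary_data` folder.

```shell
#!/usr/bin/env bash
# Sketch of a guarded cleanup for one execution's binary data.
# ASSUMPTIONS: the folder layout described in this post, and a hypothetical
# N8N_BINARY_ROOT override (defaults to the Docker volume path used above).
set -euo pipefail

N8N_BINARY_ROOT="${N8N_BINARY_ROOT:-/var/lib/docker/volumes/n8n_n8n_storage/_data/binary}"

# Usage: purge_execution_binaries WORKFLOW_ID EXECUTION_ID [--force]
# Without --force it only prints the files it would remove (dry run).
purge_execution_binaries() {
  local workflow_id="${1:-}" execution_id="${2:-}" mode="${3:-}"
  local id

  # Reject empty IDs and anything that could escape the tree ("/" or "..").
  for id in "$workflow_id" "$execution_id"; do
    if [[ -z "$id" || "$id" == *"/"* || "$id" == *".."* ]]; then
      echo "invalid id: '$id'" >&2
      return 1
    fi
  done

  local target="$N8N_BINARY_ROOT/workflows/$workflow_id/executions/$execution_id/binary_data"
  if [[ ! -d "$target" ]]; then
    echo "not found: $target" >&2
    return 1
  fi

  if [[ "$mode" == "--force" ]]; then
    # Delete everything inside binary_data but keep the folder itself.
    find "$target" -mindepth 1 -delete
    echo "purged: $target"
  else
    find "$target" -mindepth 1 -print
    echo "dry run; re-run with --force to delete" >&2
  fi
}
```

With n8n stopped, run `purge_execution_binaries <WORKFLOW_ID> <EXECUTION_ID>` once to review the list, then repeat with `--force`.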

Key Takeaways

- Default (memory) mode is fine for small jobs but risky for big media batches.

- Filesystem mode is a lifesaver when migrating or dealing with large attachments — provided your workers share the same storage volume.

- Queue-mode caution: if workers are on separate servers, you’ll need a shared network volume or S3-style external storage.

- Pruning: remember to periodically clean the binary-data folder or you’ll fill up the disk.
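If you would rather automate the pruning than do it by hand, here is a rough sketch of an age-based cleanup. It assumes the same `workflows/<WORKFLOW_ID>/executions/<EXECUTION_ID>` layout and treats a folder’s modification time as a stand-in for the execution’s age, which is my assumption rather than anything n8n guarantees. The `prune_old_executions` name and the `N8N_BINARY_ROOT` variable are hypothetical. As with the manual steps, run it while no imports are in progress.

```shell
#!/usr/bin/env bash
# Sketch: prune n8n execution folders older than DAYS days.
# ASSUMPTIONS: layout <root>/workflows/<WORKFLOW_ID>/executions/<EXECUTION_ID>/,
# and directory mtime as a rough stand-in for when the execution ran.
set -euo pipefail

N8N_BINARY_ROOT="${N8N_BINARY_ROOT:-/var/lib/docker/volumes/n8n_n8n_storage/_data/binary}"

# Usage: prune_old_executions DAYS [--force]   (dry run unless --force)
prune_old_executions() {
  local days="${1:-}" mode="${2:-}"
  if ! [[ "$days" =~ ^[0-9]+$ ]]; then
    echo "usage: prune_old_executions DAYS [--force]" >&2
    return 1
  fi

  local workflows="$N8N_BINARY_ROOT/workflows"
  if [[ ! -d "$workflows" ]]; then
    echo "not found: $workflows" >&2
    return 1
  fi

  # Depth 3 below workflows/ is <WORKFLOW_ID>/executions/<EXECUTION_ID>.
  # find's -mtime +DAYS matches folders last modified more than DAYS days ago.
  find "$workflows" -mindepth 3 -maxdepth 3 -type d \
       -path "$workflows/*/executions/*" -mtime +"$days" -print0 |
    while IFS= read -r -d '' dir; do
      if [[ "$mode" == "--force" ]]; then
        rm -rf -- "$dir"
        echo "removed: $dir"
      else
        echo "would remove: $dir"
      fi
    done

  # Report what is left so disk usage stays visible.
  du -sh "$workflows"
}
```

Run `prune_old_executions 30` first to see which folders would go, then `prune_old_executions 30 --force`; the same call could later be scheduled from cron.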

Hope This Helps

This little change saved me hours of failed runs and VPS crashes. If you’re self-hosting and see your memory pegged at 100% during heavy binary workflows, give filesystem mode a try — just be mindful of the storage requirements.