Hey man, sounds like your issue isn’t with Pinecone itself — it’s more about how n8n handles memory when looping through large volumes.

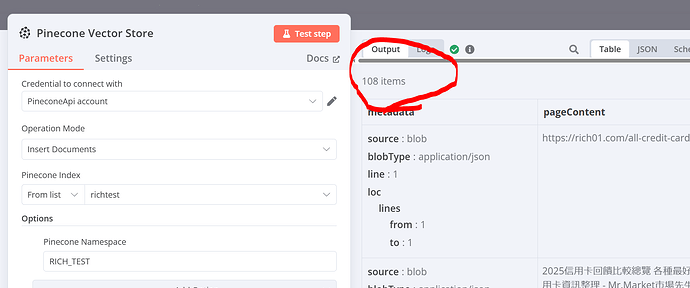

When you’re processing a big batch (like 20k+ items), n8n starts stacking up each node’s output in memory. Even if Pinecone accepts the inserts, memory usage can keep growing until the workflow crashes or freezes.

Here’s what can help right away:

Split into smaller batches

Use the SplitInBatches node to send chunks of 100 or 500 items. This helps avoid memory overload.
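To make the batch sizes concrete, here’s a rough Python sketch of the chunking that batching performs. It isn’t n8n code, and `chunked` is a hypothetical helper. It only shows that 20,000 items at 500 per batch become 40 small units of work instead of one huge one.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


# 20,000 stand-in items split into batches of 500.
batches = list(chunked(range(20_000), 500))
print(len(batches), len(batches[0]))  # 40 500
```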

Don’t pass heavy data forward

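If you don’t need Pinecone’s response after the insert, strip it before the next node. A NoOp node won’t do that on its own, since it passes its input through unchanged. An Edit Fields (Set) node that keeps only a small field such as the ID, or a Code node that returns a minimal item, is what actually keeps things light.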
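As an illustration of what "light" means here, this is a minimal Python sketch of trimming an item down before passing it on. The field names (`id`, `values`, `metadata`) and the `slim` helper are assumptions for the example, not something taken from your workflow.

```python
def slim(item: dict) -> dict:
    """Keep only the vector ID; drop the embedding and metadata."""
    return {"id": item["id"]}


# A stand-in item carrying a 1536-dimension embedding.
item = {
    "id": "vec_001",
    "values": [0.1] * 1536,
    "metadata": {"source": "doc.pdf"},
}
print(slim(item))  # {'id': 'vec_001'}
```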

Disable full execution data saving (if self-hosted)

In your environment settings, set:

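EXECUTIONS_DATA_SAVE_ON_SUCCESS=none

EXECUTIONS_DATA_SAVE_ON_ERROR=none

Both variables take all or none rather than true/false. With these set, n8n won’t persist the full execution data to its database after large runs. Keep in mind that setting the error one to none also leaves you nothing to inspect when a run fails.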

Need to delete vectors after each loop?

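Pinecone doesn’t auto-purge, but you can delete vectors by ID from the HTTP Request node. Note that Pinecone’s delete operation is a POST to your index host’s /vectors/delete endpoint, not an HTTP DELETE, with the vector IDs in the JSON body: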

{ "ids": ["vec_001", "vec_002"] }
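If it helps to see the request outside n8n, here’s a small Python sketch that builds it without sending anything. Pinecone’s delete-by-ID call is a POST to `/vectors/delete` on your index host. The `INDEX_HOST` and `API_KEY` values are placeholders, and `build_delete_request` is a hypothetical helper.

```python
import json
import urllib.request
from typing import List

# Placeholders: substitute your own index host and API key.
INDEX_HOST = "https://your-index-host.example"
API_KEY = "YOUR_API_KEY"


def build_delete_request(ids: List[str]) -> urllib.request.Request:
    """Build (but do not send) a delete-by-ID request."""
    body = json.dumps({"ids": ids}).encode("utf-8")
    return urllib.request.Request(
        url=f"{INDEX_HOST}/vectors/delete",
        data=body,
        method="POST",
        headers={"Api-Key": API_KEY, "Content-Type": "application/json"},
    )


req = build_delete_request(["vec_001", "vec_002"])
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```

In the HTTP Request node the same pieces map to the method, URL, header, and JSON body fields.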

To keep it clean and solid, try this too:

Handle deletion in a separate flow

Instead of deleting inside your insert loop, create a dedicated cleanup workflow. It keeps things cleaner and avoids unexpected behavior.

Add a small delay between batches

Add a Wait node set to about 1 second between batches to avoid hitting rate limits or overloading your server.
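The pacing idea looks roughly like this in plain Python. It’s a sketch, not n8n code: `run_with_delay` and its parameters are made up for the example, and the pause goes between batches only, so the first batch starts immediately.

```python
import time
from typing import Callable, Iterable, List


def run_with_delay(
    batches: Iterable[List],
    handle: Callable[[List], None],
    delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `handle` on each batch, pausing `delay_s` seconds between batches."""
    processed = 0
    for i, batch in enumerate(batches):
        if i > 0:
            sleep(delay_s)  # no pause before the first batch
        handle(batch)
        processed += 1
    return processed


seen: List[List[int]] = []
count = run_with_delay([[1, 2], [3, 4], [5]], seen.append, delay_s=0.01)
print(count, seen)  # 3 [[1, 2], [3, 4], [5]]
```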

Keep an eye on n8n’s memory usage

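If you’re running it in Docker or K8s, check whether the container is hitting its memory cap. You might need to raise the container’s memory limit and, along with it, the Node.js heap size, for example NODE_OPTIONS=--max-old-space-size=4096.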

For really heavy loads, use a queue

Push your data into Redis, SQS, or RabbitMQ instead of looping everything inside n8n. Then process it gradually — way more stable and scalable.
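Here’s the queue pattern in miniature. This sketch uses Python’s in-process `queue.Queue` purely as a stand-in for Redis, SQS, or RabbitMQ, and `drain` is a hypothetical helper. The point is that the consumer only ever holds one small batch at a time.

```python
import queue
from typing import List


def drain(q: "queue.Queue", batch_size: int) -> List:
    """Pull up to `batch_size` items off the queue without blocking."""
    batch = []
    while len(batch) < batch_size:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            break
    return batch


# Producer side: push everything onto the queue up front.
work: "queue.Queue" = queue.Queue()
for i in range(10):
    work.put({"id": f"vec_{i:03d}"})

# Consumer side: process gradually, one small batch at a time.
batch_sizes = []
while True:
    batch = drain(work, 4)
    if not batch:
        break
    batch_sizes.append(len(batch))

print(batch_sizes)  # [4, 4, 2]
```

With a real broker the producer and consumer would be separate workflows or processes, so a crash in one doesn’t lose the remaining work.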

Let me know if you want a sample flow using batches and a memory-safe setup.

Dandy