Is there a way to easily access newer models for the AWS Bedrock module?

I’m trying to add this one (full list here):

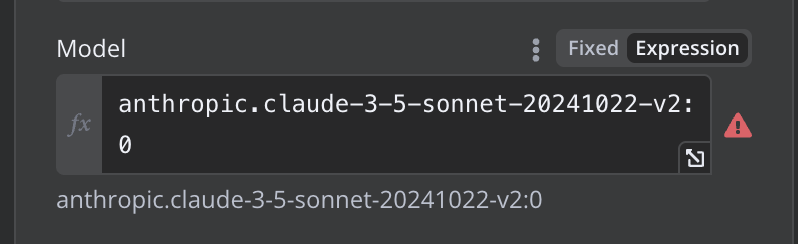

anthropic.claude-3-5-sonnet-20241022-v2:0

But it always returns:

Invocation of model ID anthropic.claude-3-5-sonnet-20241022-v2:0 with on-demand throughput isn’t supported. Retry your request with the ID or ARN of an inference profile that contains this model.

I already have access to those models on my account. The problem is that the module itself does not let me use one of these models.

Any easy solution for this?

I believe I could build a full node from scratch to access whatever model I need, but I was trying to keep things simple by using the native AWS Bedrock module.

Well, it sounds like the node requires on-demand throughput, and that model does not yet support it.

hummm so if I wanted to use an inference profile, I can’t use that default node, correct?

I’d have to set up an HTTP request and all.

If you figure out how to set up the HTTP request to Bedrock, please let me know too. I am trying to set it up for Claude 3.7 and I just can’t manage it. I am stuck with the limited set of models in the Bedrock node. Thanks!

Yeah, not easy at all. If I do figure it out I’ll let you know (but I’ll probably just stick to 3.5 for the moment).


@Constantine @brunopicinini

This should provide more help:

https://docs.anthropic.com/en/api/claude-on-vertex-ai

example curl request, that can be replicated in the HTTP node:

    MODEL_ID="claude-3-5-sonnet-v2@20241022"
    LOCATION="us-central1"        # Choose appropriate region
    PROJECT_ID="your-project-id"  # Replace with your GCP project ID

    # Anthropic models on Vertex AI are called via :rawPredict rather than :predict
    curl -X POST \
      -H "Authorization: Bearer $(gcloud auth print-access-token)" \
      -H "Content-Type: application/json" \
      "https://${LOCATION}-aiplatform.googleapis.com/v1/projects/${PROJECT_ID}/locations/${LOCATION}/publishers/anthropic/models/${MODEL_ID}:rawPredict" \
      -d '{
        "anthropic_version": "vertex-2023-10-16",
        "messages": [
          {
            "role": "user",
            "content": "Write a short poem about artificial intelligence."
          }
        ],
        "max_tokens": 1024,
        "temperature": 0.7
      }'


That’s awesome! Thanks a bunch!

Let me know if that worked! (The variables will have to be changed.)

Thanks for the reply, but this seems quite far from a working AWS cURL request. I am starting to think that it’s not possible with a plain HTTP call. The call has to be made either through the AWS CLI or with SigV4 signing, at least from what I’ve gathered. So either Claude 3.7, 3.7 Thinking, and DeepSeek have to be included in the Bedrock node, or some hacking beyond my coding abilities has to happen.
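For anyone who wants to see what "SigV4" involves, here is a minimal sketch of signing a Bedrock `InvokeModel` request using only the Python standard library. Treat it as an illustration under assumptions, not a tested integration: the function name `sign_bedrock_invoke` is made up for this example, the signing service name `bedrock`, the `bedrock-runtime.<region>.amazonaws.com` host, and the double encoding of the `:` in the model ID are assumptions about how Bedrock expects requests to be signed, and the body uses the Anthropic Messages format with `anthropic_version` set to `bedrock-2023-05-31`.

```python
import datetime
import hashlib
import hmac
import json
from urllib.parse import quote


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_bedrock_invoke(access_key, secret_key, region, model_id, body,
                        session_token=None, now=None):
    """Return (url, headers) for a SigV4-signed InvokeModel POST (sketch)."""
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = f"bedrock-runtime.{region}.amazonaws.com"
    service = "bedrock"  # assumed SigV4 signing name for the Bedrock runtime

    # ":" in the model ID is percent-encoded in the URL path, and the
    # canonical URI encodes that path once more (assumed non-S3 behaviour).
    path = f"/model/{quote(model_id, safe='')}/invoke"
    canonical_uri = quote(path, safe="/")

    to_sign = {"content-type": "application/json", "host": host,
               "x-amz-date": amz_date}
    if session_token:  # temporary credentials carry a session token
        to_sign["x-amz-security-token"] = session_token
    names = sorted(to_sign)
    canonical_headers = "".join(f"{n}:{to_sign[n]}\n" for n in names)
    signed_headers = ";".join(names)

    # Method, URI, (empty) query string, headers, signed header list, body hash.
    canonical_request = "\n".join([
        "POST", canonical_uri, "", canonical_headers, signed_headers,
        hashlib.sha256(body).hexdigest(),
    ])
    scope = f"{date_stamp}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # Derive the signing key: secret -> date -> region -> service -> terminator.
    key = ("AWS4" + secret_key).encode("utf-8")
    for part in (date_stamp, region, service, "aws4_request"):
        key = _hmac_sha256(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"),
                         hashlib.sha256).hexdigest()

    headers = {
        "Content-Type": "application/json",
        "X-Amz-Date": amz_date,
        "Authorization": (
            f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
            f"SignedHeaders={signed_headers}, Signature={signature}"
        ),
    }
    if session_token:
        headers["X-Amz-Security-Token"] = session_token
    return f"https://{host}{path}", headers


# Request body in the Anthropic Messages format used on Bedrock.
body = json.dumps({
    "anthropic_version": "bedrock-2023-05-31",
    "max_tokens": 1024,
    "messages": [{"role": "user",
                  "content": "Write a short poem about artificial intelligence."}],
}).encode("utf-8")
```

The returned URL and headers can be sent with any HTTP client together with the exact `body` bytes that were signed. The signature covers the timestamp and the body hash, so it has to be recomputed for every request and cannot simply be pasted once into a static HTTP node.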

If some tech guru figures it out, please let us know!


The provided request should work; you will just have to replace the variables.

Not for me. Maybe @brunopicinini had better luck.

Could I see how you attempted to set it up? Where did you get your api key from?

That is one of the problems, mate. I used a fresh SessionToken since I can’t find any documentation on how to generate an actual API key, like on other platforms. I saw solutions using the AWS CLI or SigV4, but I am not technically adept enough to do all that.

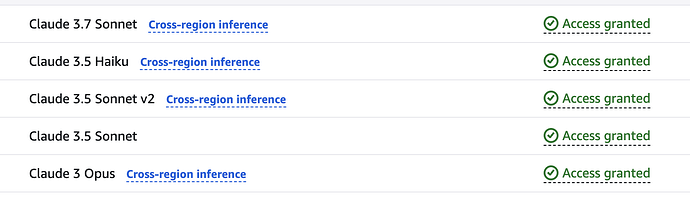

To utilize the latest AI models in AWS Bedrock, you’ll need to employ their inference profile, which essentially defines the configuration for running these models. These new models are set up for cross-region inference, allowing you to access them across different AWS regions.

Here’s how to proceed:

Navigate to the AWS Bedrock console and go to Inference and Assessment > Cross-Region Inference.

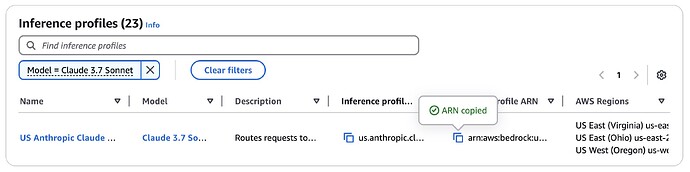

On this page, you’ll find a list of active models and their inference profiles. Locate the profile for the model you wish to use and copy its Inference Profile ARN (Amazon Resource Name). This ARN uniquely identifies the inference profile.

Next, go to the AWS Bedrock node within your workflow or application.

In the settings of this node, locate the option to specify the inference profile. Select the “fixed” mode and then paste the ARN you copied in the previous step. This ensures the node consistently uses the specified inference profile.

This process should be sufficient to enable the model. I have successfully used Sonnet 3.7 by following these steps.
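Before pasting, it can help to check that the copied value really is an inference profile ARN and to see which profile ID it contains. The sketch below assumes the usual `arn:partition:service:region:account-id:resource` layout with an `inference-profile/<profile-id>` resource; the helper name and the account number in the usage line are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceProfileArn:
    region: str
    account_id: str
    profile_id: str


def parse_inference_profile_arn(arn: str) -> InferenceProfileArn:
    """Split an inference profile ARN into its parts (assumed layout)."""
    # Split at most 5 times: the profile ID itself ends in ":0" or similar.
    parts = arn.strip().split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "bedrock":
        raise ValueError(f"not a Bedrock ARN: {arn!r}")
    region, account_id, resource = parts[3], parts[4], parts[5]
    kind, _, profile_id = resource.partition("/")
    if kind != "inference-profile" or not profile_id:
        raise ValueError(f"not an inference profile ARN: {arn!r}")
    return InferenceProfileArn(region, account_id, profile_id)


# Hypothetical account number, for illustration only.
example = parse_inference_profile_arn(
    "arn:aws:bedrock:us-east-1:123456789012:"
    "inference-profile/us.anthropic.claude-3-7-sonnet-20250219-v1:0"
)
print(example.profile_id)
```

A foundation-model ARN or a bare model ID raises `ValueError` here, which is a quick way to tell whether the wrong value was copied from the console.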

Thanks for the help @MangelMelg.

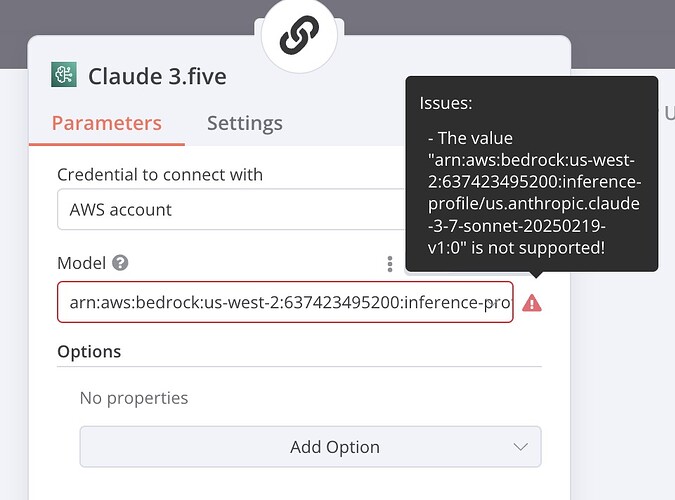

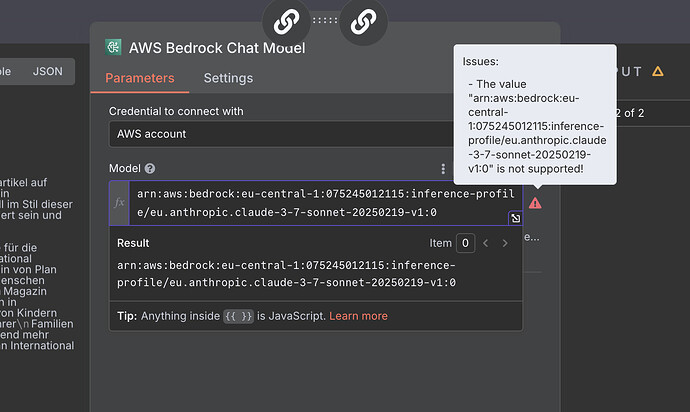

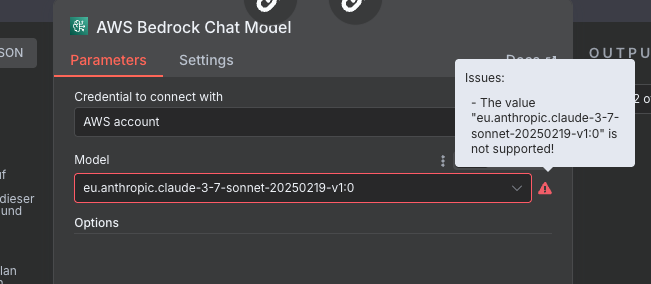

I have played with the ARN before to no avail, but I also followed your exact steps. I keep getting the same error [screenshots attached], saying that the model is not supported.

I am using Bedrock Claude 3.7 in other apps from that same AWS account, though. It’s odd that we get different results doing the same thing.

Same here: I followed the steps described by @MangelMelg and get the same error as @Constantine.


Use only this ID: eu.anthropic.claude-3-7-sonnet-20250219-v1:0

It works for me.


Thank you for your help.

I tried but got the same error.

The model is active and works in the playground, but the Bedrock node doesn’t accept it.

bingo!

I’m using us.anthropic.claude-sonnet-4-20250514-v1:0 right now and it’s working just fine
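Both IDs reported as working in this thread look like the plain model ID with a geography prefix (`us.` or `eu.`) in front. A small sketch of that pattern follows; the helper names are hypothetical, and the prefix set only covers the two prefixes seen in this thread, so other geographies would need to be added after checking the console.

```python
# Prefixes seen in this thread; the real set may be larger (assumption).
GEO_PREFIXES = ("us", "eu")


def to_profile_id(model_id: str, geo: str) -> str:
    """Prefix a plain model ID with a geography, e.g. 'us' or 'eu'."""
    if geo not in GEO_PREFIXES:
        raise ValueError(f"unknown geography prefix: {geo!r}")
    return f"{geo}.{model_id}"


def split_profile_id(value: str):
    """Return (geo, model_id); geo is None for a plain model ID."""
    head, _, rest = value.partition(".")
    if head in GEO_PREFIXES and rest:
        return head, rest
    return None, value


print(to_profile_id("anthropic.claude-3-5-sonnet-20241022-v2:0", "us"))
print(split_profile_id("us.anthropic.claude-sonnet-4-20250514-v1:0"))
```

Whether a given prefixed ID actually exists depends on the profiles listed for your account and region, so the result still has to be checked against the Cross-Region Inference page.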
