I’m sharing my 10+ hours of experience here for anyone else who stumbles upon this migration challenge:

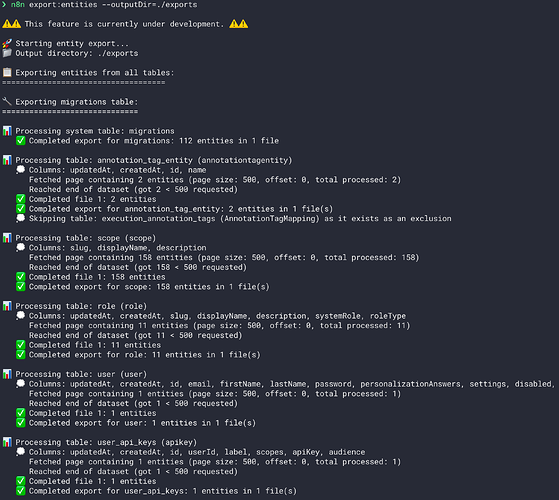

The official documentation points to export/import CLI commands.

Unfortunately, these were not present in my older version of n8n. If you run the command and get export:entities is not a valid command, your n8n version needs to be updated.

WARNING: By updating the n8n itself, stopping & redeploying containers you risk losing data, that are not written to the disk. The sqlite works using journaling, Write ahead logging (WAL) and therefore after spinning up the container, you may lose your data (Speaking of experience here).

WARNING: By updating the n8n itself, stopping & redeploying containers you risk losing data, that are not written to the disk. The sqlite works using journaling, Write ahead logging (WAL) and therefore after spinning up the container, you may lose your data (Speaking of experience here).

On top of that, my SQLite schema was corrupted. When importing with n8n import:entities, I ran into a duplicate key value violates unique constraint error. It seems the n8n import script was either importing data incorrectly or had an improperly configured compound index.

Option 2.: Migrate using sequel

While searching, I found this article on using Sequel and gave it a shot. I spun up a new instance and tried the command:

sequel -C sqlite://<sqlite_filename> postgresql://<user>:<pass>@<host>:<port>/<dbname>

This failed with a schema creation error: Sequel::database error pg:datatypemismatch foreign key constraint cannot be implemented.

This seemed specific to my setup, but after inspecting the database manually with sqlite3, I discovered a real data type mismatch.

CREATE TABLE "workflows_tags" (

"workflowId" varchar (36) NOT NULL,

"tagId" integer NOT NULL, -- <--- Note: This is an integer

PRIMARY KEY("workflowId","tagId"),

CONSTRAINT "FK_workflows_tags_tag_entity" FOREIGN KEY("tagId") REFERENCES "tag_entity"("id") ON DELETE CASCADE ON UPDATE NO ACTION,

CONSTRAINT "FK_workflows_tags_workflow_entity" FOREIGN KEY("workflowId") REFERENCES "workflow_entity"("id") ON DELETE CASCADE ON UPDATE NO ACTION

);

but the tag_entity table schema:

CREATE TABLE "tag_entity" (

"id" varchar (36) NOT NULL, -- <--- But this is a varchar

"name" varchar (24) NOT NULL,

"createdAt" datetime(3) NOT NULL DEFAULT (STRFTIME('%Y-%m-%d %H:%M:%f', 'NOW')),

"updatedAt" datetime(3) NOT NULL DEFAULT (STRFTIME('%Y-%m-%d %H:%M:%f', 'NOW')),

PRIMARY KEY("id")

);

So, the database schema was fundamentally incorrect. I’m still not sure how this happened.

My next approach was to let n8n create the schema first. I configured n8n to connect to a fresh PostgreSQL database by setting the DB_TYPE, DB_POSTGRESDB_DATABASE, etc., environment variables. On startup, n8n correctly ran its migrations and created all the necessary tables.

Unfortunately, the sequel -C command always tries to create the schema itself and cannot be used just to import data. So, this was another dead end.

I tried using this script, but unfortunately it is probably not future-proof. There are hardcoded table names and these tend to change over time. There was a data column names mismatch. I played with the shell script, was adding / removing table names (e.g. data_store vs. data_table, workflow_dependency) but didn’t manage to succeed.

Option 4: Manual Export & Import (The Tedious Last Resort)

This was the last resort: exporting each SQLite table to CSV and then importing them one by one into PostgreSQL.

After stubling on this piece of code, I tried exporting the tables to CSV:

TS=`sqlite3 ./database.sqlite "SELECT tbl_name FROM sqlite_master WHERE type='table' and tbl_name not like 'sqlite_%';"`

# exports each table to csv

for T in $TS; do

sqlite3 ./database.sqlite <<!

.headers on

.mode csv

.output $T.csv

select * from $T;

!

done

This left me with about 40+ .csv files.

In the meantime, I booted the n8n instance connected to the empty PostgreSQL server (as in Option 2) and let it run its migrations to create the correct schema.

Then came the tedious part. For each table, I used psql’s \copy command:

\copy <table_name> FROM '<table_name.csv>' DELIMITER ',' CSV HEADER

For example:

\copy user FROM 'user.csv' DELIMITER ',' CSV HEADER

This didn’t always work smoothly. In some tables, the column order in the SQLite export did not match the PostgreSQL schema. This caused type mismatch errors, like trying to import a string into a boolean column.

To fix this, I had to inspect the CSV header (head -1 <file>.csv) and manually specify the column order in the \copy command:

\copy workflow_entity(id,"workflowId","finished","mode","retryOf","retrySuccessId","startedAt","stoppedAt","waitTill","status","deletedAt","createdAt") FROM 'workflow_entity.csv' DELIMITER ',' CSV HEADER

By repeating this process for every table, I was finally able to import all my data into the new database.

Sidenotes

Disable instance owner setup

After the import, when you start your n8n instance, you will be prompted to create a new owner. To bypass this and log in with your migrated users, run the following SQL command, as mentioned by @Shadoxity:

UPDATE settings SET value = true WHERE key = 'userManagement.isInstanceOwnerSetUp';

After the next reboot, you should be able to log in with your original user credentials.

Truncate the database after every failed import

Each time an import attempt failed, I had to wipe the target database to start fresh. This ensures you don’t have partial or corrupted data.

\connect <N8N_DB_NAME>;

DROP SCHEMA public CASCADE;

CREATE SCHEMA public;

GRANT ALL ON SCHEMA public TO <N8N_DB_USER>;

Conclusion

I’m aware that this is not the most ideal approach, and I hope for most users, a simple export/import will work. For those unlucky ones, I wanted to show that there is a way to retrieve your data without having to set everything up again from scratch.

I hope someone can benefit from this.

Congrats, you made it to the end!

Edit: I was editing this text multiple times, because cloudflare wouldn’t let me post here (It thought the SQL queries are injection). And I had to bi-sect and find which part of the text causes this. Apparently the varchar keyword.