davidjm

September 4, 2024, 7:46am

1

Hi,

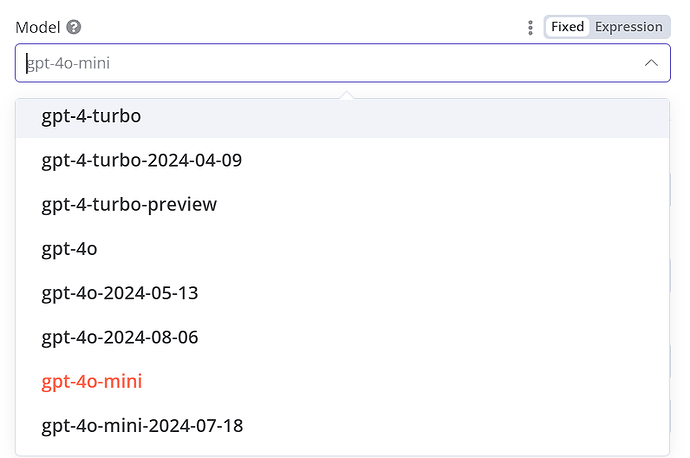

I’d like to know how to use a fine-tuned OpenAI model in the AI Agent. I checked, and no fine-tuned models are listed in the OpenAI Chat Model node.

**n8n version:** 1.55.3

**Database (default: SQLite):** SQLite

**n8n EXECUTIONS_PROCESS setting (default: own, main):** own

**Running n8n via (Docker, npm, n8n cloud, desktop app):** Docker

**Operating system:** Linux

aya

September 4, 2024, 11:28am

2

Hi @davidjm

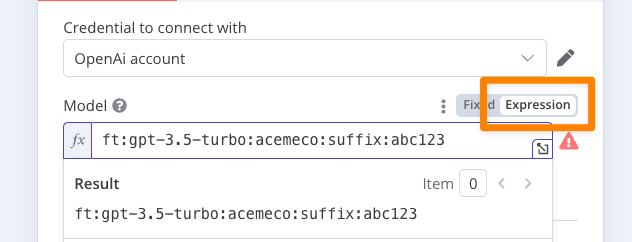

I don’t have a fine-tuned model to test this with, but you should be able to enter the name of the model by switching to the Expression tab.

Can you try using the fine-tuned model in the OpenAI playground, check the name of your fine-tuned model, and then enter the name directly in n8n?
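If it helps when checking the name, here is a minimal Python sketch for finding and sanity-checking it before pasting it into n8n. It assumes the `ft:<base-model>:<org>:<suffix>:<id>` naming that OpenAI uses for fine-tuned models; the helper names and the example ID are made up for illustration, and `list_fine_tuned_models` additionally assumes the `openai` package and an `OPENAI_API_KEY` in the environment.

```python
from typing import Optional


def parse_fine_tuned_name(model_id: str) -> Optional[dict]:
    """Split an ID shaped like ft:<base>:<org>:<suffix>:<id> into its parts.

    Returns None when the ID does not look like a fine-tuned model.
    Anything after the fifth field (e.g. a checkpoint marker) is ignored.
    """
    parts = model_id.split(":")
    if len(parts) < 5 or parts[0] != "ft":
        return None
    _, base, org, suffix, job_id = parts[:5]
    return {"base": base, "org": org, "suffix": suffix, "id": job_id}


def list_fine_tuned_models() -> list:
    """Return the fine-tuned model IDs visible to the current API key.

    Imported lazily so the parsing helper above works without the
    `openai` package or network access.
    """
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    return [m.id for m in client.models.list() if m.id.startswith("ft:")]


# Hypothetical ID, for illustration only.
example = "ft:gpt-4o-mini-2024-07-18:my-org:support-bot:abc123"
print(parse_fine_tuned_name(example))
```

The full string, including the `ft:` prefix, is what would go into the Model field in Expression mode.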

aya

September 4, 2024, 11:30am

3

davidjm

September 6, 2024, 3:36am

4

Thanks for the info, @aya. Can’t wait for the fix.

In the meantime, David, you can choose gpt-4o and use the Structured Output Parser to define how you want the output to be reasoned and delivered. This should improve your reliability a lot. AI Jason has a good video showing this, and here is the OpenAI guide: https://platform.openai.com/docs/guides/structured-outputs
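As a rough illustration of what "defining the output" can look like, here is a Python sketch of a JSON schema plus a small check on the reply. The schema, its field names, and `check_reply` are hypothetical; the `RESPONSE_FORMAT` dictionary follows the `json_schema` response format described in the linked guide, and it is only built here, not sent to the API.

```python
import json

# Hypothetical schema: the fields are assumptions for illustration.
ANSWER_SCHEMA = {
    "type": "object",
    "properties": {
        "reasoning": {"type": "string"},
        "answer": {"type": "string"},
        "confidence": {"type": "number"},
    },
    # Strict mode expects every property to be required
    # and additional properties to be disallowed.
    "required": ["reasoning", "answer", "confidence"],
    "additionalProperties": False,
}

# Shape of the response_format parameter for structured outputs.
RESPONSE_FORMAT = {
    "type": "json_schema",
    "json_schema": {"name": "agent_answer", "strict": True, "schema": ANSWER_SCHEMA},
}


def check_reply(raw: str) -> dict:
    """Parse a model reply and confirm it has exactly the schema's keys."""
    data = json.loads(raw)
    missing = [k for k in ANSWER_SCHEMA["required"] if k not in data]
    extra = [k for k in data if k not in ANSWER_SCHEMA["properties"]]
    if missing or extra:
        raise ValueError(f"missing={missing} extra={extra}")
    return data


reply = '{"reasoning": "Matched FAQ entry", "answer": "Yes", "confidence": 0.9}'
print(check_reply(reply)["answer"])
```

In n8n, a schema like `ANSWER_SCHEMA` would go into the Structured Output Parser rather than into code; the check above only shows the kind of guarantee you get from it.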

jan

September 11, 2024, 5:53pm

7

A new version of n8n has been released that includes GitHub PR 10662.

system

December 10, 2024, 5:53pm

8

This topic was automatically closed 90 days after the last reply. New replies are no longer allowed.