Hello community.

I'm building a parser with a loop.

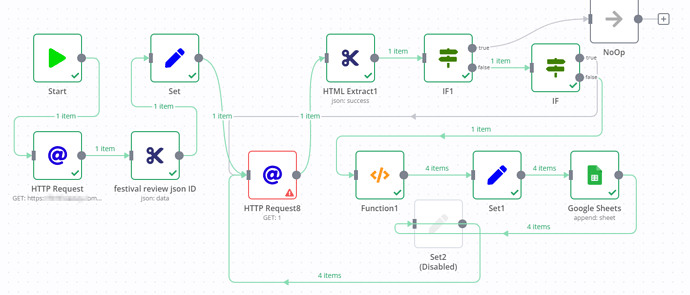

The workflow is like this:

- HTTP Request - starting URL domain.com/startUrl

- HTML Extract - in this step I'm extracting the URL of a JSON file (/something/ID/something.json). something.json has dozens of pages, and each page/JSON file contains 0-5 links to profiles (some profiles are private, so they have no profile link). These links are what I need to save into a Google Sheet.

- Next is Set, where I add the domain in front and the paging parameter at the end: https://domain.com{{$json["data"]}}?page=

- Next is HTTP Request, where the URL is {{$node["Set"].json["review.json"]}}{{$runIndex+1}}. runIndex starts from 0, and page 0 and page 1 are the same, so +1 is fine for me.

- Next is HTML Extract, where I'm extracting 1) the links to profiles and 2) the name of the profile (this is used in the next step).

- Next is IF - checking whether json?page=1 has a “name of profile”. If it doesn't, the workflow stops; if it does, it goes on to the next step.

- Next IF - checking whether “links to profile” exist. If not, it returns to the last HTTP Request; if there is a “link to profile”, it continues to

- Function, where the links are split into one URL per line in the JSON (the links come without the domain, like /profileURL)

- So in the next step, Set, I add the domain in front of each link.

- Next, the links are written to Google Sheet. This first run goes OK.
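To make the Function and second Set steps concrete, here is a minimal sketch in plain JavaScript of splitting the extracted links into one item per link and prefixing the domain. The names `toProfileItems` and `DOMAIN` are hypothetical and not taken from my workflow; the `{ json: ... }` item shape is what I understand a Function node is expected to return.

```javascript
// Hypothetical constant: the domain that the relative links belong to.
const DOMAIN = 'https://domain.com';

// Hypothetical helper (not part of n8n): takes the relative links extracted
// from one page, e.g. ['/profileA', '/profileB'], and returns one item per
// link with the domain prefixed. Empty or non-string entries are dropped.
function toProfileItems(links, domain = DOMAIN) {
  return (links || [])
    .filter((link) => typeof link === 'string' && link.trim() !== '')
    .map((link) => ({ json: { url: domain + link.trim() } }));
}

const profileItems = toProfileItems(['/profileA', ' /profileB ', '']);
console.log(profileItems);
```

Doing both steps in one place would mean a page with no links simply produces an empty list instead of needing a separate branch.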

As I mentioned, there are many JSON pages (.json?page=1, .json?page=2, etc.), so I'm returning to

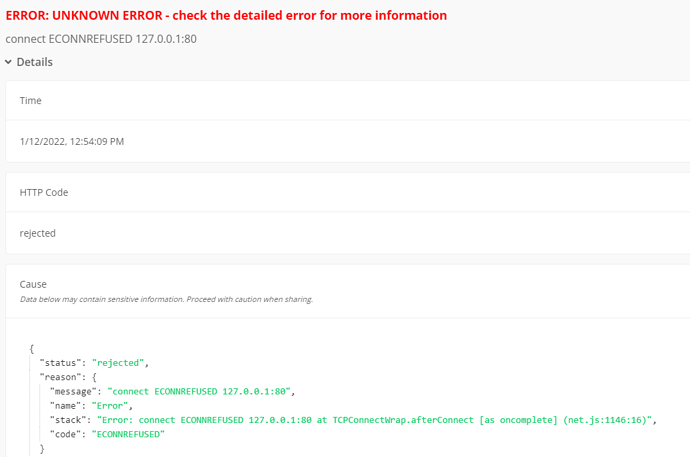

- HTTP Request again, to fetch the next page. {{$node["Set"].json["review.json"]}}{{$runIndex+1}} should now return page=2, but here I get an error, and it looks like it forgot the start URL.

The error when starting the loop is:

Workflow:

What I need is to loop over the JSON pages until there are no more names and links in the JSON file. At the moment this one has about 240 pages, but a (half-empty) JSON is still generated even when trying page=999.
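The stopping rule I have in mind can be sketched in plain JavaScript as follows. This is only an illustration of the logic, not the workflow itself: `collectProfileLinks`, `fetchPage`, and `maxPages` are hypothetical names, and `fetchPage` stands in for the HTTP Request plus HTML Extract steps. The safety cap is my own assumption, added because pages like page=999 still return a (half-empty) JSON.

```javascript
// Hypothetical sketch: walk ?page=1, ?page=2, ... and stop at the first page
// that has no profile names. `fetchPage(url)` is assumed to return
// { names: [...], links: [...] } for the given page URL.
function collectProfileLinks(baseUrl, fetchPage, maxPages = 1000) {
  const collected = [];
  for (let page = 1; page <= maxPages; page++) {
    const { names = [], links = [] } = fetchPage(`${baseUrl}?page=${page}`);
    if (names.length === 0) break;    // no names: we are past the last real page
    if (links.length === 0) continue; // only private profiles: try the next page
    collected.push(...links);
  }
  return collected;
}

// Fake data for demonstration only: three real pages, everything after is empty.
const fakePages = {
  1: { names: ['a', 'b'], links: ['/a', '/b'] },
  2: { names: ['c'], links: [] },
  3: { names: ['d'], links: ['/d'] },
};
const fakeFetch = (url) => {
  const page = Number(new URL(url).searchParams.get('page'));
  return fakePages[page] || { names: [], links: [] };
};

const collectedLinks = collectProfileLinks(
  'https://domain.com/something/ID/something.json',
  fakeFetch
);
console.log(collectedLinks);
```

The two checks mirror the two IF nodes: the first ends the loop, the second only skips the write step for that page.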

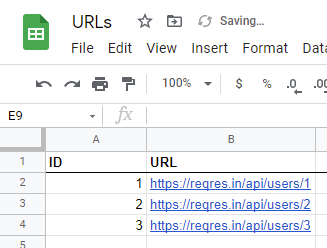

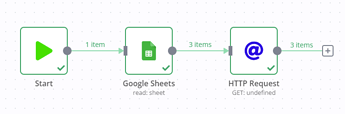

In the near future I will also need a hint on how to read a Google Sheet with many URLs: one URL per workflow execution, then read the next URL. And what about “runIndex” in this case? How can I reset it for the next ID/something.json file? On the next URL I need to start from zero again, and I plan to make this a loop too.
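One way to picture the reset, assuming the page number is kept in my own counter instead of being derived from runIndex, is a small state step like the sketch below. The state shape `{ urlIndex, page, done }` and the function `nextState` are hypothetical and not an n8n API.

```javascript
// Hypothetical state step: decide which URL and which page to request next.
// `pageHadNames` is true when the page just fetched contained profile names;
// `urlCount` is the number of URLs read from the sheet.
function nextState(state, pageHadNames, urlCount) {
  if (pageHadNames) {
    // Same URL, next page.
    return { urlIndex: state.urlIndex, page: state.page + 1, done: false };
  }
  // Empty page: move to the next URL and restart the page counter.
  const urlIndex = state.urlIndex + 1;
  return { urlIndex, page: 1, done: urlIndex >= urlCount };
}

const start = { urlIndex: 0, page: 1, done: false };
const afterFullPage = nextState(start, true, 2);           // stays on URL 0, page 2
const afterEmptyPage = nextState(afterFullPage, false, 2); // moves to URL 1, page 1
const afterLastUrl = nextState(afterEmptyPage, false, 2);  // no URLs left
console.log(afterFullPage, afterEmptyPage, afterLastUrl);
```

With an explicit counter like this, starting again from the first page for each new URL is just a matter of setting `page` back to 1.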

Information on your n8n setup

- **n8n version:** 0.158.0

- **Database you’re using (default: SQLite):** PS

- **Running n8n with the execution process [own(default), main]:**

- **Running n8n via [Docker, npm, n8n.cloud, desktop app]:** docker