Hello everyone,

I am trying to build a LINE bot with n8n, using an LLM together with the Groq chat model. However, the workflow keeps failing at the fifth node, “HTTP Request,” whenever I test it. The error message reads:

Problem in node ‘HTTP Request’

Bad request - please check your parameters

As far as I can tell, I have filled in all the LINE API parameters correctly, but I have been stuck for two days without finding a solution.

Here is my workflow:

https://drive.google.com/file/d/1Q_HW3NyA21nCY0H8tjhs2_9Rx9ei6RNu/view?usp=sharing

My Channel access token (long-lived):

PgkX2v7HcWo5Opyp8QRtipjo98C3s6SzOJ5LnSnKWbx5y1gAdSRDqe8jv4GgY/QjQSmQ/HEcw5C/wVFv1sfv+GYwnbl4c98S8yzKaZXOZxF7nprnYYlt2O6bC8DvRaYJrB6UhFdceZSmdgBuj8IrqAdB04t89/1O/w1cDnyilFU=

I have also set the Header:

Name = Authorization

Value = Bearer PgkX2v7HcWo5Opyp8QRtipjo98C3s6SzOJ5LnSnKWbx5y1gAdSRDqe8jv4GgY/QjQSmQ/HEcw5C/wVFv1sfv+GYwnbl4c98S8yzKaZXOZxF7nprnYYlt2O6bC8DvRaYJrB6UhFdceZSmdgBuj8IrqAdB04t89/1O/w1cDnyilFU=

However, I still receive the following error:

Bad request - please check your parameters

Error details

From HTTP Request

Error code

400

Full message

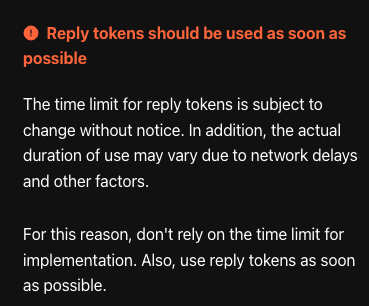

400 - "{\"message\":\"Invalid reply token\"}"

Request

{ "headers": { "content-type": "application/json", "Authorization": "**hidden**", "accept": "application/json,text/html,application/xhtml+xml,application/xml,text/*;q=0.9, image/*;q=0.8, */*;q=0.7" }, "method": "POST", "uri": "https://api.line.me/v2/bot/message/reply", "gzip": true, "rejectUnauthorized": true, "followRedirect": true, "resolveWithFullResponse": true, "followAllRedirects": true, "timeout": 300000, "body": { "replyToken": "{{$json.replyToken}}", "messages": [ { "type": "text", "text": "{{$json.replyMessage}}" } ] }, "encoding": null, "json": false, "useStream": true }
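For reference, the request dump above shows the literal text `{{$json.replyToken}}` in the body, which may mean the expression was not resolved before the request was sent. Below is a minimal TypeScript sketch of the body that the reply endpoint expects, assuming the incoming LINE webhook payload carries the token at `events[0].replyToken`. The type names and the `buildReplyBody` helper are hypothetical and only for illustration; they are not part of n8n or the LINE SDK.

```typescript
// Hypothetical types: only the fields used in this sketch are modelled.
interface LineWebhookEvent {
  type: string;
  replyToken?: string;
}

interface LineWebhookBody {
  events: LineWebhookEvent[];
}

interface ReplyRequestBody {
  replyToken: string;
  messages: { type: "text"; text: string }[];
}

// Build the JSON body for POST https://api.line.me/v2/bot/message/reply.
// Throws if the token is missing or still looks like an unresolved
// template expression such as "{{$json.replyToken}}".
function buildReplyBody(webhook: LineWebhookBody, text: string): ReplyRequestBody {
  const token = webhook.events[0]?.replyToken;
  if (!token || token.includes("{{")) {
    throw new Error("replyToken is missing or was not resolved");
  }
  return { replyToken: token, messages: [{ type: "text", text }] };
}
```

A check like this would fail early, before the request is sent, if the token is still a placeholder. It does not cover a token that has already been used or has expired.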

Other info

Item Index

0

Node type

n8n-nodes-base.httpRequest

Node version

4.2 (Latest)

n8n version

1.45.1 (Cloud)

Time

2024/6/21 7:33:42 PM

Stack trace

NodeApiError: Bad request - please check your parameters
    at Object.execute (/usr/local/lib/node_modules/n8n/node_modules/n8n-nodes-base/dist/nodes/HttpRequest/V3/HttpRequestV3.node.js:1634:35)
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at Workflow.runNode (/usr/local/lib/node_modules/n8n/node_modules/n8n-workflow/dist/Workflow.js:728:19)
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/WorkflowExecute.js:664:51
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/WorkflowExecute.js:1079:20

Could the more experienced members please help? Thank you.