Describe the problem

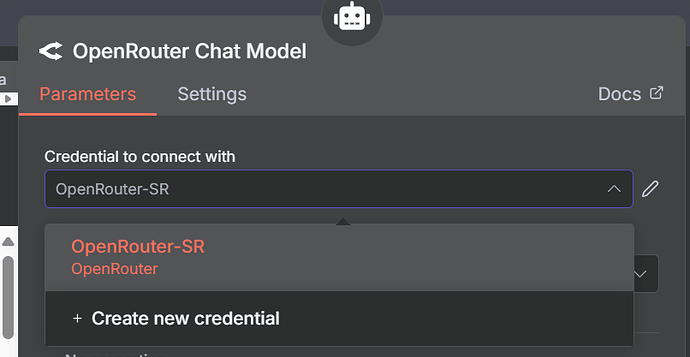

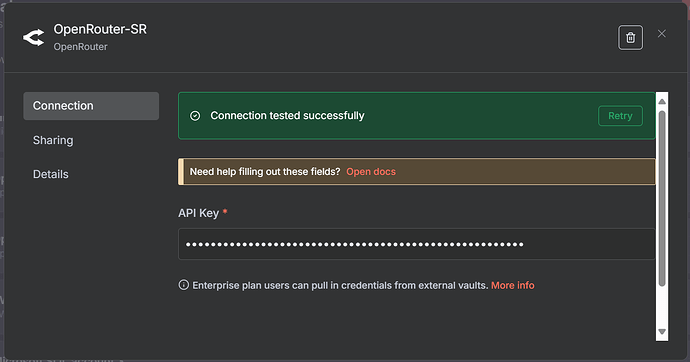

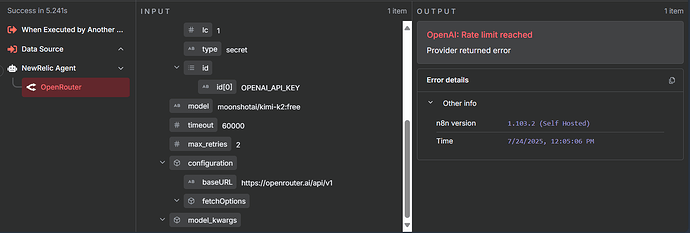

The Agent node attempts to invoke the OpenRouter node with an OpenAI API key, even though a different model is selected and a credential of type OpenRouterApi has been created for authentication.

What is the error message (if any)?

OpenAI: Rate limit reached
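
To narrow down where the rate limit comes from, the same model can be called outside n8n. The following is a minimal sketch, not part of the workflow: the helper names `buildOpenRouterRequest` and `probe` are hypothetical, and it assumes OpenRouter's OpenAI-compatible chat completions path under the base URL shown in the node output, with the key sent as a bearer token.

```typescript
interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build (but do not send) a chat completion request.
// Assumption: the endpoint is the base URL from the node output plus /chat/completions.
function buildOpenRouterRequest(apiKey: string, model: string, prompt: string): ChatRequest {
  return {
    url: "https://openrouter.ai/api/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ model, messages: [{ role: "user", content: prompt }] }),
    },
  };
}

// Send the request and return the HTTP status code; 429 would indicate a rate limit.
async function probe(apiKey: string): Promise<number> {
  const { url, init } = buildOpenRouterRequest(apiKey, "moonshotai/kimi-k2:free", "ping");
  const response = await fetch(url, init);
  return response.status;
}
```

Calling `probe` with the OpenRouter key (for example read from an environment variable such as `OPENROUTER_API_KEY`, a name chosen here for illustration) and comparing the status with the n8n error may show whether the limit is hit on the OpenRouter side as well.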

Please share your workflow

Share the output returned by the last node

[
  {
    "messages": [

"System: # Overview\nYou are a NewRelic Observability Analysis Agent. Your function is to validate or disprove operational issues based on observability data available in NewRelic. You will be provided with structured inputs and have access to a tool that accepts a data source and scope to return relevant analysis.\n## Input\nYou will receive the following input fields:\n- `name` (string): The name of the entity.\n- `type` (string): The entity type. Example values: `microservice`, `aws`, `standalone`.\n- `environment` (string): The environment in which the issue is occurring. Example: `dev`, `prod`.\n- `problem` (string): A natural language description of the problem.\n- `sources` (array of strings): All available NewRelic data sources for the specified environment.\n## Tools\n### 1. 'NRQL Workflow'\nAccepts the following JSON input and returns a scoped analysis:\n```json\n{\n \"name\": \"<string>\",\n \"environment\": \"<string>\",\n \"data_source\": \"<string>\",\n \"scope\": \"<string>\"\n}\n```\nYou must define:\n- `data_source` based on `type` and `sources`.\n- `scope` based on the `problem` description.\n## Responsibilities\n1. **Data Source Selection**\n - Select a relevant `data_source` from the `sources` list based on the provided `type` and `name`.\n - Rules for selection:\n - If `type` is `microservice`: prioritize application-level sources such as pods, containers, or APM service names.\n - If `type` is `aws`: select from infrastructure sources relevant to AWS components (e.g., `EC2`, `Lambda`, `RDS`).\n - If `type` is `standalone`: select from host-level infrastructure sources.\n - If no exact match, choose the most relevant partial match.\n - Only one data source must be used per tool invocation.\n2. 
**Scope Extraction**\n - Parse the `problem` to extract the scope of the issue.\n - The `scope` must represent a specific performance or operational concern, such as:\n - `cpu usage`\n - `memory consumption`\n - `latency`\n - `error rate`\n - `throughput`\n - Scope must be concise, relevant, and based solely on the problem statement.\n3. **Tool Invocation**\n - Construct the JSON input using the selected `data_source` and derived `scope`.\n - Call the tool using this input.\n - Capture and interpret the analysis result returned by the tool.\n4. **Result Evaluation**\n - Compare the analysis result with the original `problem`:\n - If the result **confirms**, **contradicts**, or **provides insights related to** the problem **within scope**, generate a report.\n - If the result is **out of scope**, repeat the process with the next best available data source.\n - Continue until:\n - A scoped analysis is obtained, or\n - All relevant sources are exhausted.\n## Output Requirements\n- The final output must include:\n - The selected `data_source`\n - The derived `scope`\n - A summary of the analysis result\n - A structured comparison with the original problem\n - A conclusion: `confirmed`, `disproved`, or `inconclusive`\n## Rules and Constraints\n- Do not infer or hallucinate data.\n- Do not include unverified assumptions in the scope.\n- Do not use multiple data sources in a single tool invocation.\n- Do not exit early unless all sources have been evaluated or a valid scoped analysis has been produced.\n- Always stay within the problem scope.\n- Ensure clarity, precision, and technical accuracy in all outputs.\nHuman: name: sales-costing-cron-executor-service\ntype: microservice\nenvironment: DEV\nproblem: Notifications not sent\nsources: 
AgentUpdate,ApplicationAgentContext,BrowserInteraction,CsecEntitySupport,Entity,EntityAudits,EntityRelationship,ErrorTrace,GRID_DEV,InfrastructureEvent,InternalK8sCompositeSample,K8sClusterSample,K8sContainerSample,K8sCronjobSample,K8sDaemonsetSample,K8sDeploymentSample,K8sEndpointSample,K8sHpaSample,K8sJobSample,K8sNamespaceSample,K8sNodeSample,K8sPersistentVolumeClaimSample,K8sPodSample,K8sReplicasetSample,K8sServiceSample,K8sStatefulsetSample,K8sVolumeSample,Log,Metric,MssqlCustomQuerySample,MssqlDatabaseSample,MssqlInstanceSample,MssqlWaitSample,NFSSample,NetworkSample,NrAiIncident,NrAiIssue,NrAiNotification,NrAiSignal,NrComputeUsage,NrConsumption,NrDailyUsage,NrIntegrationError,NrMTDConsumption,NrUsage,NrdbQuery,PageView,PageViewTiming,ProcessSample,Public_APICall,QueueStatus,RapidAppEvents,Relationship,SqlTrace,StorageSample,SyntheticsPrivateLocationStatus,SystemSample,Transaction,TransactionError,TransactionTrace,cdcHealthStatus,flexStatusSample"

    ],
    "estimatedTokens": 1061,
    "options": {
      "openai_api_key": {
        "lc": 1,
        "type": "secret",
        "id": [
          "OPENAI_API_KEY"
        ]
      },
      "model": "moonshotai/kimi-k2:free",
      "timeout": 60000,
      "max_retries": 2,
      "configuration": {
        "baseURL": "https://openrouter.ai/api/v1",
        "fetchOptions": {}
      },
      "model_kwargs": {}
    }
  }
]
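
For anyone reading the output above, the relevant fields can be pulled out with a small helper. This is only a sketch: `describeTarget` and the interfaces are hypothetical names that mirror the field names in the output, not an n8n API, and the fallback URL assumes the client defaults to OpenAI's public endpoint when no `baseURL` is set.

```typescript
// Hypothetical types mirroring the "options" object in the node output.
interface ModelOptions {
  model: string;
  configuration?: { baseURL?: string };
  openai_api_key?: { type: string; id: string[] };
}

interface TargetSummary {
  host: string;
  model: string;
  secretIds: string[];
}

// Summarise which host a serialized chat-model config points at
// and which secret placeholders it carries.
function describeTarget(options: ModelOptions): TargetSummary {
  // Assumption: a missing baseURL means the default OpenAI endpoint is used.
  const baseURL = options.configuration?.baseURL ?? "https://api.openai.com/v1";
  return {
    host: new URL(baseURL).host,
    model: options.model,
    secretIds: options.openai_api_key?.id ?? [],
  };
}

// Values copied from the output above.
const summary = describeTarget({
  model: "moonshotai/kimi-k2:free",
  configuration: { baseURL: "https://openrouter.ai/api/v1" },
  openai_api_key: { type: "secret", id: ["OPENAI_API_KEY"] },
});
console.log(summary);
```

For the values in this report, the summary has host `openrouter.ai` while the only secret placeholder is `OPENAI_API_KEY`, which is the mismatch described at the top. It separates the question of which endpoint is called from the question of which key is attached.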

Information on your n8n setup

core

- n8nVersion: 1.102.3

- platform: docker (self-hosted)

- nodeJsVersion: 22.17.0

- database: sqlite

- executionMode: regular

- concurrency: -1

- license: enterprise (production)

storage

- success: all

- error: all

- progress: false

- manual: true

- binaryMode: memory

pruning

- enabled: true

- maxAge: 336 hours

- maxCount: 10000 executions

Generated at: 2025-07-22T13:53:52.805Z