Hi experts,

I’m running into processing problems with the Pinecone Vector Store and OpenAI Embeddings nodes in my n8n RAG data processing workflow.

Environment Details:

- n8n version: 1.95.3

- Database: SQLite (default)

- Running n8n via: Docker

- Operating system: Windows 10

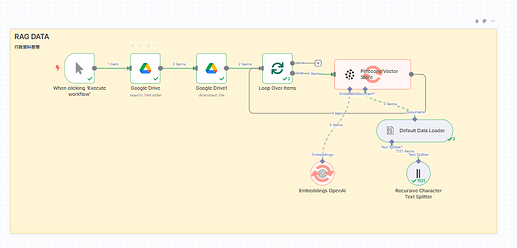

Current Workflow:

Google Sheets → Google Drive → Loop Over Items → Pinecone Vector Store

↓

OpenAI Embeddings ← Recursive Character Text Splitter

Issues I’m encountering:

- The embedding computation gets stuck/freezes during processing

- Inconsistent retrieval results from Pinecone queries

- Processing is very slow

Questions:

- Are there known compatibility issues between OpenAI embedding models and Pinecone?

- What’s the best practice for ensuring embedding model consistency?

- Should I use text-embedding-ada-002 or text-embedding-3-small for better Pinecone integration?

- How can I optimize the batch processing for large datasets?

- Are there specific timeout or rate limit configurations I should consider?
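
To make the consistency question concrete, this is roughly the check I have in mind. It is only a sketch outside of n8n, not something the nodes do for me: the function names and the `index_dimension` argument are hypothetical, and the table holds the default output sizes of the OpenAI models as I understand them.

```python
# Default output dimensions of the OpenAI embedding models in question.
DEFAULT_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
}


def dimension_matches_index(model: str, index_dimension: int) -> bool:
    """Return True if the model's default vector size equals the index dimension."""
    if model not in DEFAULT_DIMENSIONS:
        raise ValueError(f"Unknown model: {model}")
    return DEFAULT_DIMENSIONS[model] == index_dimension


def same_model_for_insert_and_query(insert_model: str, query_model: str) -> bool:
    """Return True only if ingestion and retrieval use the identical model.

    Matching dimensions are not enough: ada-002 and 3-small both produce
    1536-dimensional vectors, but the vectors live in different spaces,
    so mixing them would make similarity scores meaningless.
    """
    return insert_model == query_model
```

My assumption is that if either check fails (for example, the index was filled with one model and is queried with another), that alone could explain the inconsistent retrieval results.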

Additional Context:

- Data source: Google Sheets via Google Drive

- Processing method: Loop Over Items (sequential processing)

- Text processing: Recursive Character Text Splitter before embedding
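
For the batching and rate limit questions, this is the pattern I would like to reproduce in the workflow instead of handling one item at a time. It is a minimal sketch under my own assumptions: `embed_fn` is a hypothetical placeholder for whatever actually calls the embeddings API, and the batch size, retry count, and delays are illustrative values, not recommended settings.

```python
import time
from typing import Callable, Iterator, List, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def embed_with_retry(
    batch: List[str],
    embed_fn: Callable[[List[str]], List[List[float]]],  # hypothetical API wrapper
    max_retries: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[List[float]]:
    """Call `embed_fn`, waiting base_delay * 2**attempt seconds after each failure."""
    if max_retries < 1:
        raise ValueError("max_retries must be at least 1")
    for attempt in range(max_retries):
        try:
            return embed_fn(batch)
        except Exception:
            # Give up after the final attempt; otherwise back off and retry.
            if attempt == max_retries - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise RuntimeError("unreachable")
```

The idea would be to loop with `for batch in batched(chunks, 100)` and pass each batch to `embed_with_retry`, so that a rate limit error slows the run down rather than freezing it.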

Any guidance would be greatly appreciated!