Hi @Jon

Current version: 0.201.0 (upgraded from 0.199.0)

I think I found the first place that is causing the memory leak issue.

When the Docker container is idle (not running any workflows), it consumes ~130 MB.

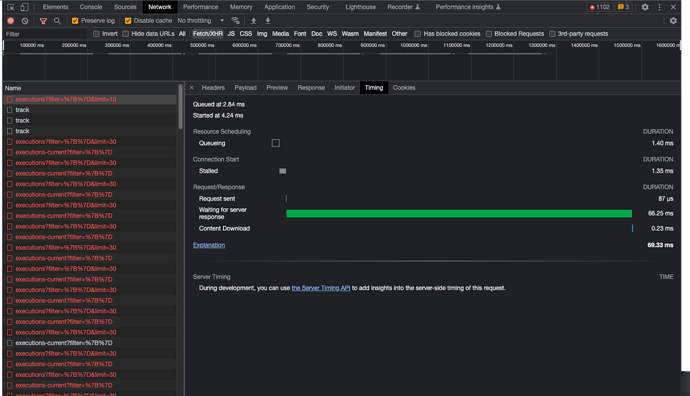

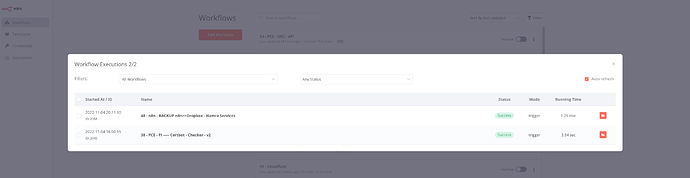

The moment I click the Executions tab on the left, memory usage spikes to > 3 GB!

Example from the Docker log:

11/04/2022 2:08:42 AM

http://localhost:5678/

11/04/2022 5:11:32 AM

query is slow: SELECT "ExecutionEntity"."id" AS "ExecutionEntity_id", "ExecutionEntity"."data" AS "ExecutionEntity_data", "ExecutionEntity"."finished" AS "ExecutionEntity_finished", "ExecutionEntity"."mode" AS "ExecutionEntity_mode", "ExecutionEntity"."retryOf" AS "ExecutionEntity_retryOf", "ExecutionEntity"."retrySuccessId" AS "ExecutionEntity_retrySuccessId", "ExecutionEntity"."startedAt" AS "ExecutionEntity_startedAt", "ExecutionEntity"."stoppedAt" AS "ExecutionEntity_stoppedAt", "ExecutionEntity"."workflowData" AS "ExecutionEntity_workflowData", "ExecutionEntity"."workflowId" AS "ExecutionEntity_workflowId", "ExecutionEntity"."waitTill" AS "ExecutionEntity_waitTill" FROM "execution_entity" "ExecutionEntity" WHERE "ExecutionEntity"."workflowId" IN (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?) AND 1=1 ORDER BY id DESC LIMIT 10 -- PARAMETERS: [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58]

11/04/2022 5:11:32 AM

execution time: 7294

11/04/2022 5:11:33 AM

query is slow: SELECT "SharedWorkflow"."createdAt" AS "SharedWorkflow_createdAt", "SharedWorkflow"."updatedAt" AS "SharedWorkflow_updatedAt", "SharedWorkflow"."roleId" AS "SharedWorkflow_roleId", "SharedWorkflow"."userId" AS "SharedWorkflow_userId", "SharedWorkflow"."workflowId" AS "SharedWorkflow_workflowId", "SharedWorkflow__workflow"."createdAt" AS "SharedWorkflow__workflow_createdAt", "SharedWorkflow__workflow"."updatedAt" AS "SharedWorkflow__workflow_updatedAt", "SharedWorkflow__workflow"."id" AS "SharedWorkflow__workflow_id", "SharedWorkflow__workflow"."name" AS "SharedWorkflow__workflow_name", "SharedWorkflow__workflow"."active" AS "SharedWorkflow__workflow_active", "SharedWorkflow__workflow"."nodes" AS "SharedWorkflow__workflow_nodes", "SharedWorkflow__workflow"."connections" AS "SharedWorkflow__workflow_connections", "SharedWorkflow__workflow"."settings" AS "SharedWorkflow__workflow_settings", "SharedWorkflow__workflow"."staticData" AS "SharedWorkflow__workflow_staticData", "SharedWorkflow__workflow"."pinData" AS "SharedWorkflow__workflow_pinData" FROM "shared_workflow" "SharedWorkflow" LEFT JOIN "workflow_entity" "SharedWorkflow__workflow" ON "SharedWorkflow__workflow"."id"="SharedWorkflow"."workflowId"

11/04/2022 5:11:33 AM

execution time: 1010

11/04/2022 5:12:25 AM

query is slow: SELECT "ExecutionEntity"."id" AS "ExecutionEntity_id", "ExecutionEntity"."data" AS "ExecutionEntity_data", "ExecutionEntity"."finished" AS "ExecutionEntity_finished", "ExecutionEntity"."mode" AS "ExecutionEntity_mode", "ExecutionEntity"."retryOf" AS "ExecutionEntity_retryOf", "ExecutionEntity"."retrySuccessId" AS "ExecutionEntity_retrySuccessId", "ExecutionEntity"."startedAt" AS "ExecutionEntity_startedAt", "ExecutionEntity"."stoppedAt" AS "ExecutionEntity_stoppedAt", "ExecutionEntity"."workflowData" AS "ExecutionEntity_workflowData", "ExecutionEntity"."workflowId" AS "ExecutionEntity_workflowId", "ExecutionEntity"."waitTill" AS "ExecutionEntity_waitTill" FROM "execution_entity" "ExecutionEntity" WHERE "ExecutionEntity"."workflowId" IN (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?) AND 1=1 ORDER BY id DESC LIMIT 30 -- PARAMETERS: [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58]

11/04/2022 5:12:25 AM

execution time: 47627

11/04/2022 5:12:26 AM

query is slow: SELECT "SharedWorkflow"."createdAt" AS "SharedWorkflow_createdAt", "SharedWorkflow"."updatedAt" AS "SharedWorkflow_updatedAt", "SharedWorkflow"."roleId" AS "SharedWorkflow_roleId", "SharedWorkflow"."userId" AS "SharedWorkflow_userId", "SharedWorkflow"."workflowId" AS "SharedWorkflow_workflowId", "SharedWorkflow__workflow"."createdAt" AS "SharedWorkflow__workflow_createdAt", "SharedWorkflow__workflow"."updatedAt" AS "SharedWorkflow__workflow_updatedAt", "SharedWorkflow__workflow"."id" AS "SharedWorkflow__workflow_id", "SharedWorkflow__workflow"."name" AS "SharedWorkflow__workflow_name", "SharedWorkflow__workflow"."active" AS "SharedWorkflow__workflow_active", "SharedWorkflow__workflow"."nodes" AS "SharedWorkflow__workflow_nodes", "SharedWorkflow__workflow"."connections" AS "SharedWorkflow__workflow_connections", "SharedWorkflow__workflow"."settings" AS "SharedWorkflow__workflow_settings", "SharedWorkflow__workflow"."staticData" AS "SharedWorkflow__workflow_staticData", "SharedWorkflow__workflow"."pinData" AS "SharedWorkflow__workflow_pinData" FROM "shared_workflow" "SharedWorkflow" LEFT JOIN "workflow_entity" "SharedWorkflow__workflow" ON "SharedWorkflow__workflow"."id"="SharedWorkflow"."workflowId"

11/04/2022 5:12:26 AM

execution time: 1515

11/04/2022 5:12:26 AM

query is slow: SELECT "User"."createdAt" AS "User_createdAt", "User"."updatedAt" AS "User_updatedAt", "User"."id" AS "User_id", "User"."email" AS "User_email", "User"."firstName" AS "User_firstName", "User"."lastName" AS "User_lastName", "User"."password" AS "User_password", "User"."resetPasswordToken" AS "User_resetPasswordToken", "User"."resetPasswordTokenExpiration" AS "User_resetPasswordTokenExpiration", "User"."personalizationAnswers" AS "User_personalizationAnswers", "User"."settings" AS "User_settings", "User"."apiKey" AS "User_apiKey", "User"."globalRoleId" AS "User_globalRoleId", "User__globalRole"."createdAt" AS "User__globalRole_createdAt", "User__globalRole"."updatedAt" AS "User__globalRole_updatedAt", "User__globalRole"."id" AS "User__globalRole_id", "User__globalRole"."name" AS "User__globalRole_name", "User__globalRole"."scope" AS "User__globalRole_scope" FROM "user" "User" LEFT JOIN "role" "User__globalRole" ON "User__globalRole"."id"="User"."globalRoleId" WHERE "User"."id" IN (?) -- PARAMETERS: ["ca7028e1-66e6-4b35-82fa-c5916c801882"]

11/04/2022 5:12:26 AM

execution time: 1595

11/04/2022 5:12:26 AM

query is slow: SELECT "User"."createdAt" AS "User_createdAt", "User"."updatedAt" AS "User_updatedAt", "User"."id" AS "User_id", "User"."email" AS "User_email", "User"."firstName" AS "User_firstName", "User"."lastName" AS "User_lastName", "User"."password" AS "User_password", "User"."resetPasswordToken" AS "User_resetPasswordToken", "User"."resetPasswordTokenExpiration" AS "User_resetPasswordTokenExpiration", "User"."personalizationAnswers" AS "User_personalizationAnswers", "User"."settings" AS "User_settings", "User"."apiKey" AS "User_apiKey", "User"."globalRoleId" AS "User_globalRoleId", "User__globalRole"."createdAt" AS "User__globalRole_createdAt", "User__globalRole"."updatedAt" AS "User__globalRole_updatedAt", "User__globalRole"."id" AS "User__globalRole_id", "User__globalRole"."name" AS "User__globalRole_name", "User__globalRole"."scope" AS "User__globalRole_scope" FROM "user" "User" LEFT JOIN "role" "User__globalRole" ON "User__globalRole"."id"="User"."globalRoleId" WHERE "User"."id" IN (?) -- PARAMETERS: ["ca7028e1-66e6-4b35-82fa-c5916c801882"]

11/04/2022 5:12:26 AM

execution time: 1614

11/04/2022 5:12:26 AM

query is slow: SELECT "User"."createdAt" AS "User_createdAt", "User"."updatedAt" AS "User_updatedAt", "User"."id" AS "User_id", "User"."email" AS "User_email", "User"."firstName" AS "User_firstName", "User"."lastName" AS "User_lastName", "User"."password" AS "User_password", "User"."resetPasswordToken" AS "User_resetPasswordToken", "User"."resetPasswordTokenExpiration" AS "User_resetPasswordTokenExpiration", "User"."personalizationAnswers" AS "User_personalizationAnswers", "User"."settings" AS "User_settings", "User"."apiKey" AS "User_apiKey", "User"."globalRoleId" AS "User_globalRoleId", "User__globalRole"."createdAt" AS "User__globalRole_createdAt", "User__globalRole"."updatedAt" AS "User__globalRole_updatedAt", "User__globalRole"."id" AS "User__globalRole_id", "User__globalRole"."name" AS "User__globalRole_name", "User__globalRole"."scope" AS "User__globalRole_scope" FROM "user" "User" LEFT JOIN "role" "User__globalRole" ON "User__globalRole"."id"="User"."globalRoleId" WHERE "User"."id" IN (?) -- PARAMETERS: ["ca7028e1-66e6-4b35-82fa-c5916c801882"]

11/04/2022 5:12:26 AM

execution time: 1621
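
In case it helps with triage, here is a rough Python sketch for tallying the slow-query entries per table. It assumes the log keeps the pattern shown above (a `query is slow:` line followed later by an `execution time:` line, presumably in milliseconds). The names `summarize_slow_queries` and `SAMPLE_LOG` are my own, and the sample is a shortened excerpt of the log above with the column lists trimmed.

```python
import re
from collections import defaultdict

# Matches the start of a slow-query entry and captures the first table after FROM.
SLOW_RE = re.compile(r'^query is slow: SELECT .*? FROM "(?P<table>\w+)"')
# Matches the follow-up line that reports how long the query took.
TIME_RE = re.compile(r'^execution time: (?P<ms>\d+)$')


def summarize_slow_queries(log_text):
    """Return {table_name: [execution times]} for slow queries in the log text."""
    times = defaultdict(list)
    pending_table = None  # table of the most recent "query is slow" line
    for raw in log_text.splitlines():
        line = raw.strip()
        slow = SLOW_RE.match(line)
        if slow:
            pending_table = slow.group("table")
            continue
        timing = TIME_RE.match(line)
        if timing and pending_table is not None:
            times[pending_table].append(int(timing.group("ms")))
            pending_table = None
        # Timestamp lines and blank lines match neither pattern and are skipped.
    return dict(times)


# Shortened excerpt of the log above (column lists trimmed for readability).
SAMPLE_LOG = """
11/04/2022 5:11:32 AM
query is slow: SELECT "ExecutionEntity"."id" AS "ExecutionEntity_id" FROM "execution_entity" "ExecutionEntity" ORDER BY id DESC LIMIT 10
11/04/2022 5:11:32 AM
execution time: 7294
11/04/2022 5:11:33 AM
query is slow: SELECT "SharedWorkflow"."roleId" AS "SharedWorkflow_roleId" FROM "shared_workflow" "SharedWorkflow"
11/04/2022 5:11:33 AM
execution time: 1010
11/04/2022 5:12:25 AM
query is slow: SELECT "ExecutionEntity"."id" AS "ExecutionEntity_id" FROM "execution_entity" "ExecutionEntity" ORDER BY id DESC LIMIT 30
11/04/2022 5:12:25 AM
execution time: 47627
"""

if __name__ == "__main__":
    for table, ms_list in summarize_slow_queries(SAMPLE_LOG).items():
        print(f"{table}: {len(ms_list)} slow queries, slowest {max(ms_list)}")
```

Running it over the full log should make it easier to see that the `execution_entity` query (the one that also selects the `data` and `workflowData` columns) dominates, and that its time grows sharply between `LIMIT 10` and `LIMIT 30`.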