Actually, I tried the setup described in the GitHub repository n8n-io/self-hosted-ai-starter-kit (the Self-hosted AI Starter Kit, an open-source template curated by n8n that quickly sets up a local AI environment) and ran it on a Windows machine, but I always got the same error and the workflow never reached the Ollama model node at all.

Actually, the main reason is that Ollama has two models in n8n:

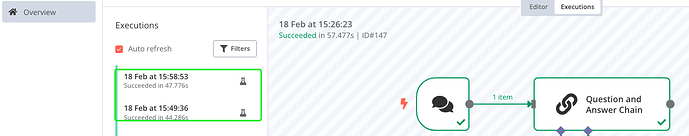

Now it is working fine with all the models; I am not sure what the issue was earlier.

The Docker Compose .yml file:

volumes:
  n8n_storage:
  postgres_storage:
  ollama_storage:
  qdrant_storage:

networks:
  demo:

x-n8n: &service-n8n
  image: n8nio/n8n:latest
  networks: ['demo']
  environment:
    - DB_TYPE=postgresdb
    - DB_POSTGRESDB_HOST=postgres
    - DB_POSTGRESDB_USER=${POSTGRES_USER}
    - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
    - N8N_DIAGNOSTICS_ENABLED=false
    - N8N_PERSONALIZATION_ENABLED=false
    - N8N_ENCRYPTION_KEY
    - N8N_USER_MANAGEMENT_JWT_SECRET
    - OLLAMA_HOST=host.docker.internal:11434
  env_file:
    - .env

x-ollama: &service-ollama
  image: ollama/ollama:latest
  container_name: ollama
  networks: ['demo']
  restart: unless-stopped
  ports:
    - 11434:11434
  volumes:
    - ollama_storage:/root/.ollama

x-init-ollama: &init-ollama
  image: ollama/ollama:latest
  networks: ['demo']
  container_name: ollama-pull-llama
  volumes:
    - ollama_storage:/root/.ollama
  entrypoint: /bin/sh
  environment:
    - OLLAMA_HOST=host.docker.internal:11434
  command:
    - "-c"
    - "sleep 3"

services:
  postgres:
    image: postgres:16-alpine
    hostname: postgres
    networks: ['demo']
    restart: unless-stopped
    environment:
      - POSTGRES_USER
      - POSTGRES_PASSWORD
      - POSTGRES_DB
    volumes:
      - postgres_storage:/var/lib/postgresql/data
    healthcheck:
      test: ['CMD-SHELL', 'pg_isready -h localhost -U ${POSTGRES_USER} -d ${POSTGRES_DB}']
      interval: 5s
      timeout: 5s
      retries: 10

  n8n-import:
    <<: *service-n8n
    hostname: n8n-import
    container_name: n8n-import
    entrypoint: /bin/sh
    command:
      - "-c"
      - "n8n import:credentials --separate --input=/backup/credentials && n8n import:workflow --separate --input=/backup/workflows"
    volumes:
      - ./n8n/backup:/backup
    depends_on:
      postgres:
        condition: service_healthy

  n8n:
    <<: *service-n8n
    hostname: n8n
    container_name: n8n
    restart: unless-stopped
    ports:
      - 5678:5678
    volumes:
      - n8n_storage:/home/node/.n8n
      - ./n8n/backup:/backup
      - ./shared:/data/shared
    depends_on:
      postgres:
        condition: service_healthy
      n8n-import:
        condition: service_completed_successfully

  qdrant:
    image: qdrant/qdrant
    hostname: qdrant
    container_name: qdrant
    networks: ['demo']
    restart: unless-stopped
    ports:
      - 6333:6333
    volumes:
      - qdrant_storage:/qdrant/storage

  ollama-cpu:
    profiles: ["cpu"]
    <<: *service-ollama

  ollama-gpu:
    profiles: ["gpu-nvidia"]
    <<: *service-ollama
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: 1
              capabilities: [gpu]

  ollama-gpu-amd:
    profiles: ["gpu-amd"]
    <<: *service-ollama
    image: ollama/ollama:rocm
    devices:
      - "/dev/kfd"
      - "/dev/dri"

  ollama-pull-llama-cpu:
    profiles: ["cpu"]
    <<: *init-ollama
    depends_on:
      - ollama-cpu

  ollama-pull-llama-gpu:
    profiles: ["gpu-nvidia"]
    <<: *init-ollama
    depends_on:
      - ollama-gpu

  ollama-pull-llama-gpu-amd:
    profiles: [gpu-amd]
    <<: *init-ollama
    image: ollama/ollama:rocm
    depends_on:
      - ollama-gpu-amd

I also run the ollama serve command on the Windows host so that Ollama runs locally, and I start the containers using docker compose --profile cpu up.
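
In case it helps anyone debugging the same thing: with this setup there can be two Ollama servers, the one started by ollama serve on the host and the ollama container started by the cpu profile, and both are configured for port 11434. Which one n8n reaches through host.docker.internal:11434 may not be the one that has the model pulled. Below is a minimal sketch for checking which models a given address serves. It assumes Ollama's /api/tags endpoint (which lists pulled models); the helper names model_names and list_models are made up for this example and are not part of the starter kit.

```python
import json
import sys
import urllib.error
import urllib.request


def model_names(tags_payload: dict) -> list[str]:
    """Extract sorted model names from a parsed /api/tags response body."""
    return sorted(m["name"] for m in tags_payload.get("models", []))


def list_models(base_url: str, timeout: float = 3.0) -> list[str]:
    """Ask the Ollama server at base_url which models it has pulled."""
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return model_names(json.load(resp))


if __name__ == "__main__":
    # Base URLs to probe; defaults to the host-side address (an assumption).
    urls = sys.argv[1:] or ["http://localhost:11434"]
    for base in urls:
        try:
            print(base, "->", list_models(base))
        except (urllib.error.URLError, OSError) as exc:
            print(base, "-> unreachable:", exc)
```

Running it on the host with no arguments probes http://localhost:11434. Passing http://host.docker.internal:11434 as an argument from inside a container that has Python available would show what that address serves from the container's point of view. If the two lists differ, n8n is probably talking to a different Ollama instance than the one the model was pulled into.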