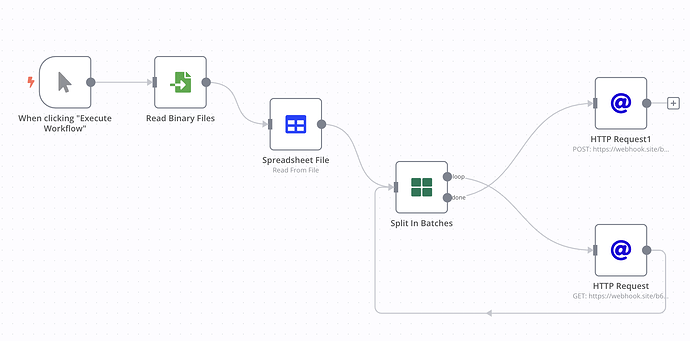

I’m currently working on a workflow to process a large CSV file (~126K rows) in n8n. My goal is to split the file into manageable batches and send each batch to a webhook for further processing. However, I’m running into rate-limit issues when sending the requests.

- n8n version: 0.222.3
- Database: PostgreSQL
- n8n EXECUTIONS_MODE: queue
- Running n8n via: Docker
- Operating system: macOS

n8n

December 2, 2024, 10:12am

2

It looks like your topic is missing some important information. Could you provide the following, if applicable?

- n8n version:
- Database (default: SQLite):
- n8n EXECUTIONS_PROCESS setting (default: own, main):
- Running n8n via (Docker, npm, n8n cloud, desktop app):
- Operating system:

ria

December 10, 2024, 2:48pm

3

Hi @Mostafa_Nabawy

Thanks for posting here and welcome to the community!

To start with, I would recommend updating n8n to our latest version (1.71.1).

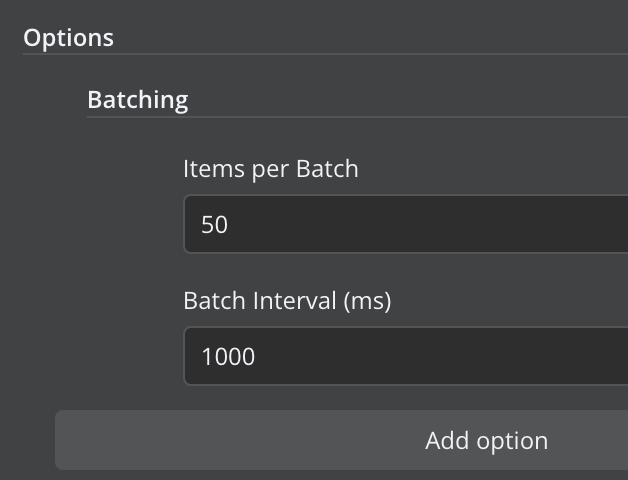

You can use the Batching option of the HTTP Request node directly. It lets you set how many items go into each batch, and it also has a time interval you can customise to slow down your requests and stay under the rate limit.
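If it helps to see the idea outside of n8n, here is a minimal Python sketch of the same pattern: split the rows into fixed-size batches and pause between sends. This is not how the HTTP Request node is implemented; `chunked`, `send_in_batches`, and the `send` callback are hypothetical names used only for illustration, and the batch size and interval are values you would tune to your webhook's rate limit.

```python
import time
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def send_in_batches(
    items: Iterable[T],
    size: int,
    interval_s: float,
    send: Callable[[List[T]], None],
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `send` once per batch, waiting `interval_s` seconds between batches.

    `send` is a placeholder for whatever posts a batch to the webhook.
    Returns the number of batches sent.
    """
    sent = 0
    for batch in chunked(items, size):
        if sent:
            # Pause only between batches, not before the first one.
            sleep(interval_s)
        send(batch)
        sent += 1
    return sent
```

For example, with roughly 126K rows and a batch size of 500, `send` would be called about 252 times, with one pause between each pair of consecutive calls.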

system

March 10, 2025, 2:49pm

4

This topic was automatically closed 90 days after the last reply. New replies are no longer allowed.