[

{

"urn": "7359117118409191425",

"full_urn": "urn:li:activity:7359117118409191425",

"posted_at": {

"date": "2025-08-07 09:04:06",

"relative": "1 week ago • Visible to anyone on or off LinkedIn",

"timestamp": 1754550246813

},

"text": "𝗪𝗮𝗮𝗿𝗼𝗺 𝟳𝟯% 𝘃𝗮𝗻 𝗱𝗲 𝗡𝗲𝗱𝗲𝗿𝗹𝗮𝗻𝗱𝘀𝗲 𝗯𝗲𝗱𝗿𝗶𝗷𝘃𝗲𝗻 𝗔𝗜 𝗮𝗴𝗲𝗻𝘁𝘀 𝘃𝗲𝗿𝗸𝗲𝗲𝗿𝗱 𝗶𝗻𝘇𝗲𝘁 (en hoe het beter kan)\n\nDe meeste organisaties zetten één AI-assistent in voor alles.. een fundamentele denkfout die miljoenen euro's aan potentiële waarde vernietigt.\n\nWat als je in plaats daarvan een team samenstelt van gespecialiseerde AI agents die naadloos samenwerken?\n\n𝗪𝗲𝗹𝗸𝗼𝗺 𝗯𝗶𝗷 𝗔𝗜 𝗮𝗴𝗲𝗻𝘁 𝗼𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮𝘁𝗶𝗲: de strategie die toonaangevende Nederlandse bedrijven implementeren om hun concurrenten te overtreffen.\n\nHet principe is eenvoudig maar krachtig:\n\n• 𝗧𝗿𝗮𝗱𝗶𝘁𝗶𝗼𝗻𝗲𝗹𝗲 𝗮𝗮𝗻𝗽𝗮𝗸: Eén algemeen AI systeem dat worstelt met complexe processen\n\n• 𝗢𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮𝘁𝗶𝗲 𝗮𝗮𝗻𝗽𝗮𝗸: Meerdere gespecialiseerde AI agents die samenwerken onder centrale coördinatie\n\nEen Nederlandse logistieke speler implementeerde dit jaar een orchestratie laag die 5 verschillende AI-agents coördineert:\n- 𝗩𝗼𝗼𝗿𝗿𝗮𝗮𝗱𝘃𝗼𝗼𝗿𝘀𝗽𝗲𝗹𝗹𝗶𝗻𝗴𝘀 𝗮𝗴𝗲𝗻𝘁\n- 𝗥𝗼𝘂𝘁𝗲 𝗼𝗽𝘁𝗶𝗺𝗮𝗹𝗶𝘀𝗮𝘁𝗶𝗲 𝗮𝗴𝗲𝗻𝘁\n- 𝗗𝗼𝗰𝘂𝗺𝗲𝗻𝘁𝗮𝘁𝗶𝗲 𝗮𝗴𝗲𝗻𝘁\n- 𝗞𝗹𝗮𝗻𝘁𝗲𝗻𝘀𝗲𝗿𝘃𝗶𝗰𝗲 𝗮𝗴𝗲𝗻𝘁\n- 𝗙𝗮𝗰𝘁𝘂𝗿𝗲𝗿𝗶𝗻𝗴𝘀 𝗮𝗴𝗲𝗻𝘁\n\nResultaat? 43% snellere doorlooptijden en 35% kostenbesparing binnen zes maanden.\n\n𝗗𝗲 𝗱𝗿𝗶𝗲 𝗸𝗿𝗶𝘁𝗶𝗲𝗸𝗲 𝗯𝗼𝘂𝘄𝘀𝘁𝗲𝗻𝗲𝗻 𝘃𝗼𝗼𝗿 𝘀𝘂𝗰𝗰𝗲𝘀𝘃𝗼𝗹𝗹𝗲 𝗼𝗿𝗰𝗵𝗲𝘀𝘁𝗿𝗮𝘁𝗶𝗲:\n\n1. Taaksplitsing: Verdeel complexe werkstromen in duidelijk afgebakende deeltaken\n\n2. Specialisatie: Train iedere agent om uitmuntend te zijn in één specifiek deelproces\n\n3. Communicatieprotocol: Ontwikkel een gestandaardiseerde manier waarop agents informatie uitwisselen\n\nBedrijven die deze aanpak omarmen, creëren een exponentieel schaalbare automatisering laag die menselijke teams bevrijdt voor strategisch werk.\n\nWelke complexe bedrijfsprocessen zou je transformeren met een georchestreerd AI team?\n\n#ai #aiagent #automation #openai #workflow #emailautomation #aiagents #techstack #efficiency #leadgen #B2BMarketing #LeadGeneration #MarketingAutomation #EmailMarketing #DigitalMarketing #GrowthHacking #MarketingStrategy #MachineLearning #n8n #GenerativeAI #google #hr #sales #cms #dxp #composable #chatgpt #marketing #hrm #finance #klantenservice",

"url": "https://www.linkedin.com/posts/jimdejager_ai-aiagent-automation-activity-7359117118409191425-l42g?utm_source=social_share_send&utm_medium=member_desktop_web&rcm=ACoAAF3IbmsBapYWOUSHvOaplfrho6JFb1hIMXM",

"post_type": "regular",

"author": {

"first_name": "Jim",

"last_name": "de Jager",

"headline": "Building AI Bots & Automations 🏆➤ Consultative Sales | Solution Sales | Presales | Customer Success & Change Management",

"username": "jimdejager",

"profile_url": "https://www.linkedin.com/in/jimdejager?miniProfileUrn=urn%3Ali%3Afsd_profile%3AACoAAAwYQP0BOafQGTJtNJDighWarr4fn-EvQkA",

"profile_picture": "https://media.licdn.com/dms/image/v2/D4E35AQEkQbsQSGOprw/profile-framedphoto-shrink_800_800/B4EZflg2q5GwAk-/0/1751902284898?e=1756033200&v=beta&t=69CPt5S_6Vf7C6pR3ybUSy14_76FH8La3YIS9kWS1vo"

},

"stats": {

"total_reactions": 11,

"like": 11,

"support": 0,

"love": 0,

"insight": 0,

"celebrate": 0,

"funny": 0,

"comments": 0,

"reposts": 0

},

"media": {

"type": "image",

"url": "https://media.licdn.com/dms/image/v2/D4D22AQFRGf6sn7Wq0A/feedshare-shrink_2048_1536/B4DZiDWBBMGkAo-/0/1754550245183?e=1758153600&v=beta&t=zeOQoGg0gzUIMm52ucWY3b4ZbLGTVeTYc6unzeaE2gM",

"images": [

{

"url": "https://media.licdn.com/dms/image/v2/D4D22AQFRGf6sn7Wq0A/feedshare-shrink_2048_1536/B4DZiDWBBMGkAo-/0/1754550245183?e=1758153600&v=beta&t=zeOQoGg0gzUIMm52ucWY3b4ZbLGTVeTYc6unzeaE2gM",

"width": 1024,

"height": 1024

}

]

},

"profile_input": "https://www.linkedin.com/in/jimdejager/"

},

{

"urn": "7358063647660621824",

"full_urn": "urn:li:ugcPost:7358063646935027712",

"posted_at": {

"date": "2025-08-04 11:17:59",

"relative": "1 week ago • Edited • Visible to anyone on or off LinkedIn",

"timestamp": 1754299079814

},

"text": "𝗛𝗼𝘄 𝗜 𝗕𝘂𝗶𝗹𝘁 𝗮𝗻 𝗔𝗜 𝗔𝗴𝗲𝗻𝘁 𝗧𝗵𝗮𝘁 𝗪𝗿𝗶𝘁𝗲𝘀 𝗮𝗻𝗱 𝗣𝗼𝘀𝘁𝘀 𝗩𝗶𝗿𝗮𝗹 𝗟𝗶𝗻𝗸𝗲𝗱𝗜𝗻 𝗖𝗼𝗻𝘁𝗲𝗻𝘁 (full demo!)\n\nMost companies struggle with one recurring challenge: staying visible on LinkedIn without spending hours every day creating content.\n\nMy solution? An AI automation that handles everything from idea to post:\nIt generates new post ideas based on trends and my previous content.\n\nIt designs on-brand visuals automatically.\n\nIt schedules and publishes posts on LinkedIn completely hands off.\n\nThe result: a consistent stream of engaging content without the manual grind of writing and designing.\n\nThe essence? The future of personal branding isn’t about working harder. It’s about automating smarter.\n\n𝗥𝗲𝗮𝗱 𝘁𝗵𝗲 𝗳𝘂𝗹𝗹 𝗮𝗿𝘁𝗶𝗰𝗹𝗲 𝘁𝗼 𝗴𝗲𝘁 𝗱𝗲𝗲𝗽𝗲𝗿 𝗶𝗻𝘀𝗶𝗴𝗵𝘁𝘀 𝗶𝗻𝘁𝗼 𝘁𝗵𝗲 𝘄𝗼𝗿𝗸𝗳𝗹𝗼𝘄.\n𝗪𝗮𝘁𝗰𝗵 𝘁𝗵𝗲 𝗱𝗲𝗺𝗼 𝗳𝗼𝗿 𝗮 𝗹𝗶𝘃𝗲 𝗲𝘅𝗮𝗺𝗽𝗹𝗲.\n\n#ai #aiagent #automation #gmail #openai #workflow #emailautomation #aiagents #techstack #efficiency #coldemail #leadgen #B2BMarketing #LeadGeneration #MarketingAutomation #EmailMarketing #MarketingOps #DigitalMarketing #GrowthHacking #MarketingStrategy #MachineLearning #n8n #GenerativeAI #google #sales #cms #dxp #composable #chatgpt #marketing #blog #content #viralpost #blogcontent",

"url": "https://www.linkedin.com/posts/jimdejager_ai-aiagent-automation-activity-7358063647660621824-gqY5?utm_source=social_share_send&utm_medium=member_desktop_web&rcm=ACoAAF3IbmsBapYWOUSHvOaplfrho6JFb1hIMXM",

"post_type": "regular",

"author": {

"first_name": "Jim",

"last_name": "de Jager",

"headline": "Building AI Bots & Automations 🏆➤ Consultative Sales | Solution Sales | Presales | Customer Success & Change Management",

"username": "jimdejager",

"profile_url": "https://www.linkedin.com/in/jimdejager?miniProfileUrn=urn%3Ali%3Afsd_profile%3AACoAAAwYQP0BOafQGTJtNJDighWarr4fn-EvQkA",

"profile_picture": "https://media.licdn.com/dms/image/v2/D4E35AQEkQbsQSGOprw/profile-framedphoto-shrink_800_800/B4EZflg2q5GwAk-/0/1751902284898?e=1756033200&v=beta&t=69CPt5S_6Vf7C6pR3ybUSy14_76FH8La3YIS9kWS1vo"

},

"stats": {

"total_reactions": 8,

"like": 7,

"support": 0,

"love": 0,

"insight": 1,

"celebrate": 0,

"funny": 0,

"comments": 1,

"reposts": 0

},

"article": {

"url": "https://www.linkedin.com/pulse/how-i-built-ai-agent-creates-posts-viral-linkedin-content-de-jager-ovfke?trackingId=bQw2NOj7eZHhPOD0xs59cg%3D%3D",

"title": "How I built an AI Agent that creates and posts viral LinkedIn content",

"subtitle": "Jim de Jager"

},

"profile_input": "https://www.linkedin.com/in/jimdejager/"

},

{

"urn": "7357663155218440192",

"full_urn": "urn:li:activity:7357663155218440192",

"posted_at": {

"date": "2025-08-03 08:46:34",

"relative": "2 weeks ago • Visible to anyone on or off LinkedIn",

"timestamp": 1754203594975

},

"text": "𝗔𝗹𝗯𝗲𝗿𝘁 𝗛𝗲𝗶𝗷𝗻 𝘃𝗲𝗿𝘀𝗽𝗶𝗹𝘁 𝟯𝟴% 𝗺𝗶𝗻𝗱𝗲𝗿 𝗺𝗲𝘁 𝗔𝗜 (en hoe jij hetzelfde kan bereiken)\n\nJe staat voor een halfvolle koelkast maar zonder kookinspiratie?\n\nJe bent niet de enige. Dit alledaagse dilemma leidt jaarlijks tot €2,5 miljard aan voedselverspilling in Nederland.\n\nAlbert Heijn heeft dit probleem stilletjes aangepakt met behulp van hun AI innovatie: 𝗦𝗰𝗮𝗻 & 𝗞𝗼𝗼𝗸.\n\n𝗪𝗮𝘁 𝗵𝗲𝗯𝗯𝗲𝗻 𝘇𝗲 𝗴𝗲𝗱𝗮𝗮𝗻?\n- De oprichting van 𝗚𝗲𝗻 𝗔𝗜 𝗟𝗮𝗯𝘀 als interne AI-start-up\n- De ontwikkeling van technologie die foto's van ingrediënten analyseert\n- De integratie van 𝗔𝗜 𝗮𝘀𝘀𝗶𝘀𝘁𝗲𝗻𝘁 𝗦𝘁𝗲𝗶𝗷𝗻 in de app om slimmer boodschappen te doen\n\n𝗪𝗮𝘁 𝗹𝗲𝘃𝗲𝗿𝘁 𝗵𝗲𝘁 𝗼𝗽?\n- 𝟯𝟴% 𝗺𝗶𝗻𝗱𝗲𝗿 𝘃𝗼𝗲𝗱𝘀𝗲𝗹𝘃𝗲𝗿𝘀𝗽𝗶𝗹𝗹𝗶𝗻𝗴 bij huishoudens die de functie gebruiken\n- 𝟮𝟮% 𝗵𝗼𝗴𝗲𝗿𝗲 𝗸𝗹𝗮𝗻𝘁𝘁𝗲𝘃𝗿𝗲𝗱𝗲𝗻𝗵𝗲𝗶𝗱\n- Een duidelijke stap richting hun doel: 𝟱𝟬% 𝗺𝗶𝗻𝗱𝗲𝗿 𝘃𝗲𝗿𝘀𝗽𝗶𝗹𝗹𝗶𝗻𝗴 𝗶𝗻 𝟮𝟬𝟯𝟬\n\n𝗪𝗮𝘁 𝗸𝗮𝗻 𝗷𝗶𝗷 𝗵𝗶𝗲𝗿𝘃𝗮𝗻 𝗹𝗲𝗿𝗲𝗻?\nVeel automatiseringsprojecten mislukken vanwege de omvang of vaagheid. Albert Heijn koos een scherp omlijnd meetbaar probleem: voedselverspilling als gevolg van gebrek aan maaltijdplanning.\n\nZe pasten drie essentiële AI principes toe die elk bedrijf kan benutten:\n\n𝗣𝗿𝗼𝗯𝗹𝗲𝗲𝗺𝗴𝗲𝗿𝗶𝗰𝗵𝘁𝗲 𝗶𝗻𝘇𝗲𝘁 𝘃𝗮𝗻 𝗔𝗜\nRicht je op een concreet en herkenbaar pijnpunt en niet op 'algemene efficiëntie'.\n\n𝗚𝗲𝗯𝗿𝘂𝗶𝗸𝘀𝗴𝗲𝗺𝗮𝗸 𝘀𝘁𝗮𝗮𝘁 𝘃𝗼𝗼𝗿𝗼𝗽\nLaat gebruikers simpelweg een foto maken zonder ingewikkelde interfaces.\n\n𝗦𝗹𝘂𝗶𝘁 𝗮𝗮𝗻 𝗼𝗽 𝗯𝗲𝘀𝘁𝗮𝗮𝗻𝗱𝗲 𝗴𝗲𝘄𝗼𝗼𝗻𝘁𝗲𝗻\nIntegreer AI in kanalen en tools die klanten al gebruiken.\n\n𝗪𝗲𝗹𝗸 𝘀𝗽𝗲𝗰𝗶𝗳𝗶𝗲𝗸 𝗽𝗿𝗼𝗯𝗹𝗲𝗲𝗺 𝗸𝗮𝗻 𝗷𝗼𝘂𝘄 𝗼𝗿𝗴𝗮𝗻𝗶𝘀𝗮𝘁𝗶𝗲 𝗼𝗽𝗹𝗼𝘀𝘀𝗲𝗻 𝗺𝗲𝘁 𝗱𝗼𝗲𝗹𝗴𝗲𝗿𝗶𝗰𝗵𝘁𝗲 𝗔𝗜?\n\nDeel het hieronder!\n\n#ai #aiagent #automation #gmail #openai #workflow #emailautomation #aiagents #techstack #efficiency #coldemail #leadgen #B2BMarketing #LeadGeneration #MarketingAutomation #EmailMarketing #MarketingOps #DigitalMarketing #GrowthHacking #MarketingStrategy #MachineLearning #GenerativeAI #google #sales #cms #dxp #composable #chatgpt #AlbertHeijn #supermarkt #voedselverspilling #FMCG #retail",

"url": "https://www.linkedin.com/posts/jimdejager_ai-aiagent-automation-activity-7357663155218440192-Alw8?utm_source=social_share_send&utm_medium=member_desktop_web&rcm=ACoAAF3IbmsBapYWOUSHvOaplfrho6JFb1hIMXM",

"post_type": "regular",

"author": {

"first_name": "Jim",

"last_name": "de Jager",

"headline": "Building AI Bots & Automations 🏆➤ Consultative Sales | Solution Sales | Presales | Customer Success & Change Management",

"username": "jimdejager",

"profile_url": "https://www.linkedin.com/in/jimdejager?miniProfileUrn=urn%3Ali%3Afsd_profile%3AACoAAAwYQP0BOafQGTJtNJDighWarr4fn-EvQkA",

"profile_picture": "https://media.licdn.com/dms/image/v2/D4E35AQEkQbsQSGOprw/profile-framedphoto-shrink_800_800/B4EZflg2q5GwAk-/0/1751902284898?e=1756033200&v=beta&t=69CPt5S_6Vf7C6pR3ybUSy14_76FH8La3YIS9kWS1vo"

},

"stats": {

"total_reactions": 3,

"like": 3,

"support": 0,

"love": 0,

"insight": 0,

"celebrate": 0,

"funny": 0,

"comments": 1,

"reposts": 0

},

"media": {

"type": "image",

"url": "https://media.licdn.com/dms/image/v2/D4E22AQFnFpciaUO1xw/feedshare-shrink_1280/B4EZhurpbfHEAk-/0/1754203593500?e=1758153600&v=beta&t=MzHz0XEbnK2tnsZYaK6P9eVeenF1x5QZl18BkOsziRM",

"images": [

{

"url": "https://media.licdn.com/dms/image/v2/D4E22AQFnFpciaUO1xw/feedshare-shrink_1280/B4EZhurpbfHEAk-/0/1754203593500?e=1758153600&v=beta&t=MzHz0XEbnK2tnsZYaK6P9eVeenF1x5QZl18BkOsziRM",

"width": 1024,

"height": 1536

}

]

},

"profile_input": "https://www.linkedin.com/in/jimdejager/"

},

{

"timestamp": "2025-08-17T10:25:04.122Z",

"summary": {

"totalProfiles": 1,

"successfulProfiles": 1,

"failedProfiles": 0,

"totalPostsExtracted": 3,

"failedUsernames": []

},

"results": [

{

"username": "https://www.linkedin.com/in/jimdejager/",

"success": true,

"postsCount": 3,

"responseStatus": 200

}

]

}

]

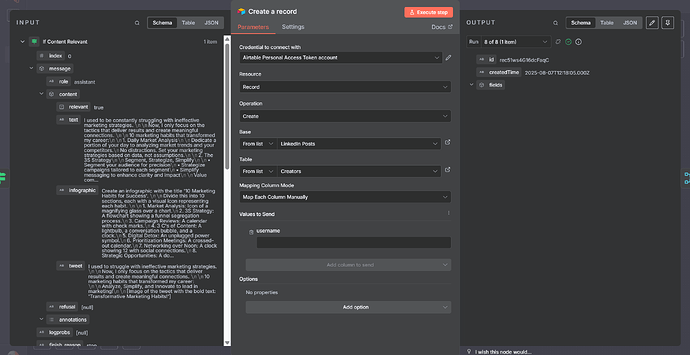

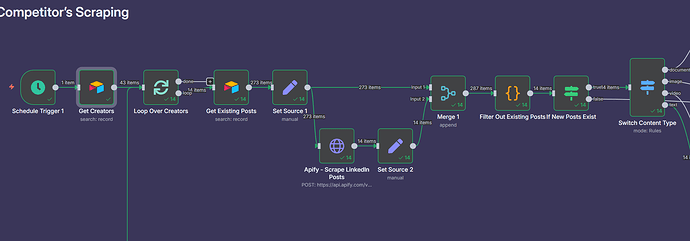

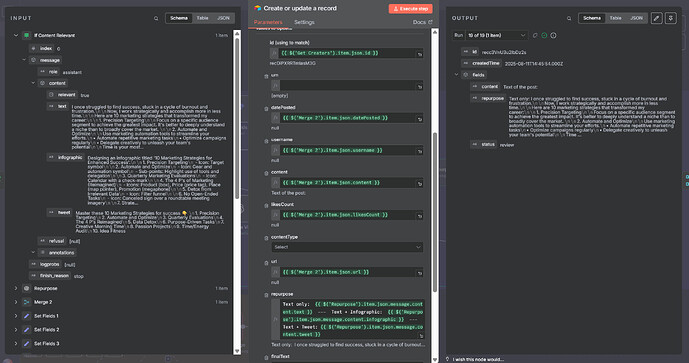

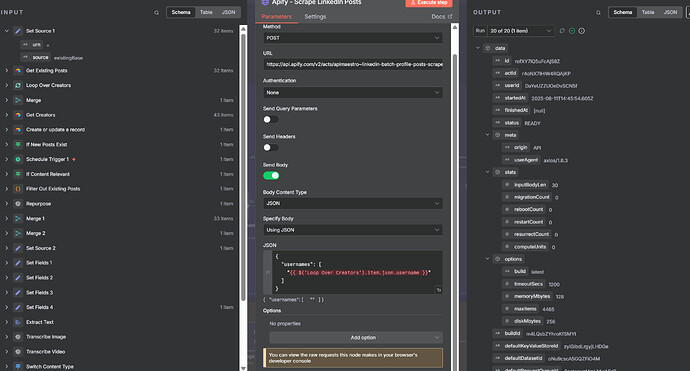

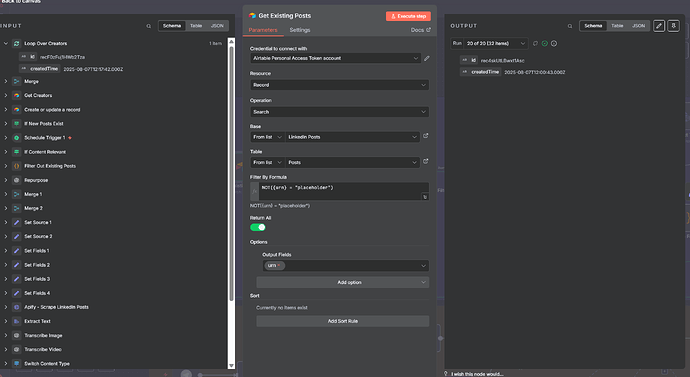

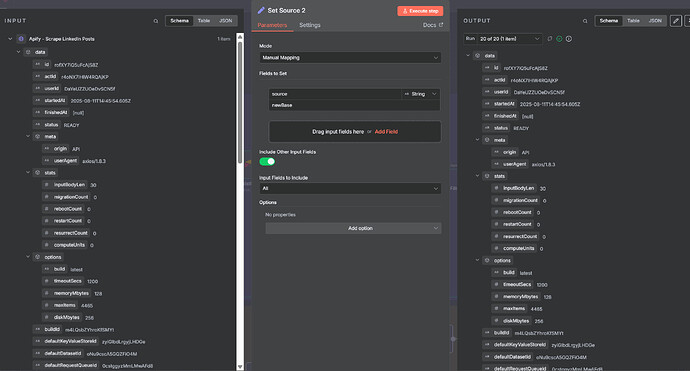

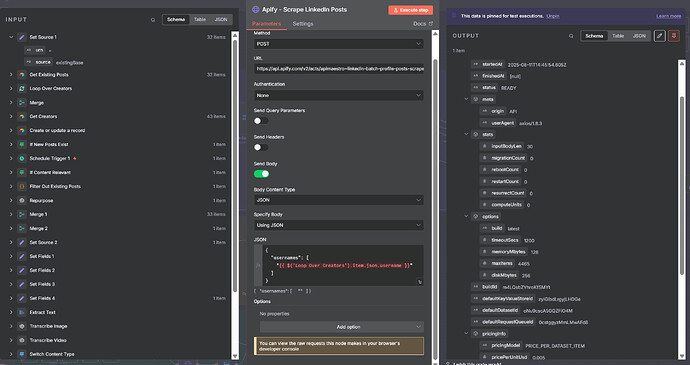

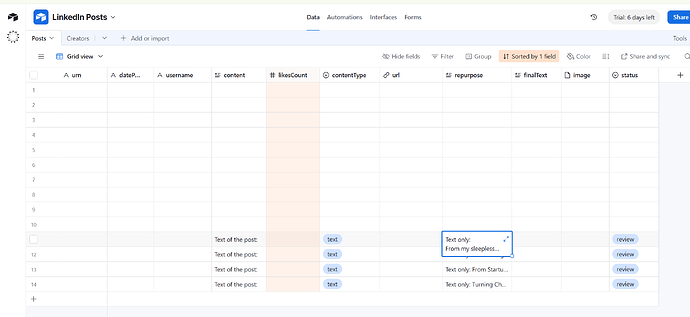

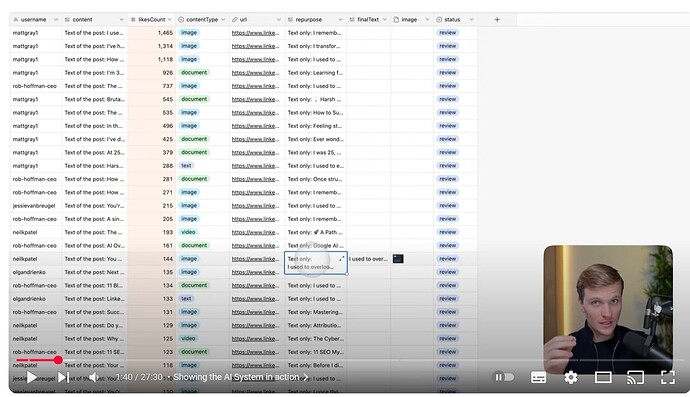

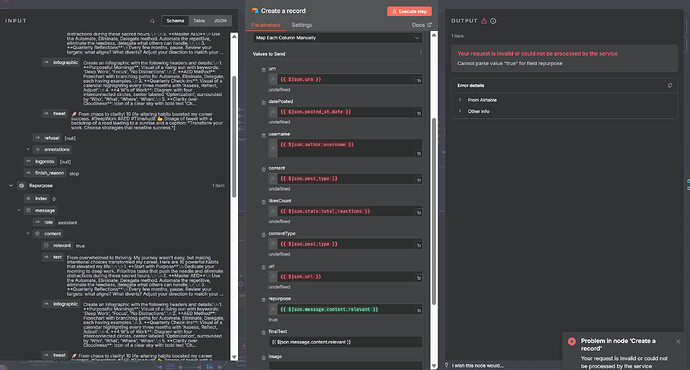

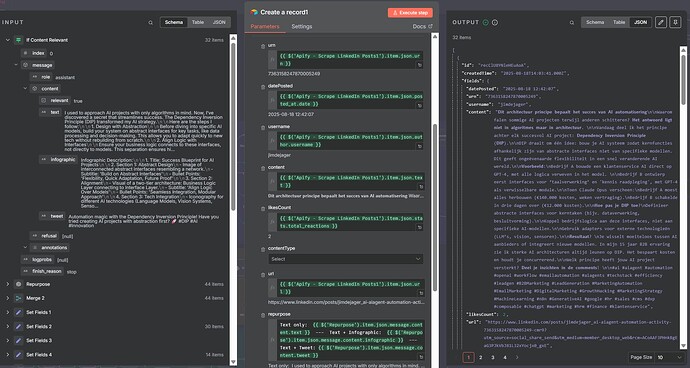

Here is the log from the successful run in Apify.

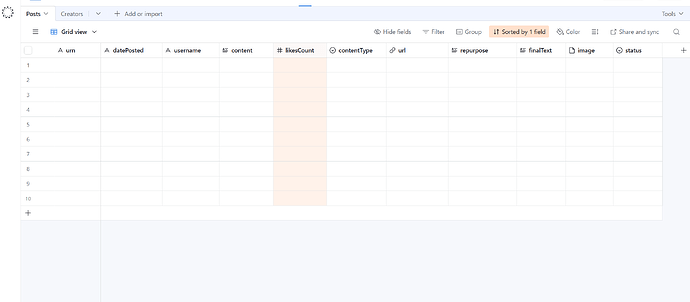

Why are the values still incorrect in the Airtable node's "Create a record" operation?
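
For reference, the pasted output is one array holding three post objects followed by a fourth object that is a run summary (`timestamp`, `summary`, `results`) with no `urn`, `text`, `author`, or `stats`. The values also sit in nested objects such as `posted_at.date` and `stats.total_reactions`. This may or may not be what causes the wrong values, but a minimal sketch of separating the posts from the summary and flattening the nested fields could look like the following. The column names (`"URN"`, `"Posted at"`, and so on) and the helper names are hypothetical, and the sample is a trimmed copy of the data above.

```python
import json
from typing import Any


def is_post(item: dict[str, Any]) -> bool:
    """A post object carries 'urn' and 'text'; the run summary does not."""
    return "urn" in item and "text" in item


def flatten_post(post: dict[str, Any]) -> dict[str, Any]:
    """Flatten nested fields into a single-level row.

    The keys of the returned dict are hypothetical column names.
    """
    author = post.get("author", {})
    stats = post.get("stats", {})
    posted_at = post.get("posted_at", {})
    full_name = f'{author.get("first_name", "")} {author.get("last_name", "")}'
    return {
        "URN": post["urn"],
        "Posted at": posted_at.get("date"),
        "Author": full_name.strip(),
        "Text": post["text"],
        "URL": post.get("url"),
        "Reactions": stats.get("total_reactions", 0),
        "Comments": stats.get("comments", 0),
    }


def posts_to_rows(items: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Keep only post objects and flatten each one into a row."""
    return [flatten_post(item) for item in items if is_post(item)]


# Trimmed sample: one post plus the trailing run summary.
sample = json.loads("""
[
  {
    "urn": "7359117118409191425",
    "posted_at": {"date": "2025-08-07 09:04:06"},
    "text": "(post text trimmed for the example)",
    "author": {"first_name": "Jim", "last_name": "de Jager"},
    "stats": {"total_reactions": 11, "comments": 0}
  },
  {
    "timestamp": "2025-08-17T10:25:04.122Z",
    "summary": {"totalPostsExtracted": 3},
    "results": []
  }
]
""")

rows = posts_to_rows(sample)
for row in rows:
    print(row["URN"], row["Posted at"], row["Author"], row["Reactions"])
```

With this shape, the summary object never reaches the record-creation step, and each row exposes the nested values under flat keys, which makes it easier to check which source field ends up in which column.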