I’m encountering an error during the second-to-last step of my workflow. The workflow runs fine until it reaches this step. After the error occurs, if I only run the last two nodes after gathering all the data, everything works fine. The issue only happens when running the entire workflow, causing it to stop at the error.

Please share your workflow

Share the output returned by the last node

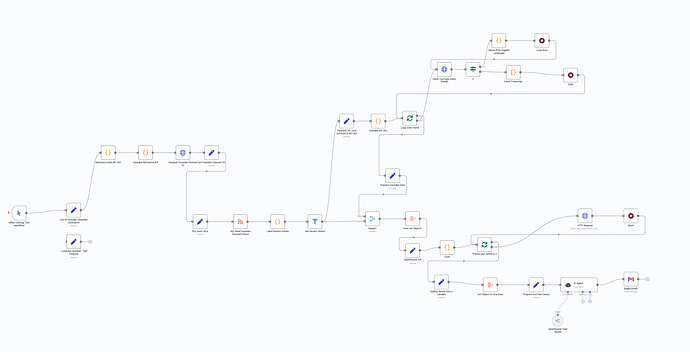

This workflow fetches the transcripts of 30+ videos and summarizes each video with an OpenRouter request. Next, it compiles all the video summaries into one and sends that to a second OpenRouter request to find the words that are mentioned repeatedly. The last step sends the result as a Gmail message.

Information on your n8n setup

- **n8n version:** 1.82.3

- **Database (default: SQLite):**

- **n8n EXECUTIONS_PROCESS setting (default: own, main):**

- **Running n8n via (Docker, npm, n8n cloud, desktop app):** chrome browser

- **Operating system:** win10

Hey @Marc_Justin_Rait,

It might not be a memory issue, but something else — that error message is generic and simply means n8n has crashed.

That said, since you’re processing 30+ videos, memory is still the most likely cause.

To solve this, you have a few options:

- Increase your server’s memory.

- Process fewer items per workflow run.

- Use Sub-workflows (I’d say this is the best option for your case).

With Sub-workflows, you can send each video transcript to be processed by a separate workflow. This way, only one video is processed at a time, drastically reducing memory usage.
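To illustrate why this helps, here is a rough sketch of the idea in plain TypeScript. It is not n8n code: `summarizeVideo`, `summarizeAll`, and `fetchTranscript` are hypothetical names, and the "summary" is just a truncated transcript standing in for the OpenRouter request. In a real workflow these calls would be asynchronous; they are synchronous here only to keep the sketch short. The point is that each transcript is loaded, summarized, and dropped before the next one starts, so only the small summaries accumulate.

```typescript
// Hypothetical shape for illustration; not part of the n8n API.
interface Summary {
  id: string;
  summary: string;
}

// Stand-in for the per-video sub-workflow. In the real workflow this
// would be the OpenRouter request; here it only truncates the text.
function summarizeVideo(id: string, transcript: string): Summary {
  return { id, summary: transcript.slice(0, 40) };
}

// Handle one video at a time and keep only its small summary.
// The full transcript goes out of scope at the end of each iteration,
// so at most one transcript is held in memory at any moment.
function summarizeAll(
  ids: string[],
  fetchTranscript: (id: string) => string,
): Summary[] {
  const summaries: Summary[] = [];
  for (const id of ids) {
    const transcript = fetchTranscript(id);
    summaries.push(summarizeVideo(id, transcript));
  }
  return summaries;
}
```

The same principle applies to the sub-workflow itself: if it returns only the summary rather than the whole transcript, the parent workflow has much less data to hold.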

If my reply answers your question please remember to mark it as the solution

Sorry for not getting back sooner. The sub-workflows almost solved the problem, but the error still appears, now only at the last part.

I don’t know what else to try. Please help me with this one. I’ve attached the image.

That’s great, @Marc_Justin_Rait

Congratulations on the improvement

Can you show me a screenshot of the error message?

I can’t know what is happening by just seeing an error icon.

Hello, the error message is this:

"Execution stopped at this node

n8n may have run out of memory while running this execution. More context and tips on how to avoid this in the docs"

Same problem, then. But on the server side hahahaha

It may be because the main workflow is still receiving the output JSON from every sub-workflow run. That JSON data accumulates and consumes your RAM.

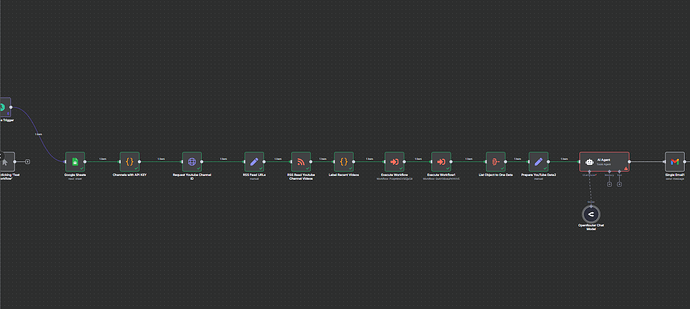

To free up memory you would have to split your items into batches, using the Loop node.

The subworkflow would then execute only some items (let’s say 50 items) at once. And then get the next 50… and so on.
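As a rough sketch of what batching means, here is the same idea in plain TypeScript. It does not use any n8n API: `toBatches` is a hypothetical helper, and 50 is just the example batch size from above. In n8n the Loop node does this splitting for you.

```typescript
// Split a list of items into consecutive batches of at most `batchSize`.
// Hypothetical helper for illustration only; not part of n8n.
function toBatches<T>(items: T[], batchSize: number): T[][] {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError("batchSize must be a positive integer");
  }
  const batches: T[][] = [];
  for (let start = 0; start < items.length; start += batchSize) {
    // slice() stops at the end of the array, so the last batch may be smaller.
    batches.push(items.slice(start, start + batchSize));
  }
  return batches;
}

// Example: 120 items in batches of 50.
const exampleItems = Array.from({ length: 120 }, (_, i) => ({ index: i }));
const exampleBatches = toBatches(exampleItems, 50);
```

Each batch is handed to the sub-workflow on its own, so the data for one batch can be released before the next batch is loaded.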

Like this: