Setup

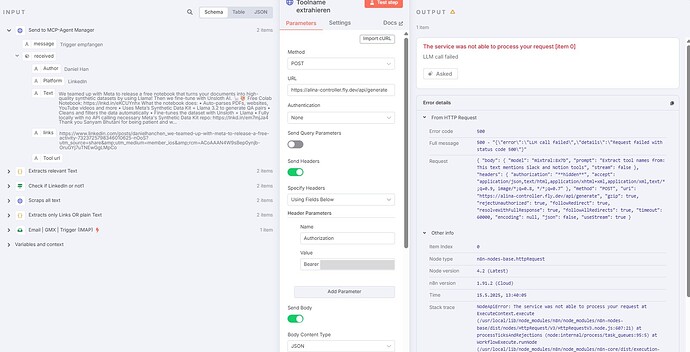

- External API: alina-controller.fly.dev/api/generate (custom LLM proxy)

- Config: POST, Bearer token, JSON body, timeout 60 s, retry 3x

- Node: HTTP Request v4.2 on n8n Cloud 1.91.2
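One way to check whether the problem lies in n8n or in the request itself is to reproduce the same configuration in a small script. Below is a minimal Python sketch that uses only the standard library. The helper names `build_request` and `send_with_retry` are hypothetical, the `https://` scheme is assumed, and "retry 3x" is read as up to three attempts in total, retrying only on 5xx responses.

```python
import json
import time
import urllib.error
import urllib.request
from typing import Callable


def build_request(url: str, token: str, payload: dict) -> urllib.request.Request:
    """Build a POST request with a Bearer token and a JSON body (hypothetical helper)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


def send_with_retry(send: Callable[[], bytes], attempts: int = 3, delay_s: float = 1.0) -> bytes:
    """Call `send` up to `attempts` times in total, retrying only on HTTP 5xx errors."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return send()
        except urllib.error.HTTPError as err:
            # Client errors (4xx) and the last failed attempt are re-raised unchanged.
            if err.code < 500 or attempt == attempts:
                raise
            time.sleep(delay_s)
    raise AssertionError("unreachable")


# Example usage (assumes an https:// URL and a real token; not run here):
# req = build_request(
#     "https://alina-controller.fly.dev/api/generate",
#     "YOUR_TOKEN",
#     {"model": "mixtral:8x7b", "prompt": "Extract tool names from: Test text", "stream": False},
# )
# raw = send_with_retry(lambda: urllib.request.urlopen(req, timeout=60).read())
```

If this script succeeds where the node fails, the difference is most likely in what the node actually sends, not in the controller.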

What works

- PowerShell: API calls succeed with the same parameters

- Services: both the controller and Ollama services are running

- Authorization: Bearer token is correct (verified via PowerShell)

- Request format: JSON body validated

What does not work

- n8n node: consistently returns HTTP 500

- Error Message:

"LLM call failed", "Request failed with status code 500"
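A 500 status on its own says little; the response body and the controller's logs usually hold the real reason. "Request failed with status code 500" looks like a generic HTTP-client message, while "LLM call failed" appears to come from the controller itself (an assumption, since the controller code is not shown). The Python sketch below shows how to capture the full error body when reproducing the call outside n8n. `describe_http_error` is a hypothetical helper, and the JSON shape used in any test data is illustrative only.

```python
import json
import urllib.error
from typing import Any, Dict


def describe_http_error(err: urllib.error.HTTPError) -> Dict[str, Any]:
    """Return the status code and response body of an HTTP error, parsed as JSON when possible."""
    raw = err.read()
    text = raw.decode("utf-8", errors="replace")
    try:
        body: Any = json.loads(text)
    except json.JSONDecodeError:
        # Not JSON (for example an HTML error page or an empty body); keep the raw text.
        body = text
    return {"status": err.code, "body": body}


# Example usage (not run here; `req` is any urllib.request.Request for the failing call):
# try:
#     urllib.request.urlopen(req, timeout=60)
# except urllib.error.HTTPError as err:
#     print(describe_http_error(err))
```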

Debug Steps Tried

- Timeout/retry: increased the timeout to 60 s and set 3 retries

- Service warm-up: started the services manually via PowerShell first

- Parameter verification: all parameters match the working PowerShell request

- Simple test: hardcoded prompt instead of dynamic data
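"All parameters match" is easiest to confirm by capturing both requests as they are actually sent (for example, by pointing PowerShell and the n8n node at the same request-inspection endpoint) and diffing them. The Python sketch below compares header sets case-insensitively and top-level JSON body fields by value and type; the function names are hypothetical and the capture step is left out. Type matters here: a string "false" and a boolean false look alike in a log but are different JSON values.

```python
import json
from typing import Any, Dict, List, Optional, Tuple


def diff_headers(
    working: Dict[str, str], failing: Dict[str, str]
) -> List[Tuple[str, Optional[str], Optional[str]]]:
    """Return (name, working_value, failing_value) for every header that differs, ignoring name case."""
    left = {k.lower(): v for k, v in working.items()}
    right = {k.lower(): v for k, v in failing.items()}
    return [
        (name, left.get(name), right.get(name))
        for name in sorted(left.keys() | right.keys())
        if left.get(name) != right.get(name)
    ]


def diff_json_bodies(working: str, failing: str) -> List[Tuple[str, Any, Any]]:
    """Return (key, working_value, failing_value) for top-level JSON fields that differ.

    A key missing on one side is reported as None.
    """
    left = json.loads(working)
    right = json.loads(failing)
    diffs = []
    for key in sorted(left.keys() | right.keys()):
        a, b = left.get(key), right.get(key)
        # The type check also catches pairs such as False vs 0, which Python treats as equal.
        if a != b or type(a) is not type(b):
            diffs.append((key, a, b))
    return diffs
```

Only top-level fields are compared; nested objects would need a recursive version.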

Current Status

PowerShell API calls work with identical parameters, but the n8n node fails every time. I'm looking for guidance on how n8n's request handling differs from PowerShell's.

Request body that works in PowerShell but fails in n8n:

```json
{
  "model": "mixtral:8x7b",
  "prompt": "Extract tool names from: Test text",
  "stream": false
}
```
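A quick way to rule out a malformed body is to parse the exact text pasted into the node. Forums and word processors often replace straight quotes with typographic ones (“ ”), which makes JSON invalid; whether that happened in the node is an assumption worth checking. The Python sketch below (hypothetical helpers `check_json` and `straighten_quotes`) reports parse errors and converts typographic quotes back to ASCII.

```python
import json

# Typographic quote characters mapped to their ASCII equivalents.
_QUOTE_MAP = str.maketrans({"\u201c": '"', "\u201d": '"', "\u2018": "'", "\u2019": "'"})


def check_json(text: str) -> str:
    """Return 'ok' if `text` parses as JSON, otherwise a short error description."""
    try:
        json.loads(text)
        return "ok"
    except json.JSONDecodeError as err:
        return f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"


def straighten_quotes(text: str) -> str:
    """Replace typographic double and single quotes with straight ASCII quotes."""
    return text.translate(_QUOTE_MAP)


# Example usage:
# print(check_json(body_text))                     # body_text: the text pasted into the node
# print(check_json(straighten_quotes(body_text)))
```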

Thanks for your support