Describe the problem/error/question

I have a Hostinger VPS (KVM 2 plan) with the following configuration:

- 2 vCPU cores
- 8 GB RAM
- 100 GB NVMe disk space
- 8 TB bandwidth

What is the error message (if any)?

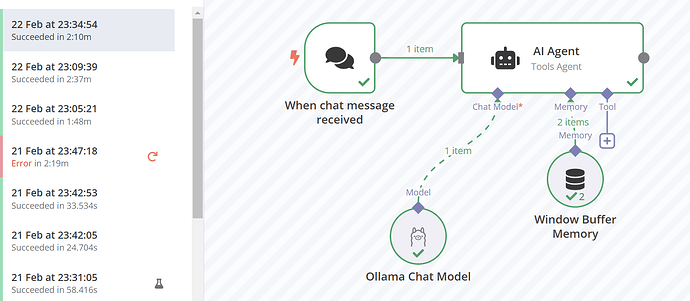

My last three attempts used three different models: Llama, DeepSeek and Mistral.

Each one took between 1m30 and 2m40 to reply.
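To see where those 1m30 to 2m40 go, one option is to call Ollama directly, outside n8n, and read the timing fields of its non-streaming `/api/generate` reply (`total_duration`, `load_duration`, `prompt_eval_duration`, `eval_duration`, `eval_count`; durations are in nanoseconds). This is a minimal sketch, assuming Ollama is reachable at its default `http://localhost:11434`; the model tag in the commented example is hypothetical. A large `load_s` would suggest the model is being loaded for each request, while a low `tokens_per_s` would suggest generation speed on the CPU is the bottleneck.

```python
import json
import urllib.request

# Assumption: Ollama listens on its default address; adjust if the n8n
# workflow points at a different host or port.
OLLAMA_URL = "http://localhost:11434/api/generate"
NS_PER_S = 1e9  # Ollama reports durations in nanoseconds


def build_payload(model: str, prompt: str) -> dict:
    """Non-streaming request, so a single JSON reply carries all timing fields."""
    return {"model": model, "prompt": prompt, "stream": False}


def timing_breakdown(reply: dict) -> dict:
    """Split the total reply time into model load, prompt processing and generation."""
    eval_s = reply.get("eval_duration", 0) / NS_PER_S
    return {
        "total_s": reply.get("total_duration", 0) / NS_PER_S,
        "load_s": reply.get("load_duration", 0) / NS_PER_S,
        "prompt_eval_s": reply.get("prompt_eval_duration", 0) / NS_PER_S,
        "eval_s": eval_s,
        # Generated tokens per second of generation time.
        "tokens_per_s": reply.get("eval_count", 0) / eval_s if eval_s else 0.0,
    }


def time_model(model: str, prompt: str) -> dict:
    """Send one prompt to Ollama and return the timing breakdown."""
    body = json.dumps(build_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    # Generous timeout, since replies here took up to about 2m40.
    with urllib.request.urlopen(request, timeout=600) as response:
        return timing_breakdown(json.load(response))


# Example (requires a running Ollama server; "llama3.2" is a hypothetical tag,
# use one listed by `ollama list`):
# print(time_model("llama3.2", "Reply with one short sentence."))
```

Running it once per model gives comparable numbers for Llama, DeepSeek and Mistral without the agent's own prompt and tool overhead.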

root@srv999:~# docker stats --no-stream | grep ollama

d73ca1352b23 ollama 104.76% 2.416GiB / 7.755GiB 31.15% 208kB / 395kB 61.1GB / 1.37GB 16

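The `docker stats` line is easier to read with its columns labelled. Here is a minimal sketch that parses the row quoted above, assuming the default `docker stats` column layout (CONTAINER ID, NAME, CPU %, MEM USAGE / LIMIT, MEM %, NET I/O, BLOCK I/O, PIDS). `docker stats` counts 100% per fully busy core, so on 2 vCPUs the ceiling is 200%; the `vcpus=2` argument comes from the plan described above. BLOCK I/O is, as far as I understand it, a running total since the container started rather than a rate.

```python
import re

# Default column order of `docker stats --no-stream`:
# CONTAINER ID  NAME  CPU %  MEM USAGE / LIMIT  MEM %  NET I/O  BLOCK I/O  PIDS
LINE = ("d73ca1352b23 ollama 104.76% 2.416GiB / 7.755GiB 31.15% "
        "208kB / 395kB 61.1GB / 1.37GB 16")

# Memory columns use binary units (KiB, MiB, GiB); network and block I/O
# columns use decimal units (kB, MB, GB).
UNITS = {
    "B": 1,
    "kB": 1e3, "MB": 1e6, "GB": 1e9, "TB": 1e12,
    "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40,
}


def to_bytes(value: str) -> float:
    """Convert a size such as '2.416GiB' or '208kB' into bytes."""
    match = re.fullmatch(r"([\d.]+)([A-Za-z]+)", value)
    if match is None or match.group(2) not in UNITS:
        raise ValueError(f"unrecognised size: {value!r}")
    return float(match.group(1)) * UNITS[match.group(2)]


def parse_stats(line: str, vcpus: int) -> dict:
    """Turn one whitespace-separated `docker stats` row into readable numbers."""
    f = line.split()
    # f = [id, name, cpu%, mem_used, "/", mem_limit, mem%,
    #      net_in, "/", net_out, block_read, "/", block_write, pids]
    cpu = float(f[2].rstrip("%"))
    return {
        "name": f[1],
        # docker stats counts 100% per fully busy core ...
        "cores_busy": cpu / 100,
        # ... so the ceiling on this host is 100% * vcpus.
        "cpu_share_of_host": cpu / (100 * vcpus),
        "mem_fraction": to_bytes(f[3]) / to_bytes(f[5]),
        "block_read_bytes": to_bytes(f[10]),
        "pids": int(f[13]),
    }


print(parse_stats(LINE, vcpus=2))
```

For the row in this post, `cores_busy` is about 1.05 and `cpu_share_of_host` about 0.52, so in that single sample Ollama was using roughly one of the two vCPUs and about 31% of the memory limit.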

I created swap space, but I'm not sure it's a good idea, so please let me know about that as well.

root@srv999:~# free -h

total used free shared buff/cache available

Mem: 7.8Gi 1.2Gi 1.4Gi 6.8Mi 5.5Gi 6.6Gi

Swap: 2.0Gi 1.0Gi 1.0Gi

- Swap total: 2.0 GiB
- Swap used: 1.0 GiB
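In `free`, the `available` column is the kernel's estimate of how much memory can be given to new applications without swapping. This minimal sketch parses the `free -h` output quoted above (binary `Ki`/`Mi`/`Gi` suffixes) and computes the share of RAM still available and the share of swap in use. It works on a single snapshot, so it cannot show when the swap was written.

```python
# `free -h` output quoted in the post.
FREE_OUTPUT = """\
total used free shared buff/cache available
Mem: 7.8Gi 1.2Gi 1.4Gi 6.8Mi 5.5Gi 6.6Gi
Swap: 2.0Gi 1.0Gi 1.0Gi
"""

# free -h uses binary suffixes (1Gi = 2**30 bytes); zero is printed as "0B".
SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(value: str) -> float:
    """Convert '7.8Gi' or '0B' into bytes."""
    suffix = value[-2:]
    if suffix in SUFFIXES:
        return float(value[:-2]) * SUFFIXES[suffix]
    return float(value.rstrip("B"))


def parse_free(text: str) -> dict:
    """Map each row ('mem', 'swap') to {column name: bytes}."""
    header, *rows = [line.split() for line in text.strip().splitlines()]
    result = {}
    for row in rows:
        name = row[0].rstrip(":").lower()
        # zip() stops early for the Swap row, which only has total/used/free.
        result[name] = dict(zip(header, map(to_bytes, row[1:])))
    return result


def summarize(text: str) -> dict:
    """Share of RAM the kernel reports as available, and share of swap in use."""
    info = parse_free(text)
    mem, swap = info["mem"], info["swap"]
    return {
        "ram_available_fraction": mem["available"] / mem["total"],
        "swap_used_fraction": swap["used"] / swap["total"] if swap["total"] else 0.0,
    }


print(summarize(FREE_OUTPUT))
```

With the numbers from the post, this reports about 85% of RAM available and 50% of swap in use at the moment `free -h` was run.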

Could you help me optimize my agent, please?


Information on your n8n setup

- **n8n version:** 1.77.3
- **Database (default: SQLite):** postgresdb
- **n8n EXECUTIONS_PROCESS setting:** main
- **Running n8n via:** Docker
- **Operating system:** Ubuntu 24.04.2 LTS
- **Docker:** version 27.5.1, build 9f9e405
- **Docker Compose:** version v2.32.4
- **git:** version 2.43.0