Describe the problem/error/question

I am not able to connect to my local Ollama and Qdrant DB, and I also lost all my workflows on self-hosted n8n after an upgrade. I deployed the self-hosted kit through Docker Compose with n8n 1.99 and today upgraded n8n to 1.103. I followed the upgrade procedure as described in the n8n documentation:

Navigate to the directory containing your docker compose file

cd </path/to/your/compose/file/directory>

Pull latest version

docker compose pull

Stop and remove older version

docker compose down

Start the container

docker compose up -d
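For reference, here are the steps above gathered into one script, as a minimal sketch only. `COMPOSE_DIR` and the helper names `list_volumes` and `upgrade` are hypothetical and do not come from the n8n documentation. The volume-name pattern assumes the volume names from my compose file, with Compose's default project-name prefix in front of them. `docker compose down` is called without `-v`, so the named volumes should be kept.

```shell
#!/bin/sh
# Sketch: run the upgrade steps and list the named volumes before and after.
set -eu

# Hypothetical variable: directory that contains the docker compose file.
COMPOSE_DIR="${COMPOSE_DIR:-.}"

# Print the names of Docker volumes that end in one of the storage names
# declared in the compose file (n8n, postgres, ollama, qdrant).
list_volumes() {
  docker volume ls --format '{{.Name}}' \
    | grep -E '(n8n|postgres|ollama|qdrant)_storage$' || true
}

# Pull, stop, and restart the stack, printing the volumes around each phase.
upgrade() {
  cd "$COMPOSE_DIR"
  echo "Volumes before upgrade:"
  list_volumes
  docker compose pull
  docker compose down        # no -v flag: named volumes are not removed
  docker compose up -d
  echo "Volumes after upgrade:"
  list_volumes
  docker compose ps
}

# Only touch the running stack when explicitly asked to.
if [ "${1:-}" = "run" ]; then
  upgrade
fi
```

Saved under a hypothetical name such as `upgrade.sh`, it would be started with `sh upgrade.sh run`. If the same four volumes show up before and after, the data volumes themselves were not removed by the upgrade.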

Below is the docker container status after restart

After, this ,

- i was asked to login again on n8n browser

- lost all my workflow

- quadrant collection is present but status is grey

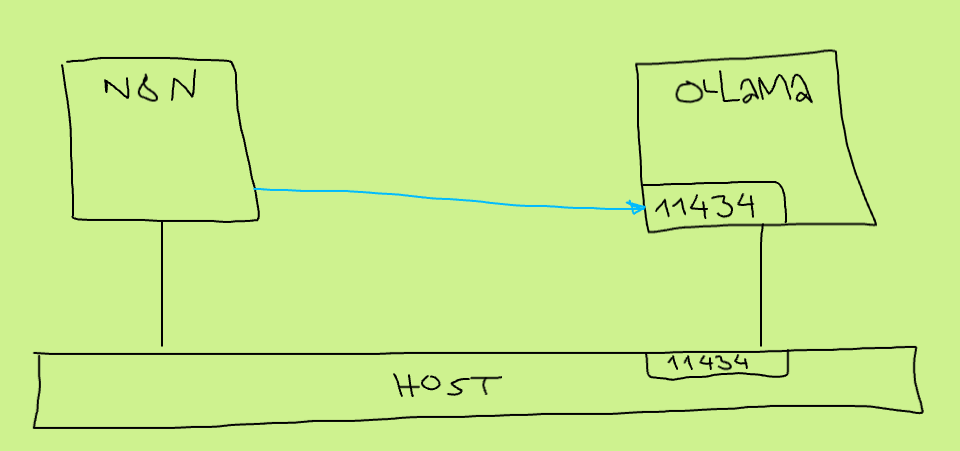

- i created new workflow but not able to connect using local ollama

- lost all my credentials

What is the error message (if any)?

Please share your workflow

Please check my Docker Compose YAML:

volumes:
  n8n_storage:
  postgres_storage:
  ollama_storage:
  qdrant_storage:

networks:
  demo:

x-n8n: &service-n8n
  image: n8nio/n8n:latest
  networks: ['demo']
  environment:
    - DB_TYPE=postgresdb
    - DB_POSTGRESDB_HOST=postgres
    - DB_POSTGRESDB_USER=${POSTGRES_USER}
    - DB_POSTGRESDB_PASSWORD=${POSTGRES_PASSWORD}
    - N8N_DIAGNOSTICS_ENABLED=false
    - N8N_PERSONALIZATION_ENABLED=false
    - N8N_ENCRYPTION_KEY
    - N8N_USER_MANAGEMENT_JWT_SECRET
    - CODE_ENABLE_STDOUT=true
    - N8N_LOG_LEVEL=debug
    - NODE_FUNCTION_ALLOW_BUILTIN_MODULES=path,fs,os
    - NODE_FUNCTION_ALLOW_EXTERNAL=*
    - OLLAMA_HOST=ollama:11434
  env_file:
    - .env

x-ollama: &service-ollama
  image: ollama/ollama:latest
  container_name: ollama
  networks: ['demo']
  restart: unless-stopped
  ports:
    - 11434:11434
  volumes:
    - ollama_storage:/root/.ollama
  deploy:
    resources:
      limits:
        cpus: '6'
        memory: 8G

x-init-ollama: &init-ollama
  image: ollama/ollama:latest
  networks: ['demo']
  container_name: ollama-pull-llama
  volumes:
    - ollama_storage:/root/.ollama
  entrypoint: /bin/sh
  environment:
    - OLLAMA_HOST=ollama:11434
  command:
    - "-c"
    - "sleep 3; ollama pull llama3.2"

services:
  postgres:
    image: postgres:16-alpine
    hostname: postgres
    networks: ['demo']
    restart: unless-stopped
    environment:
      - POSTGRES_USER
      - POSTGRES_PASSWORD
      - POSTGRES_DB
    volumes:
      - postgres_storage:/var/lib/postgresql/data
    healthcheck:
      test: ['CMD-SHELL', 'pg_isready -h localhost -U ${POSTGRES_USER} -d ${POSTGRES_DB}']
      interval: 5s
      timeout: 5s
      retries: 10

  n8n-import:
    <<: *service-n8n
    hostname: n8n-import
    container_name: n8n-import
    entrypoint: /bin/sh
    command:
      - "-c"
      - "n8n import:credentials --separate --input=/demo-data/credentials && n8n import:workflow --separate --input=/demo-data/workflows"
    volumes:
      - ./n8n/demo-data:/demo-data
    depends_on:
      postgres:
        condition: service_healthy

  n8n:
    <<: *service-n8n
    hostname: n8n
    container_name: n8n
    restart: unless-stopped
    ports:
      - 5678:5678
    environment:
      - CODE_ENABLE_STDOUT=true
      - N8N_LOG_LEVEL=debug
      - NODE_FUNCTION_ALLOW_BUILTIN_MODULES=path,fs,os
      - NODE_FUNCTION_ALLOW_EXTERNAL=*
    volumes:
      - n8n_storage:/home/node/.n8n
      - ./n8n/demo-data:/demo-data
      - ./shared:/data/shared
    depends_on:
      postgres:
        condition: service_healthy
      n8n-import:
        condition: service_completed_successfully

  qdrant:
    image: qdrant/qdrant
    hostname: qdrant
    container_name: qdrant
    networks: ['demo']
    restart: unless-stopped
    ports:
      - 6333:6333
    volumes:
      - qdrant_storage:/qdrant/storage

  ollama-cpu:
    profiles: ["cpu"]
    deploy:
      resources:
        limits:
          cpus: '6'
          memory: 8G
    <<: *service-ollama

  ollama-gpu:
    profiles: ["gpu-nvidia"]
    <<: *service-ollama
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: 1
              capabilities: [gpu]

  ollama-gpu-amd:
    profiles: ["gpu-amd"]
    <<: *service-ollama
    image: ollama/ollama:rocm
    devices:
      - "/dev/kfd"
      - "/dev/dri"

  ollama-pull-llama-cpu:
    profiles: ["cpu"]
    <<: *init-ollama
    depends_on:
      - ollama-cpu

  ollama-pull-llama-gpu:
    profiles: ["gpu-nvidia"]
    <<: *init-ollama
    depends_on:
      - ollama-gpu

  ollama-pull-llama-gpu-amd:
    profiles: [gpu-amd]
    <<: *init-ollama
    image: ollama/ollama:rocm
    depends_on:
      - ollama-gpu-amd

Please check the n8n workflow below:

{
  "nodes": [
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chatTrigger",
      "typeVersion": 1.1,
      "position": [
        0,
        0
      ],
      "id": "4eaed734-d139-4a78-9cd3-e98b17612ccc",
      "name": "When chat message received",
      "webhookId": "8e2a5903-0044-431e-bc54-6760427fde5a"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.chainRetrievalQa",
      "typeVersion": 1.6,
      "position": [
        208,
        0
      ],
      "id": "cea1c2dc-dbfd-44b4-8db3-cef01e9fc684",
      "name": "Question and Answer Chain"
    },
    {
      "parameters": {
        "options": {}
      },
      "type": "@n8n/n8n-nodes-langchain.lmChatOllama",
      "typeVersion": 1,
      "position": [
        96,
        208
      ],
      "id": "131dc353-4eec-4171-a7cd-95a36d18c9c9",
      "name": "Ollama Chat Model",
      "credentials": {
        "ollamaApi": {
          "id": "UBbYjYWntLXLNG3S",
          "name": "Ollama account"
        }
      }
    }
  ],
  "connections": {
    "When chat message received": {
      "main": [
        [
          {
            "node": "Question and Answer Chain",
            "type": "main",
            "index": 0
          }
        ]
      ]
    },
    "Ollama Chat Model": {
      "ai_languageModel": [
        [
          {
            "node": "Question and Answer Chain",
            "type": "ai_languageModel",
            "index": 0
          }
        ]
      ]
    }
  },
  "pinData": {},
  "meta": {
    "templateCredsSetupCompleted": true,
    "instanceId": "558d88703fb65b2d0e44613bc35916258b0f0bf983c5d4730c00c424b77ca36a"
  }
}


Share the output returned by the last node

Information on your n8n setup

- n8n version: 1.103.1 (upgraded today; earlier 1.99.x). I am using the self-hosted Docker kit for n8n via Docker Compose.
- Database (default: SQLite): Postgres
- n8n EXECUTIONS_PROCESS setting (default: own, main):
- Running n8n via (Docker, npm, n8n cloud, desktop app): Docker, self-hosted
- Operating system: macOS