I filed this as a bug, but they thought it might be config-related. I don’t think so, since tool calls were designed to give some uniformity in code functionality, and only certain models are even built with support for them. Please let me know where you think this should go.

Bug Description

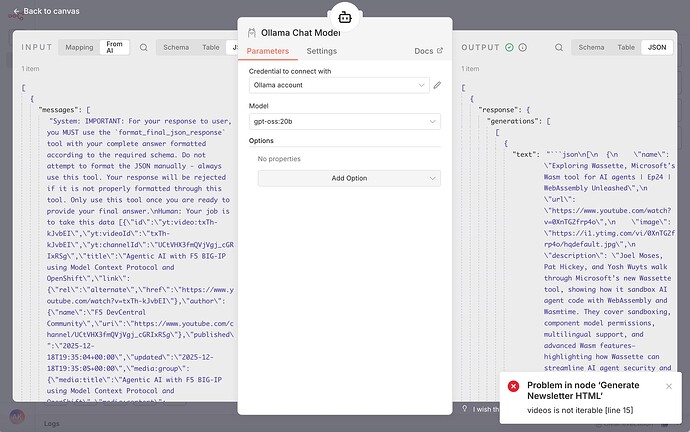

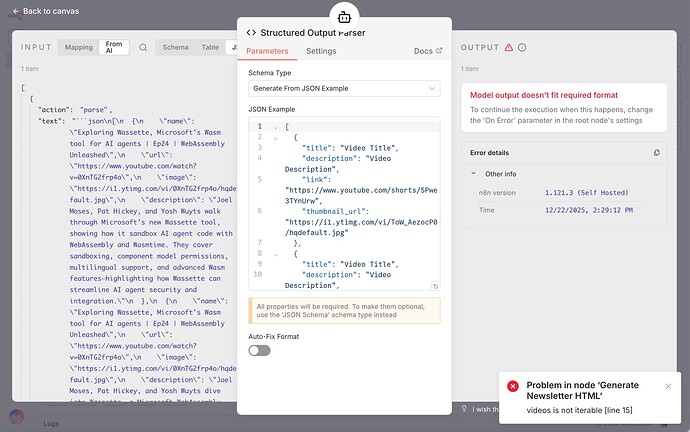

I was trying to build a simplified version of Saad Naveen’s RSS Newsletter flow, but I wanted to use Ollama instead of OpenAI. When I wire the flow exactly like the working OpenAI flow, the run always fails. The failure occurs at the structured output parser, but it appears to fail because the output from the tool call of the Ollama node is not close to what is expected.

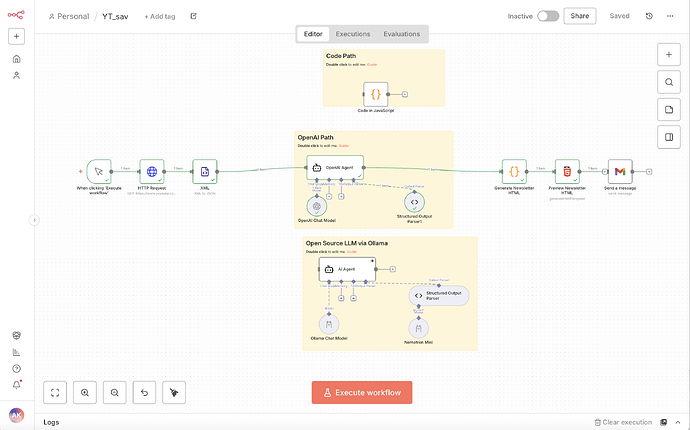

This was a test, so the flow I have here converts XML from a YouTube RSS feed to JSON and passes it to a specialized agent, which reformats the JSON so it is ready for building an HTML newsletter, which is mailed out via Gmail. Since I had trouble with Ollama, I replicated the step in JavaScript and then again with OpenAI, exactly as in the initial example.
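For reference, the JavaScript replication of the agent step can be sketched roughly as below. This is only a sketch: `toNewsletterItems` is a hypothetical helper name, the field names (`media:group`, `media:description`, `media:thumbnail`, `link.href`) are assumptions about how the XML node converts a YouTube feed entry, and the truncation is a crude stand-in for the model's summary.

```javascript
// Hypothetical helper: map converted YouTube feed entries to the newsletter format.
// The field names below are assumptions about the XML node's JSON output;
// adjust them to match what your node actually produces.
function toNewsletterItems(entries, maxDescriptionLength = 200) {
  // A feed with a single entry may be converted to an object rather than an array.
  const list = Array.isArray(entries) ? entries : [entries];

  return list.map((entry) => {
    const media = entry['media:group'] || {};
    const thumbnail = media['media:thumbnail'] || {};
    const description = String(media['media:description'] || '');

    return {
      title: String(entry.title || ''),
      // Naive truncation instead of a real summary.
      description:
        description.length > maxDescriptionLength
          ? description.slice(0, maxDescriptionLength).trimEnd() + '...'
          : description,
      link: (entry.link && entry.link.href) || '',
      thumbnail_url: thumbnail.url || '',
    };
  });
}
```

In a Code node this would presumably be called as `toNewsletterItems($input.first().json.feed.entry)` and returned under an `output` key, so that downstream nodes see the same shape the agent is supposed to produce.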

Here is the flow:

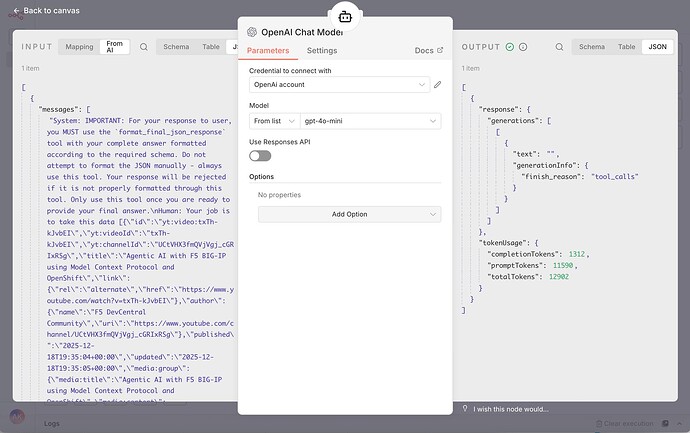

OpenAI flow result:

Ollama flow result:

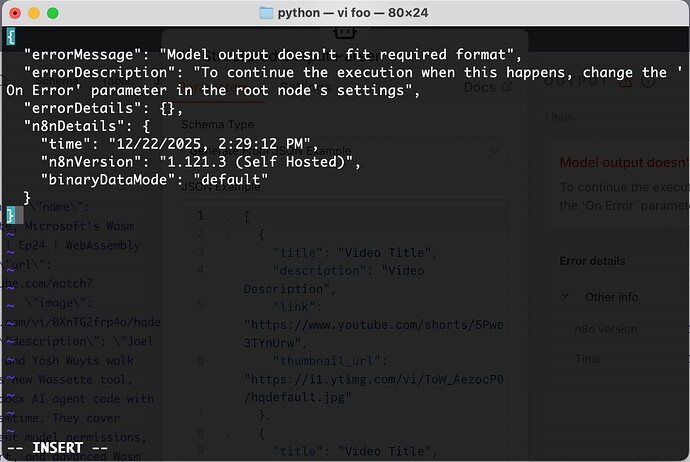

It appears to me that the input to the model nodes is identical, but the way each node handles the JSON is very different. As a result, the data sent back to the agent is lost in the structured output parser.

Here are the error details:

To reproduce:

- Set up an HTTP Request node against any YouTube RSS feed.

- Pass the response to an XML node and convert it to JSON.

- Add an agent and use the following as the source for the prompt:

Your job is to take this data {{ $json.feed.entry.toJsonString() }} and format it in the required output format. Also, summarize the video description at the same time as it’ll be used in a newsletter.

- Require a specific output format and attach a structured output parser with the following schema example:

    [
      {
        "title": "Video Title",
        "description": "Video Description",
        "link": "https://www.youtube.com/shorts/5Pwe3TYnUrw",
        "thumbnail_url": "https://i1.ytimg.com/vi/ToW_AezocP0/hqdefault.jpg"
      },
      {
        "title": "Video Title",
        "description": "Video Description",
        "link": "https://www.youtube.com/shorts/5Pwe3TYnUrw",
        "thumbnail_url": "https://i1.ytimg.com/vi/ToW_AezocP0/hqdefault.jpg"
      }
    ]

- Attach an Ollama model node to the agent, use Ollama credentials, and select gpt-oss-20b (it was the closest I could find to the gpt-4o-mini used in the working test).

- Execute.
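To compare what each model node hands back against the schema example from the steps above, a small check like the following could be dropped into a Code node or run locally. It is a minimal sketch: `findSchemaProblems` and `REQUIRED_KEYS` are hypothetical names, and the rule that every field must be a string is an assumption read off the schema example, not the parser's actual validation logic.

```javascript
// Keys taken from the schema example in the reproduction steps.
const REQUIRED_KEYS = ['title', 'description', 'link', 'thumbnail_url'];

// Returns a list of human-readable problems. An empty list means the value
// has the same shape as the schema example (an array of flat string records).
function findSchemaProblems(value) {
  if (!Array.isArray(value)) {
    return [`expected an array, got ${value === null ? 'null' : typeof value}`];
  }

  const problems = [];
  value.forEach((item, index) => {
    if (item === null || typeof item !== 'object' || Array.isArray(item)) {
      problems.push(`item ${index}: expected an object`);
      return;
    }
    for (const key of REQUIRED_KEYS) {
      if (typeof item[key] !== 'string') {
        problems.push(`item ${index}: "${key}" is missing or not a string`);
      }
    }
  });
  return problems;
}
```

Running it on the raw output of both the OpenAI and the Ollama node should show quickly whether the difference is in the top-level type, in the item shape, or in individual fields.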

Expected behavior

Expected behavior is that it formats the JSON appropriately so it can be processed by the following code in an HTML node:

    const videos = $input.first().json.output;

    let html = `
    DevCentral YouTube Channel NewsletterLatest Videos from DevCentral
    `;

    for (const video of videos) {
      // Fall back to an empty string when a video has no thumbnail
      const thumbnail = video.thumbnail_url || '';

      // Append one card per video: thumbnail on the left, title and description on the right
      html += `
      <div style="border-bottom: 1px solid #e0e0e0; padding-bottom: 20px; margin-bottom: 20px; display: flex; align-items: flex-start; gap: 32px;">
        <div aria-label="Video thumbnail" style="flex-shrink: 0; width: 120px; height: 67px; border-radius: 8px; overflow: hidden; box-shadow: 0 3px 8px rgba(0,0,0,0.15); margin-right: 16px;">
          <a href="${video.link}" target="_blank" rel="noopener noreferrer">
            <img src="${thumbnail}" alt="Thumbnail for ${video.title}" style="width: 100%; height: 100%; object-fit: cover; display: block;" />
          </a>
        </div>
        <div style="flex-grow: 1;">
          <p style="font-size: 18px; font-weight: 600; margin: 0 0 8px 0; color: #222222;">
            <a href="${video.link}" target="_blank" rel="noopener noreferrer" style="color: #ff6d5a; text-decoration: none;" tabindex="0">${video.title}</a>
          </p>
          <p style="font-size: 14px; color: #555555; margin: 0; line-height: 1.5;">${video.description}</p>
        </div>
      </div>`;
    }

    html += `
    `;

    // Return the full HTML for use in the next node
    return [{ json: { newsletterHtml: html } }];
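The loop above only works if `output` is already an array of video objects. As a diagnostic aid, a guard like the one below could be placed in front of it. This is a sketch under assumptions: `normalizeVideos` is a hypothetical helper, and the shapes it handles (a JSON string, or an object wrapping the array under an `output` key) are guesses at plausible variations, not a description of what the Ollama node actually returns.

```javascript
// Hypothetical guard: coerce a few plausible output shapes into an array,
// and fail with a descriptive error for anything else.
function normalizeVideos(output) {
  let value = output;

  // Assumption: the model may return the JSON serialized as a string.
  if (typeof value === 'string') {
    try {
      value = JSON.parse(value);
    } catch {
      throw new Error('output is a string but not valid JSON');
    }
  }

  // Assumption: the array may be wrapped one level deeper under "output".
  if (value !== null && typeof value === 'object' && !Array.isArray(value) && 'output' in value) {
    value = value.output;
  }

  if (!Array.isArray(value)) {
    throw new Error(`expected an array of videos, got ${value === null ? 'null' : typeof value}`);
  }
  return value;
}
```

With this in place, the first line of the HTML node could become `const videos = normalizeVideos($input.first().json.output);`, so a mismatched shape surfaces as a clear error message instead of a failed loop.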