Hi guys,

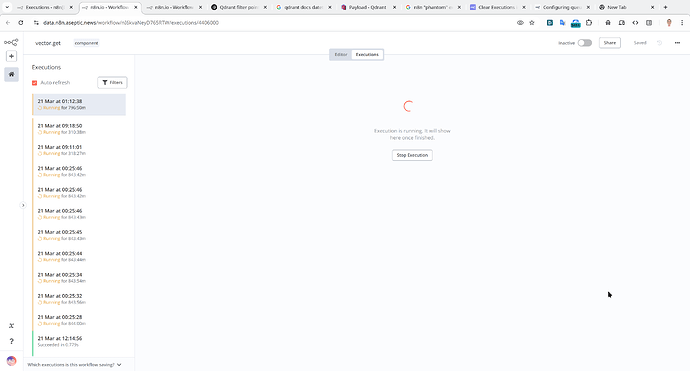

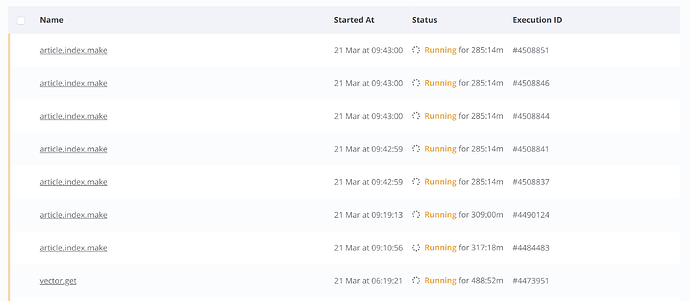

I have a lot of phantom executions, as you can see in the screenshots.

The only errors in the logs are:

- On the webhook node: `{"log":"Error: timeout exceeded when trying to connect\n","stream":"stdout","time":"2025-03-21T12:51:45.315171232Z"}`
- On the worker node: `{"log":"Worker errored while running execution 4637590 (job 229086)\n","stream":"stdout","time":"2025-03-21T12:46:43.901422953Z"}`
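The worker error names the affected execution. Below is a minimal sketch that pulls the execution and job IDs out of such a line. The `parse_worker_error` helper is hypothetical, and it assumes the message keeps exactly the wording shown in the log. You can then look the execution up with something like `SELECT id, status FROM execution_entity WHERE id = 4637590;`.

```shell
#!/usr/bin/env bash
# Sketch: extract the execution ID and job ID from a worker error line.
# Assumes the exact message wording from the log above; other n8n versions may differ.
parse_worker_error() {
  # Prints "<executionId> <jobId>", or nothing if the line does not match.
  sed -nE 's/.*Worker errored while running execution ([0-9]+) \(job ([0-9]+)\).*/\1 \2/p' <<<"$1"
}

line='{"log":"Worker errored while running execution 4637590 (job 229086)\n","stream":"stdout","time":"2025-03-21T12:46:43.901422953Z"}'
read -r execution_id job_id < <(parse_worker_error "$line")
echo "execution=${execution_id} job=${job_id}"
```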

n8n runs in queue mode with Postgres and Redis, on Google Compute Engine managed instance groups. The worker and webhook groups autoscale; the main (master) node does not.
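Suppose "phantom" here means an execution that Postgres still reports as running although no worker is processing it. The sketch below spots those by comparing two hypothetical input files, each with one execution ID per line:

- `running.txt`, which you could export with `psql -At -c "SELECT id FROM execution_entity WHERE status = 'running'"`.
- `active.txt`, the executions the workers are really running, collected from the worker logs or the Bull queue in Redis. How to read the queue depends on the n8n version, so that step is not shown.

```shell
#!/usr/bin/env bash
# Sketch: list execution IDs that Postgres marks as running but that no worker
# is actually processing. Both inputs hold one execution ID per line.
find_phantoms() {
  local running_file="$1" active_file="$2"
  # comm needs both inputs sorted the same way; -23 keeps lines found only in the first.
  comm -23 <(sort -u "$running_file") <(sort -u "$active_file")
}

# Example with made-up IDs.
tmp="$(mktemp -d)"
printf '%s\n' 4637590 4637591 4637600 > "$tmp/running.txt"
printf '%s\n' 4637591 > "$tmp/active.txt"
find_phantoms "$tmp/running.txt" "$tmp/active.txt"   # 4637590 and 4637600
rm -r "$tmp"
```

`comm` only works on inputs sorted the same way, which is why each file goes through `sort -u` first.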

Hey @yt8aseptic

Please try the following:

- Stop the executions manually and see if they stop.
- Restart your instance and see if the executions go away.

Dear @solomon

The executions can be stopped, but they don't go away after a reboot.

I cleaned them up with:

```sql
delete from execution_metadata where "executionId" in ( SELECT id FROM execution_entity WHERE status = 'running' );
delete from execution_data where "executionId" in ( SELECT id FROM execution_entity WHERE status = 'running' );
delete from execution_annotations where "executionId" in ( SELECT id FROM execution_entity WHERE status = 'running' );
delete FROM execution_entity WHERE status = 'running';

delete from execution_metadata where "executionId" in ( SELECT id FROM execution_entity where finished = false and status = 'new' );
delete from execution_data where "executionId" in ( SELECT id FROM execution_entity where finished = false and status = 'new' );
delete from execution_annotations where "executionId" in ( SELECT id FROM execution_entity where finished = false and status = 'new' );
delete FROM execution_entity where finished = false and status = 'new';
```
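The cleanup above repeats the same subquery for every child table. Below is a sketch of a hypothetical `cleanup_sql` helper that emits the same statements for any predicate on `execution_entity`. It deletes from the child tables first and wraps everything in one transaction, so an error part-way through does not leave half-deleted executions behind. It only prints SQL; piping it to `psql` is left as a comment.

```shell
#!/usr/bin/env bash
# Sketch: generate the cleanup statements for one predicate on execution_entity.
# cleanup_sql is a hypothetical helper; review its output before running it.
cleanup_sql() {
  local predicate="$1" table
  echo "BEGIN;"
  # Child tables first, then the executions themselves (same order as above).
  for table in execution_metadata execution_data execution_annotations; do
    echo "DELETE FROM ${table} WHERE \"executionId\" IN (SELECT id FROM execution_entity WHERE ${predicate});"
  done
  echo "DELETE FROM execution_entity WHERE ${predicate};"
  echo "COMMIT;"
}

# Both cases from above combined into one predicate:
cleanup_sql "status = 'running' OR (finished = false AND status = 'new')"

# To apply (DB_HOST is a placeholder). ON_ERROR_STOP makes psql exit on the first
# error, so the open transaction is rolled back instead of committed:
#   cleanup_sql "..." | psql -v ON_ERROR_STOP=1 -h "$DB_HOST" -U n8n n8n
```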

Then I increased the resources on the cluster. Now there are far fewer phantom executions, but they still appear.

Dockerfile:

```dockerfile
FROM python:3.13-slim-bookworm AS base

EXPOSE 8008

SHELL ["/bin/bash", "-c"]

RUN \
    apt-get update && \
    apt-get install -y npm nodejs apt-transport-https ca-certificates gnupg curl pipx && \
    apt-get autoremove -y && apt-get clean -y && rm -rf /var/lib/apt/lists/*

RUN \
    npm install -g n8n && \
    pip3 install --upgrade --no-cache-dir pip && \
    pip3 install --upgrade --no-cache-dir \
        google-cloud-firestore google-cloud-storage google-cloud-pubsub google-cloud-tasks google-auth \
        arrow httpx pycountry langdetect lxml orjson regex qdrant-client

ARG N8N_ROOT=/n8n
ENV N8N_ROOT=${N8N_ROOT}
ENV N8N_PORT=8008
ENV PATH="${N8N_ROOT}/scripts:${PATH}"
ENV PYTHONPATH="${N8N_ROOT}/scripts:${PYTHONPATH}"
ENV NODE_ENV=production
ENV GENERIC_TIMEZONE=Europe/Kyiv
ENV N8N_AVAILABLE_BINARY_DATA_MODES=filesystem
ENV N8N_DEFAULT_BINARY_DATA_MODE=filesystem
ARG N8N_BINARY_DATA_STORAGE_PATH=${N8N_ROOT}/uploads
ENV N8N_BINARY_DATA_STORAGE_PATH=${N8N_BINARY_DATA_STORAGE_PATH}
ENV N8N_TEMPLATES_ENABLED=false
ENV N8N_PUBLIC_API_DISABLED=true
ENV N8N_PUBLIC_API_SWAGGERUI_DISABLED=true
ENV N8N_HIRING_BANNER_ENABLED=false
ENV N8N_BASIC_AUTH_ACTIVE=false
ENV N8N_AUTH_EXCLUDE_ENDPOINTS=*
ENV N8N_USER_MANAGEMENT_DISABLED=true
ENV N8N_DISABLE_UI_AUTH=true
ENV N8N_ENFORCE_SETTINGS_FILE_PERMISSIONS=false
ENV N8N_PERSONALIZATION_ENABLED=false
ENV N8N_VERSION_NOTIFICATIONS_ENABLED=false
ENV EXECUTIONS_MODE=queue
ENV EXECUTIONS_DATA_SAVE_ON_SUCCESS=none
ENV EXECUTIONS_DATA_PRUNE=true
ENV EXECUTIONS_DATA_MAX_AGE=168
ENV EXECUTIONS_DATA_PRUNE_MAX_COUNT=25000
ENV N8N_DIAGNOSTICS_ENABLED=false
ENV N8N_LOG_LEVEL=warn
ENV DB_TYPE=postgresdb

COPY --chmod=555 pod/src/nodes /opt/nodes/
COPY --chmod=444 pod/src/workflows ${N8N_ROOT}/workflows/
COPY --chmod=555 pod/src/scripts /opt/scripts/
COPY --chmod=555 pod/src/entrypoint.sh /opt/entrypoint.sh

# u=rwX,g=rwX,o=rX gives non-executable files rw-rw-r-- but keeps the search (x)
# bit on directories; a plain 664 would make the directories untraversable.
RUN \
    useradd -s /bin/bash -m -u 10001 aseptic && \
    mkdir -p ${N8N_ROOT}/backups/workflows && mkdir -p ${N8N_ROOT}/backups/credentials && \
    chown aseptic:root -R ${N8N_ROOT}/backups && chmod -R u=rwX,g=rwX,o=rX ${N8N_ROOT}/backups && \
    mkdir -p ${N8N_BINARY_DATA_STORAGE_PATH} && chown aseptic:root ${N8N_BINARY_DATA_STORAGE_PATH} && chmod u=rwX,g=rwX,o=rX ${N8N_BINARY_DATA_STORAGE_PATH} && \
    mkdir -p /home/aseptic/.n8n && chown aseptic:root -R /home/aseptic && chmod -R u=rwX,g=rwX,o=rX /home/aseptic/.n8n

WORKDIR ${N8N_ROOT}

ENTRYPOINT [ "/opt/entrypoint.sh" ]

FROM base AS gce

# RUN \
# curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | gpg --dearmor -o /usr/share/keyrings/cloud.google.gpg &&\
# echo "deb [signed-by=/usr/share/keyrings/cloud.google.gpg] https://packages.cloud.google.com/apt cloud-sdk main" | tee -a /etc/apt/sources.list.d/google-cloud-sdk.list &&\
# \
# echo "deb [signed-by=/usr/share/keyrings/cloud.google.asc] https://packages.cloud.google.com/apt gcsfuse-bookworm main" | tee /etc/apt/sources.list.d/gcsfuse.list &&\
# curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | tee /usr/share/keyrings/cloud.google.asc &&\
# \
# apt update && apt upgrade -y && apt install google-cloud-cli gcsfuse tini --no-install-recommends -y &&\
# curl -sS https://dl.google.com/cloudagents/add-google-cloud-ops-agent-repo.sh | bash -s -- --also-install &&\
# apt autoremove -y && apt clean -y && rm -rf /var/lib/apt/lists/*

WORKDIR ${N8N_ROOT}

#ENTRYPOINT [ "/usr/bin/tini", "--", "/opt/entrypoint.sh"]
```

Entrypoint (`entrypoint.sh`):

```bash
#!/bin/bash
set -eo pipefail

# WORKFLOW_DIR="${N8N_ROOT}/workflows"
# BACKUPS_DIR="${N8N_ROOT}/backups"

# if [[ -n "${N8N_BUCKET}" ]]; then
#     gcsfuse -o allow_other --uid 10001 --gid 10001 --implicit-dirs --only-dir BACKUPS ${N8N_BUCKET} $BACKUPS_DIR
#     echo "The BACKUPS from GCS ${N8N_BUCKET} was mounted in $BACKUPS_DIR"
#     gcsfuse -o allow_other --uid 10001 --gid 10001 --implicit-dirs --only-dir UPLOADS ${N8N_BUCKET} ${N8N_ROOT}/uploads
#     echo "The UPLOADS from GCS ${N8N_BUCKET} was mounted in ${N8N_ROOT}/uploads"
#     gcsfuse -o allow_other --uid 10001 --gid 10001 --implicit-dirs --only-dir PROFILE ${N8N_BUCKET} /home/aseptic/.n8n
#     echo "The PROFILE from GCS ${N8N_BUCKET} was mounted in /home/aseptic/.n8n"
# else
#     echo "N8N_BUCKET is not set. Skipping GCS mounting."
# fi

# exec hands this process over to su (which starts n8n as user aseptic),
# so nothing placed after this block would ever run.
if [[ -n "$1" ]]; then
    echo "RUN $1 NODE"
    exec su aseptic -c "n8n $1"
else
    echo "RUN MAIN NODE"
    exec su aseptic -c "n8n"
fi
```

Server run script:

```bash
#!/bin/bash
set -eo pipefail

#INSTANCE="worker"
#INSTANCE="webhook"

N8N_BUCKET=<>
N8N_DATA=/var/aseptic

gcsfuse -o allow_other --uid 10001 --gid 10001 --limit-ops-per-sec 0 --implicit-dirs ${N8N_BUCKET} ${N8N_DATA}
echo "The GCS ${N8N_BUCKET} was mounted in ${N8N_DATA}"

# Remove all existing containers; "|| true" covers the case where there are none.
docker rm -f $( docker ps -aq ) || true

if [ "$INSTANCE" == "worker" ]; then
    docker run -d --restart on-failure --name n8n --publish 8008:8008 \
        \
        -e N8N_ENCRYPTION_KEY="$( gcloud secrets versions access latest --secret=N8N_ENCRYPTION_KEY )" \
        \
        -e QUEUE_BULL_REDIS_HOST=<> \
        -e QUEUE_BULL_REDIS_DB=0 \
        -e QUEUE_HEALTH_CHECK_ACTIVE=true \
        -e QUEUE_HEALTH_CHECK_PORT=8008 \
        \
        -e DB_POSTGRESDB_DATABASE=n8n \
        -e DB_POSTGRESDB_HOST=<> \
        -e DB_POSTGRESDB_USER=n8n \
        -e DB_POSTGRESDB_PASSWORD="$( gcloud secrets versions access latest --secret=DB_POSTGRESDB_PASSWORD )" \
        \
        -e WEBHOOK_URL="<>" \
        -e N8N_ENDPOINT_WEBHOOK="v1" \
        -e N8N_ENDPOINT_WEBHOOK_TEST="v1/test" \
        -e N8N_ENDPOINT_WEBHOOK_WAIT="v1/wait" \
        \
        -e N8N_DISABLE_UI=true \
        \
        -e N8N_RUNNERS_ENABLED=true \
        -e N8N_RUNNERS_MODE=internal \
        \
        -v /var/aseptic/WORKFLOWS:/n8n/workflows \
        -v /var/aseptic/SCRIPTS:/n8n/scripts \
        -v /var/aseptic/UPLOADS:/n8n/uploads \
        -v /var/aseptic/BACKUPS:/n8n/backups \
        -v /var/aseptic/PROFILE:/home/aseptic/.n8n \
        \
        us-docker.pkg.dev/asepticnews/asepticnews-data-n8n/main:latest \
        worker

elif [ "$INSTANCE" == "webhook" ]; then
    docker run -d --restart on-failure --name n8n --publish 8008:8008 \
        \
        -e N8N_ENCRYPTION_KEY="$( gcloud secrets versions access latest --secret=N8N_ENCRYPTION_KEY )" \
        \
        -e QUEUE_BULL_REDIS_HOST=<> \
        -e QUEUE_BULL_REDIS_DB=0 \
        \
        -e DB_POSTGRESDB_DATABASE=n8n \
        -e DB_POSTGRESDB_HOST=<> \
        -e DB_POSTGRESDB_USER=n8n \
        -e DB_POSTGRESDB_PASSWORD="$( gcloud secrets versions access latest --secret=DB_POSTGRESDB_PASSWORD )" \
        \
        -e WEBHOOK_URL="<>" \
        -e N8N_ENDPOINT_WEBHOOK="v1" \
        -e N8N_ENDPOINT_WEBHOOK_TEST="v1/test" \
        -e N8N_ENDPOINT_WEBHOOK_WAIT="v1/wait" \
        \
        -e N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true \
        -e OFFLOAD_MANUAL_EXECUTIONS_TO_WORKERS=true \
        \
        -e N8N_DISABLE_UI=true \
        \
        -v /var/aseptic/WORKFLOWS:/n8n/workflows \
        -v /var/aseptic/SCRIPTS:/n8n/scripts \
        -v /var/aseptic/UPLOADS:/n8n/uploads \
        -v /var/aseptic/BACKUPS:/n8n/backups \
        -v /var/aseptic/PROFILE:/home/aseptic/.n8n \
        \
        us-docker.pkg.dev/asepticnews/asepticnews-data-n8n/main:latest \
        webhook

else
    docker run -d --restart on-failure --name n8n --publish 8008:8008 \
        \
        -e N8N_ENCRYPTION_KEY="$( gcloud secrets versions access latest --secret=N8N_ENCRYPTION_KEY )" \
        \
        -e QUEUE_BULL_REDIS_HOST=<> \
        -e QUEUE_BULL_REDIS_DB=0 \
        \
        -e DB_POSTGRESDB_DATABASE=n8n \
        -e DB_POSTGRESDB_HOST=<> \
        -e DB_POSTGRESDB_USER=n8n \
        -e DB_POSTGRESDB_PASSWORD="$( gcloud secrets versions access latest --secret=DB_POSTGRESDB_PASSWORD )" \
        \
        -e WEBHOOK_URL="<>" \
        -e N8N_ENDPOINT_WEBHOOK="v1" \
        -e N8N_ENDPOINT_WEBHOOK_TEST="v1/test" \
        -e N8N_ENDPOINT_WEBHOOK_WAIT="v1/wait" \
        \
        -e N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true \
        -e OFFLOAD_MANUAL_EXECUTIONS_TO_WORKERS=true \
        \
        -e N8N_RUNNERS_ENABLED=true \
        -e N8N_RUNNERS_MODE=internal \
        \
        -v /var/aseptic/WORKFLOWS:/n8n/workflows \
        -v /var/aseptic/SCRIPTS:/n8n/scripts \
        -v /var/aseptic/UPLOADS:/n8n/uploads \
        -v /var/aseptic/BACKUPS:/n8n/backups \
        -v /var/aseptic/PROFILE:/home/aseptic/.n8n \
        \
        us-docker.pkg.dev/asepticnews/asepticnews-data-n8n/main:latest
fi
```
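The three `docker run` commands in the run script differ only in a few role-specific variables and in the argument passed to the entrypoint. The sketch below keeps those differences in one place using two hypothetical helpers, `role_env` and `role_cmd`. The shared `-e`/`-v` flags would be added to `common_args`. It is a dry run: it only prints the resulting command.

```shell
#!/usr/bin/env bash
# Sketch: role-specific docker flags taken from the run script above.
# role_env and role_cmd are hypothetical helpers, not part of n8n or Docker.
set -eo pipefail

role_env() {
  case "$1" in
    worker)
      printf '%s\n' \
        -e N8N_DISABLE_UI=true \
        -e QUEUE_HEALTH_CHECK_ACTIVE=true -e QUEUE_HEALTH_CHECK_PORT=8008 \
        -e N8N_RUNNERS_ENABLED=true -e N8N_RUNNERS_MODE=internal ;;
    webhook)
      printf '%s\n' \
        -e N8N_DISABLE_UI=true \
        -e N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true \
        -e OFFLOAD_MANUAL_EXECUTIONS_TO_WORKERS=true ;;
    *) # main node (INSTANCE unset in the original script)
      printf '%s\n' \
        -e N8N_DISABLE_PRODUCTION_MAIN_PROCESS=true \
        -e OFFLOAD_MANUAL_EXECUTIONS_TO_WORKERS=true \
        -e N8N_RUNNERS_ENABLED=true -e N8N_RUNNERS_MODE=internal ;;
  esac
}

# The argument passed to the entrypoint: "worker", "webhook", or nothing for main.
role_cmd() {
  case "$1" in
    worker | webhook) echo "$1" ;;
    *) echo "" ;;
  esac
}

INSTANCE="${INSTANCE:-main}"
mapfile -t role_args < <(role_env "$INSTANCE")
# Shared flags; the common -e (key, Redis, Postgres, webhook URL) and -v options go here too.
common_args=(-d --restart on-failure --name n8n --publish 8008:8008)
cmd="$(role_cmd "$INSTANCE")"

# Dry run: remove "echo" to actually start the container.
echo docker run "${common_args[@]}" "${role_args[@]}" \
  us-docker.pkg.dev/asepticnews/asepticnews-data-n8n/main:latest ${cmd:+"$cmd"}
```

With the role-specific flags in one function, some differences become easy to spot. For example, only the worker sets `QUEUE_HEALTH_CHECK_ACTIVE`, and the webhook node does not enable task runners.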

I didn't use `--workers` because each worker VM is small (1 CPU / 2 GB); instead, the worker and webhook nodes are scaled horizontally by Google Cloud.

system

June 23, 2025, 9:32am


This topic was automatically closed 90 days after the last reply. New replies are no longer allowed.