OpenAI just released several cool features:

- New models (GPT-4 Turbo with a 128k context window, DALL·E 3, GPT-4 with vision)

- Built-in Assistants API

Would be cool to see some OpenAI node updates and maybe a new one for assistants.

+1 from me

+1.

And it would be great to see it in the new LangChain flows, especially since LangChain itself already supports it: OpenAI Assistant | 🦜️🔗 Langchain

+1 from me

Yep. And the current gpt-4-vision-preview model should have an image input type:

    curl https://api.openai.com/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "gpt-4-vision-preview",
        "messages": [
          {
            "role": "user",
            "content": [
              {
                "type": "text",
                "text": "What’s in this image?"
              },
              {
                "type": "image_url",
                "image_url": {
                  "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"
                }
              }
            ]
          }
        ],
        "max_tokens": 300
      }'

Quick update on this one…

GPT-4 128k Window - Can partially be used already, but I need to remove the limit on the token field to fully support this

Dalle-3 - Should be supported in the next release

GPT-vision - This will come at some point soon

Assistants API - This is being looked into as part of the LangChain updates

While we would of course love to add everything, we still have to prioritise what gets added, but we will get there.

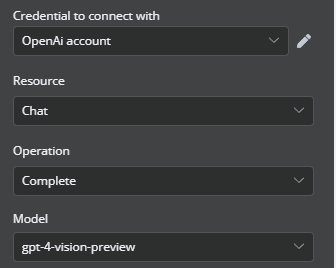

Thanks. It looks like gpt-4-vision-preview is available in n8n,

so I guess the missing part is to update the OpenAI node so that it generates { "type": "image_url" … } in the HTTP request?

Because it doesn’t seem to work when the prompt contains the URL, for example prompt = "Describe this image : https://…"
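
To make the difference concrete, here is a minimal sketch of the request body the node would need to produce, assuming the same Chat Completions shape as the curl example earlier in the thread. `build_vision_payload` is a hypothetical helper for illustration only; it is not part of n8n or the OpenAI SDK.

```python
import json


def build_vision_payload(prompt, image_url, model="gpt-4-vision-preview", max_tokens=300):
    """Build a Chat Completions body that sends the image as its own content part.

    Hypothetical helper: the field names follow the curl example in this thread.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # The image goes in a separate "image_url" part instead of
                # being pasted into the prompt text.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    # example.com is a placeholder URL, not a real image.
    body = build_vision_payload("Describe this image", "https://example.com/cat.jpg")
    print(json.dumps(body, indent=2))
```

The resulting JSON could be pasted into any HTTP client as the request body until the node generates it natively.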

Hey @tomtom,

Vision is there because we load the models from OpenAI, so they don’t need to be added manually for completions, but it needs a bit more work to support it properly.

Thanks @Jon

I actually managed to run gpt-4-vision-preview once successfully in my workflow by creating 2 distinct user messages. The first one is my prompt, the second one contained only a URL.

The JSON response contained a short description that matched the image.

I’m gonna try to generalize this and use variables instead of a static URL when my internet connection is in better shape.

Hey @tomtom,

That would make sense, although currently we do not officially support it as there are some changes needed to get it working properly.

You can use the HTTP module for now:

Merci Tiago. It works.

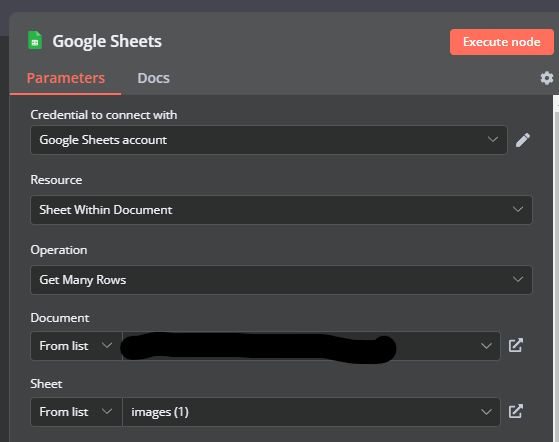

I’m new to n8n, so when I ran this node over 600+ image URLs from a Sheets file, it blew through my GPT-4 vision quota. My mistake was thinking that the node’s “Execute Once” setting would only execute on 1 URL for testing.

How do you test drive your nodes before running them over lots of data? In my example, I’d like to test on just 1 or a couple of URLs from my Sheets file (I already have a node that reads data from Sheets).

You can try the ItemLists node and its Limit option to cut the number of records in the workflow.

I just set the source module (Sheets, Gmail, etc.) to retrieve only 1 item max while testing. The max tokens parameter on the OpenAI call doesn’t seem to be respected, so you have to be careful. When using text GPT I always manually add a .substring to avoid surprises.
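
The two safeguards suggested above (cap the number of items, cap the prompt length) can be sketched as follows. This only illustrates the logic; inside n8n you would use the node options mentioned above or an expression, and `limit_items` and `truncate_text` are hypothetical names.

```python
from itertools import islice


def limit_items(items, max_items=1):
    """Keep only the first max_items records, e.g. while test-driving a workflow."""
    if max_items < 0:
        raise ValueError("max_items must be non-negative")
    return list(islice(items, max_items))


def truncate_text(text, max_chars=2000):
    """Hard-cap the prompt length, similar to .substring(0, max_chars)."""
    return text[:max_chars]


if __name__ == "__main__":
    # Stand-in for 600 rows read from a sheet; the URLs are placeholders.
    rows = [{"url": f"https://example.com/{i}.jpg"} for i in range(600)]
    print(limit_items(rows, 2))
    print(len(truncate_text("x" * 5000, 100)))
```

Capping the input before it reaches the OpenAI call keeps a test run cheap regardless of how the output token limit behaves.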

GPT-4 vision has an image size limit of 2000px x 768px, so I resize the image to that size beforehand. You can resize it further to limit tokens; in my case I have a lot of text, so I need the max size.

For long running conversations, we suggest passing images via URLs instead of base64. The latency of the model can also be improved by downsizing your images ahead of time to be less than the maximum size they are expected to be. For low res mode, we expect a 512px x 512px image. For high res mode, the short side of the image should be less than 768px and the long side should be less than 2,000px.

src: https://platform.openai.com/docs/guides/vision
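
As a rough sketch of the resize step, the function below computes target dimensions that fit the high res guidance quoted above while keeping the aspect ratio. It treats 768 and 2000 as inclusive upper bounds, which is my assumption, and `fit_for_high_res` is a hypothetical helper; the actual resizing would be done by whatever image tool you use.

```python
from fractions import Fraction


def fit_for_high_res(width, height, short_max=768, long_max=2000):
    """Return (width, height) scaled down to fit the short/long side limits.

    Never upscales. Uses exact fractions so rounding cannot push a side
    over its limit.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    short_side, long_side = min(width, height), max(width, height)
    scale = min(Fraction(1), Fraction(short_max, short_side), Fraction(long_max, long_side))
    # int() floors positive values, so the result stays within the limits.
    return max(1, int(width * scale)), max(1, int(height * scale))


if __name__ == "__main__":
    print(fit_for_high_res(4000, 3000))  # landscape photo
    print(fit_for_high_res(500, 400))    # already small enough
```

Passing smaller limits (for example `short_max=512, long_max=512`) gives a more aggressive downscale when fewer tokens matter more than detail.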

Support for an image as input got added with this PR from @oleg. It is in the latest ai-beta image.

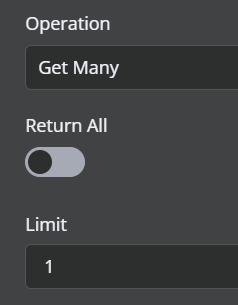

Thanks Tiago. Weirdly, I don’t see the “Return all” switch and the “Limit” field you show in your screenshot in my n8n Google Sheets node. It looks like I’ll have to use the ItemLists node suggested by Ed_P unless there’s something I didn’t understand.

If anyone, like me, cannot wait for Assistants API support, here is a link to a JSON file with a Telegram flow.

Here’s a little guide on how to create your Assistants API chatbot in Telegram in 5 minutes.

1.1 Describe the role and attach useful files here

1.2 Test until the assistant’s answers are good enough

1.3 Note the Assistant ID; it is shown here

2.1 Import the workflow from the file by link

2.2 Choose the correct Telegram bot connection here

2.3 Insert the correct OpenAI key (hopefully this will soon no longer be needed, because a new language model has appeared in the list for LangChain)

https://tinyurl.com/ywrw7lfr

https://tinyurl.com/yute7e8r

2.4 Insert the correct Assistant ID

https://tinyurl.com/yukoon8x

https://tinyurl.com/yrvbjkc9

2.5 Save

Voila, the prototype is ready!
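
For readers wondering what such a flow has to do with the key and the Assistant ID from the steps above, here is a rough sketch of the sequence of Assistants API calls a chatbot typically makes. I have not inspected the linked workflow, so this is an assumption about its shape, not a description of it. `assistant_requests` is a hypothetical helper, `THREAD_ID` and `RUN_ID` stand in for IDs returned by earlier responses, and the `OpenAI-Beta` header value is the one used at the beta launch, so check the current OpenAI docs before relying on it.

```python
API_BASE = "https://api.openai.com/v1"


def assistant_requests(api_key, assistant_id, user_text,
                       thread_id="THREAD_ID", run_id="RUN_ID",
                       beta="assistants=v1"):
    """Describe the HTTP calls for one question/answer round trip.

    Returns plain dicts (method, url, headers, json) and sends nothing.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        "OpenAI-Beta": beta,  # assumption: value from the beta launch
    }
    thread_url = f"{API_BASE}/threads/{thread_id}"
    return [
        # 1. Create a conversation thread (its id replaces THREAD_ID).
        {"method": "POST", "url": f"{API_BASE}/threads", "headers": headers, "json": {}},
        # 2. Add the user's message to the thread.
        {"method": "POST", "url": f"{thread_url}/messages", "headers": headers,
         "json": {"role": "user", "content": user_text}},
        # 3. Start a run with the assistant (its id replaces RUN_ID).
        {"method": "POST", "url": f"{thread_url}/runs", "headers": headers,
         "json": {"assistant_id": assistant_id}},
        # 4. Poll the run until it has finished.
        {"method": "GET", "url": f"{thread_url}/runs/{run_id}", "headers": headers, "json": None},
        # 5. Read the thread's messages to get the reply.
        {"method": "GET", "url": f"{thread_url}/messages", "headers": headers, "json": None},
    ]


if __name__ == "__main__":
    # "sk-..." and "asst_..." are placeholders, not real credentials.
    for req in assistant_requests("sk-...", "asst_...", "Hello!"):
        print(req["method"], req["url"])
```

Each entry maps naturally onto one HTTP request step in a workflow, with the polling step repeated until the run completes.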

Just in case, here is the schema in Airtable

Template__TG___Assistant_API.json - Google Drive live link to json

@RedPacketSec thanks for sharing as well. Would you be open to sharing your template JSON (after removing your private info)?