I’m running n8n in queue mode (with separate webhook and worker processes) using the 8gears/n8n Helm chart on Kubernetes (GKE). I configured PostgreSQL as the database and validated the connection using a custom Node.js script, which works perfectly. However, n8n seems to be falling back to SQLite, as indicated by the log message:

Scaling mode is not officially supported with sqlite. Please use PostgreSQL instead.

I want to ensure that n8n uses PostgreSQL correctly in queue mode and understand why it might be falling back to SQLite despite the successful PostgreSQL connection.

What is the error message (if any)?

The following message appears in the n8n logs:

Scaling mode is not officially supported with sqlite. Please use PostgreSQL instead.

Please share your workflow

This issue is related to the n8n setup and configuration, not a specific workflow. However, here is the script I used to validate the PostgreSQL connection:

const { Client } = require('pg');

// Required environment variables
const requiredEnvVars = [
  'DB_POSTGRESDB_HOST',
  'DB_POSTGRESDB_USER',
  'DB_POSTGRESDB_PASSWORD',
  'DB_POSTGRESDB_DATABASE'
];

// Validate environment variables
function validateEnvVars() {
  const missingVars = requiredEnvVars.filter(envVar => !process.env[envVar]);
  if (missingVars.length > 0) {
    console.error('Error: The following environment variables are missing:');
    missingVars.forEach(envVar => console.error(`- ${envVar}`));
    process.exit(1);
  }
  console.log('All required environment variables are set.');
}

// Test PostgreSQL connection
async function testPostgresConnection() {
  const client = new Client({
    host: process.env.DB_POSTGRESDB_HOST,
    user: process.env.DB_POSTGRESDB_USER,
    password: process.env.DB_POSTGRESDB_PASSWORD,
    database: process.env.DB_POSTGRESDB_DATABASE,
    port: 5432, // Default PostgreSQL port
  });

  try {
    await client.connect();
    console.log('Successfully connected to PostgreSQL.');

    // Execute a simple query to confirm the connection
    const res = await client.query('SELECT version();');
    console.log('PostgreSQL version:', res.rows[0].version);
  } catch (err) {
    console.error('Error connecting to PostgreSQL:', err);
    process.exit(1);
  } finally {
    await client.end();
  }
}

// Run validations
(async () => {
  validateEnvVars();
  await testPostgresConnection();
})();

Share the output returned by the last node

This issue is not related to a specific workflow or node output. However, here is the output from the validation script:

All required environment variables are set.
Successfully connected to PostgreSQL.
PostgreSQL version: PostgreSQL 15.12 on x86_64-pc-linux-gnu, compiled by Debian clang version 12.0.1, 64-bit

Information on your n8n setup

- n8n version: 1.84.1

- Database (default: SQLite): PostgreSQL 15.12

- n8n EXECUTIONS_PROCESS setting (default: own, main): queue mode (webhook and worker)

- Running n8n via: Kubernetes (GKE) using the 8gears/n8n Helm chart

- Operating system: Debian-based container

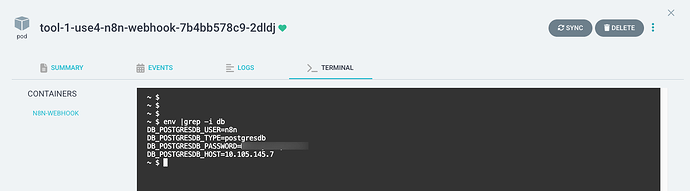

Environment Variables

Here are the environment variables I’m using in the Helm chart:

env:
  - name: DB_TYPE
    value: "postgresdb"
  - name: DB_POSTGRESDB_HOST
    value: "10.105.145.7"
  - name: DB_POSTGRESDB_USER
    value: "n8n"
  - name: DB_POSTGRESDB_PASSWORD
    value: "MYHsdasaddVqcFdfi8"
  - name: DB_POSTGRESDB_DATABASE
    value: "n8n"
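
In queue mode the main, worker, and webhook processes run in separate pods, and each one reads only its own environment, so a variable set for one of them is not automatically visible to the others. The following is a rough sketch for comparing them, assuming the environment of each pod has been captured into a plain object (for example by printing `process.env` in each container). The function name `findMissingDbVars`, the snapshot shape, and the sample values are all hypothetical.

```javascript
// Variables every n8n process is expected to need for PostgreSQL
// (the names come from the env block in this post).
const REQUIRED_DB_VARS = [
  'DB_TYPE',
  'DB_POSTGRESDB_HOST',
  'DB_POSTGRESDB_USER',
  'DB_POSTGRESDB_PASSWORD',
  'DB_POSTGRESDB_DATABASE',
];

// Hypothetical helper: takes { processName: envObject } and returns
// { processName: [missing variable names] } for processes that lack any.
function findMissingDbVars(snapshots) {
  const report = {};
  for (const [processName, env] of Object.entries(snapshots)) {
    const missing = REQUIRED_DB_VARS.filter((name) => !env[name]);
    if (missing.length > 0) {
      report[processName] = missing;
    }
  }
  return report;
}

// Illustrative data only: "main" is fully configured, "worker" is not.
const example = {
  main: {
    DB_TYPE: 'postgresdb',
    DB_POSTGRESDB_HOST: 'db',
    DB_POSTGRESDB_USER: 'n8n',
    DB_POSTGRESDB_PASSWORD: 'secret',
    DB_POSTGRESDB_DATABASE: 'n8n',
  },
  worker: { DB_POSTGRESDB_HOST: 'db' },
};

console.log(findMissingDbVars(example));
```

Any process listed in the result would be a candidate for the one emitting the SQLite warning.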

Additional Information

- I confirmed that the PostgreSQL database is accessible and that the required tables (execution_entity, workflow_entity, etc.) have been created.

- The issue persists even after restarting n8n and confirming that all environment variables are set correctly.

- I’m using the 8gears/n8n Helm chart for deployment, which might have specific configurations or limitations.

Questions for the n8n Team

- Are there any additional configurations required to enforce PostgreSQL usage in queue mode when using the 8gears/n8n Helm chart?

- Could there be a bug causing n8n to fall back to SQLite even when PostgreSQL is correctly configured?

- Are there any known issues with PostgreSQL 15.12 and n8n, especially when deployed via Helm?

Useful Links

- n8n Documentation on Queue Mode

- n8n Documentation on PostgreSQL Configuration

- 8gears/n8n Helm Chart Repository
