I'm running n8n 1.56.2 (self-hosted) with docker-compose.

Ollama runs as the local app (ollama.app) on macOS.

When I try to use a model in the Summarization Chain node, it fails with the error [Problem in node ‘Summarization Chain’: fetch failed].

This is the error log from n8n:

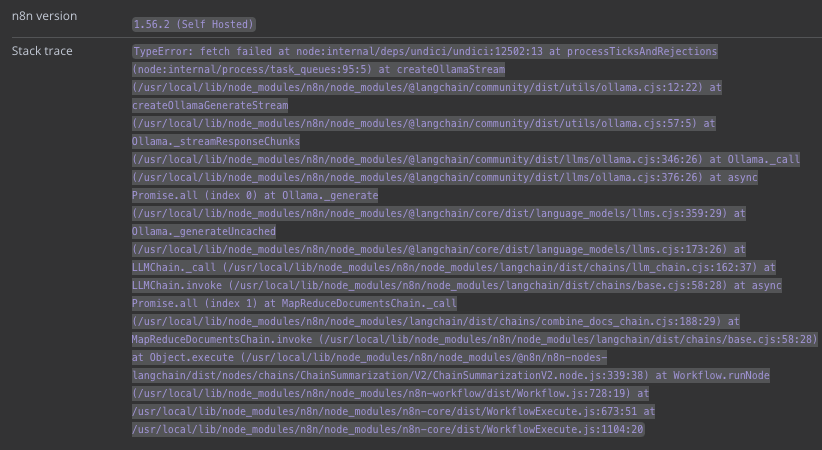

Stack trace:

TypeError: fetch failed
    at node:internal/deps/undici/undici:12502:13
    at processTicksAndRejections (node:internal/process/task_queues:95:5)
    at createOllamaStream (/usr/local/lib/node_modules/n8n/node_modules/@langchain/community/dist/utils/ollama.cjs:12:22)
    at createOllamaGenerateStream (/usr/local/lib/node_modules/n8n/node_modules/@langchain/community/dist/utils/ollama.cjs:57:5)
    at Ollama._streamResponseChunks (/usr/local/lib/node_modules/n8n/node_modules/@langchain/community/dist/llms/ollama.cjs:346:26)
    at Ollama._call (/usr/local/lib/node_modules/n8n/node_modules/@langchain/community/dist/llms/ollama.cjs:376:26)
    at async Promise.all (index 0)
    at Ollama._generate (/usr/local/lib/node_modules/n8n/node_modules/@langchain/core/dist/language_models/llms.cjs:359:29)
    at Ollama._generateUncached (/usr/local/lib/node_modules/n8n/node_modules/@langchain/core/dist/language_models/llms.cjs:173:26)
    at LLMChain._call (/usr/local/lib/node_modules/n8n/node_modules/langchain/dist/chains/llm_chain.cjs:162:37)
    at LLMChain.invoke (/usr/local/lib/node_modules/n8n/node_modules/langchain/dist/chains/base.cjs:58:28)
    at async Promise.all (index 1)
    at MapReduceDocumentsChain._call (/usr/local/lib/node_modules/n8n/node_modules/langchain/dist/chains/combine_docs_chain.cjs:188:29)
    at MapReduceDocumentsChain.invoke (/usr/local/lib/node_modules/n8n/node_modules/langchain/dist/chains/base.cjs:58:28)
    at Object.execute (/usr/local/lib/node_modules/n8n/node_modules/@n8n/n8n-nodes-langchain/dist/nodes/chains/ChainSummarization/V2/ChainSummarizationV2.node.js:339:38)
    at Workflow.runNode (/usr/local/lib/node_modules/n8n/node_modules/n8n-workflow/dist/Workflow.js:728:19)
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/WorkflowExecute.js:673:51
    at /usr/local/lib/node_modules/n8n/node_modules/n8n-core/dist/WorkflowExecute.js:1104:20
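
Since the trace ends in a plain `fetch failed` inside `createOllamaStream`, one thing worth ruling out is whether the Ollama server is reachable at all from where the request is made. Below is a minimal sketch of such a check, not a confirmed diagnosis. The helper `check_ollama` and the `CANDIDATE_URLS` list are hypothetical names for illustration; the URLs assume Ollama's default port 11434 and the `host.docker.internal` hostname that Docker Desktop for Mac exposes to containers. The base URL actually configured in the n8n Ollama credential is not shown above, so it may differ.

```python
import json
import urllib.request

# Assumed candidates (not taken from the setup above): 11434 is Ollama's
# default port, and host.docker.internal is how a container on Docker
# Desktop for Mac reaches services running on the host.
CANDIDATE_URLS = [
    "http://localhost:11434",
    "http://host.docker.internal:11434",
]


def check_ollama(base_url: str, timeout: float = 3.0) -> tuple[bool, str]:
    """Return (reachable, detail) for an Ollama server at base_url."""
    # GET /api/tags lists the models available on the Ollama server.
    url = base_url.rstrip("/") + "/api/tags"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError) as exc:
        # OSError covers refused connections, DNS failures, timeouts and
        # HTTP errors; ValueError covers malformed URLs and invalid JSON.
        return False, f"{type(exc).__name__}: {exc}"
    models = payload.get("models", []) if isinstance(payload, dict) else []
    names = [m.get("name", "?") for m in models if isinstance(m, dict)]
    return True, "models: " + ", ".join(names)
```

Calling `check_ollama(url)` for each entry in `CANDIDATE_URLS` shows which address answers. The result depends on where the script runs: inside a container, `localhost` refers to the container itself rather than the Mac, so a check that succeeds on the host can still fail from the n8n container.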