Question

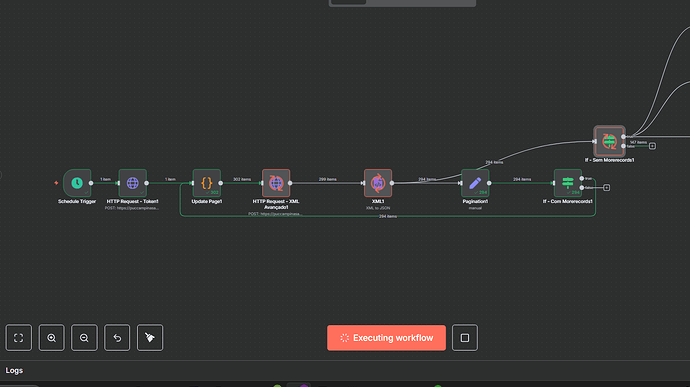

My workflow is designed to achieve a simple but essential goal: extract data from the Microsoft Dynamics CRM API using an XML Fetch, convert it to JSON, and then load the updated data into a BigQuery table, merging it with the existing records. This allows me to keep an up-to-date status of all records on a daily basis. The process runs a few times each day.

The current flow works as follows:

-

Authenticate with Microsoft Dynamics via an HTTP request to obtain a token.

-

Loop through pagination to make multiple XML HTTP requests to Dynamics until all pages are retrieved.

-

Convert XML to JSON.

-

When no more pages remain:

-

Create or replace a BigQuery staging table with the same schema as the main table.

-

Create a backup of the main table.

-

Wait 5–10 minutes.

-

Transform all the retrieved Dynamics data.

-

Load the transformed data into the staging table.

-

Replace the matching records in the main table with the updated data from the staging table.

-

I have tested the flow, and it works correctly for smaller data pulls.

However, when fetching all pages, the process becomes too heavy and eventually gets stuck in what appears to be an endless loop of processing.

I need to find a solution so the flow can reliably handle large pagination runs without overloading the execution, ensuring it completes successfully every time.

Workflow and Print Screen from the Main Issue

Information on your n8n setup

-

n8n version: 1.102

-

Database: postgress

-

n8n EXECUTIONS_PROCESS main

-

Running n8n via Docker

-

Operating system: ubuntu